You’re (Finally) In The Cloud. Now, Stop Acting So On-Prem.

Imagine you’ve been building houses with a hammer and nails for most of your career, and I gave you a nail gun. But instead of pressing it to the wood and pulling the trigger, you turn it sideways and hit the nail with the gun as if it were a hammer.

You would probably think it’s expensive and not overly effective, while the site’s inspector is going to rightly view it as a safety hazard.

Well, that’s because you’re using modern tooling, but with legacy thinking and processes. And while this analogy isn’t a perfect encapsulation of how some data teams operate after moving from on-premises to a modern data stack, it’s close.

Teams quickly understand how hyper-elastic compute and storage services can enable them to handle more diverse data types at a previously unheard-of volume and velocity, but they don’t always understand the impact of the cloud on their workflows.

So perhaps a better analogy for these recently migrated data teams would be if I gave you 1,000 nail guns…and then watched you turn them all sideways to hit 1,000 nails at the same time.

Regardless, the important thing to understand is that the modern data stack doesn’t just allow you to store and process bigger data faster, it allows you to handle data fundamentally differently to accomplish new goals and extract different types of value.

This is partly due to the increase in scale and speed, but also as a result of richer metadata and more seamless integrations across the ecosystem.

In this post, I highlight three of the more common ways I see data teams change their behavior in the cloud, and five ways they don’t (but should). Let’s dive in.

3 Ways Data Teams Changed with the Cloud

There are reasons data teams move to a modern data stack (beyond the CFO finally freeing up budget). These use cases are typically the first and easiest behavior shift for data teams once they enter the cloud. They are:

Moving from ETL to ELT to accelerate time-to-insight

You can’t just load anything into your on-premises database, especially not if you want a query to return before you hit the weekend. As a result, these data teams need to carefully consider what data to pull and how to transform it into its final state, often via a pipeline hardcoded in Python.

That’s like making specific meals to order for every data consumer rather than putting out a buffet, and as anyone who has been on a cruise ship knows, when you need to feed an insatiable demand for data across the organization, a buffet is the way to go.

This was the case for AutoTrader UK technical lead Edward Kent who spoke with my team last year about data trust and the demand for self-service analytics.

“We want to empower AutoTrader and its customers to make data-informed decisions and democratize access to data through a self-serve platform….As we’re migrating trusted on-premises systems to the cloud, the users of those older systems need to have trust that the new cloud-based technologies are as reliable as the older systems they’ve used in the past,” he said.

When data teams migrate to the modern data stack, they gleefully adopt automated ingestion tools like Fivetran or transformation tools like dbt and Spark to go along with more sophisticated data curation strategies. Analytical self-service opens up a whole new can of worms, and it’s not always clear who should own data modeling, but on the whole it’s a much more efficient way of addressing analytical (and other!) use cases.
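To make the ELT pattern concrete, here is a minimal sketch of "load first, transform later." It uses Python's built-in SQLite as a stand-in for a cloud warehouse, and the `raw_listings` table, its columns, and its rows are hypothetical, invented purely for illustration.

```python
import sqlite3

# SQLite stands in for the warehouse; table and values are hypothetical.
conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw records untouched.
conn.execute(
    "CREATE TABLE raw_listings (id INTEGER, price_pence INTEGER, status TEXT)"
)
conn.executemany(
    "INSERT INTO raw_listings VALUES (?, ?, ?)",
    [(1, 1_250_000, "live"), (2, 899_900, "sold"), (3, 1_575_000, "live")],
)

# Transform: model the data afterwards, inside the warehouse, with SQL.
conn.execute(
    """
    CREATE VIEW live_listings AS
    SELECT id, price_pence / 100.0 AS price_gbp
    FROM raw_listings
    WHERE status = 'live'
    """
)

live = conn.execute(
    "SELECT id, price_gbp FROM live_listings ORDER BY id"
).fetchall()
print(live)
```

The raw table stays available, so the next data consumer can build a different view from the same buffet without asking anyone to rebuild a pipeline.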

Real-time data for operational decision making

In the modern data stack, data can move fast enough that it no longer needs to be reserved for those daily metric pulse checks. Data teams can take advantage of Delta Live Tables, Snowpark, Kafka, Kinesis, micro-batching and more.

Not every team has a real-time data use case, but those that do are typically well aware. These are usually companies with significant logistics in need of operational support or technology companies with strong reporting integrated into their products (although a good portion of the latter were born in the cloud).

Challenges still exist of course. These can sometimes involve running parallel architectures (analytical batches and real-time streams) and trying to reach a level of quality control that is not possible to the degree most would like. But most data leaders quickly understand the value unlock that comes from being able to more directly support real-time operational decision making.

Generative AI and machine learning

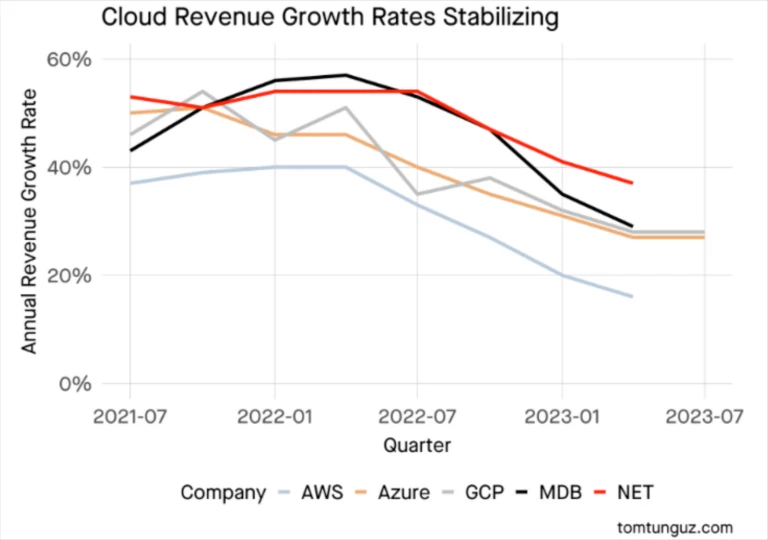

Data teams are acutely aware of the GenAI wave, and many industry watchers suspect that this emerging technology is driving a huge wave of infrastructure modernization and utilization.

But before ChatGPT plagiarized (sorry, generated) its first essay, machine learning applications had slowly moved from cutting-edge to standard best practice for a number of data-intensive industries including media, e-commerce, and advertising.

Today, many data teams immediately start examining these use cases the minute they have scalable storage and compute (although some would benefit from building a better foundation).

If you recently moved to the cloud and haven’t asked the business how these use cases could better support the business, put it on the calendar. For this week. Or today. You’ll thank me later.

5 Ways Data Teams Still Act Like They Are On-Prem

Now, let’s take a look at some of the unrealized opportunities formerly on-premises data teams can be slower to exploit.

Side note: I want to be clear that while my earlier analogy was a bit humorous, I am not making fun of the teams that still operate on-premises or are operating in the cloud using the processes below. Change is hard. It’s even more difficult to do when you are facing a constant backlog and ever increasing demand.

Data testing

Data teams that are on-premises don’t have the scale or rich metadata from central query logs or modern table formats to easily run machine learning-driven anomaly detection (in other words, data observability).

Instead, they work with domain teams to understand data quality requirements and translate those into SQL rules, or data tests. For example, customer_id should never be NULL or currency_conversion should never have a negative value. There are on-premises tools designed to help accelerate and manage this process.
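As a rough illustration of what those two rules look like as data tests, here is a small sketch using Python's built-in SQLite. The `orders` table and its rows are hypothetical; only the two column rules come from the examples above.

```python
import sqlite3

# Hypothetical table and rows, used only to illustrate the two rules above.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (customer_id INTEGER, currency_conversion REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 1.08), (2, 0.79), (None, 1.00), (4, -0.50)],
)

# Each data test is a SQL query that counts the rows violating one rule.
DATA_TESTS = {
    "customer_id_not_null": (
        "SELECT COUNT(*) FROM orders WHERE customer_id IS NULL"
    ),
    "currency_conversion_not_negative": (
        "SELECT COUNT(*) FROM orders WHERE currency_conversion < 0"
    ),
}


def run_data_tests(connection, tests):
    """Return a mapping of test name to number of violating rows."""
    results = {}
    for name, sql in tests.items():
        (violations,) = connection.execute(sql).fetchone()
        results[name] = violations
    return results


results = run_data_tests(conn, DATA_TESTS)
for name, violations in results.items():
    status = "PASS" if violations == 0 else "FAIL"
    print(f"{status} {name}: {violations} violating row(s)")
```

Two rules on one table is manageable. The trouble starts when this dictionary grows to hundreds of thousands of entries.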

When these data teams get to the cloud, their first thought isn’t to approach data quality differently, it’s to execute data tests at cloud scale. It’s what they know.

I’ve seen case studies that read like horror stories (and no I won’t name names) where a data engineering team is running millions of tasks across thousands of DAGs to monitor data quality across hundreds of pipelines. Yikes!

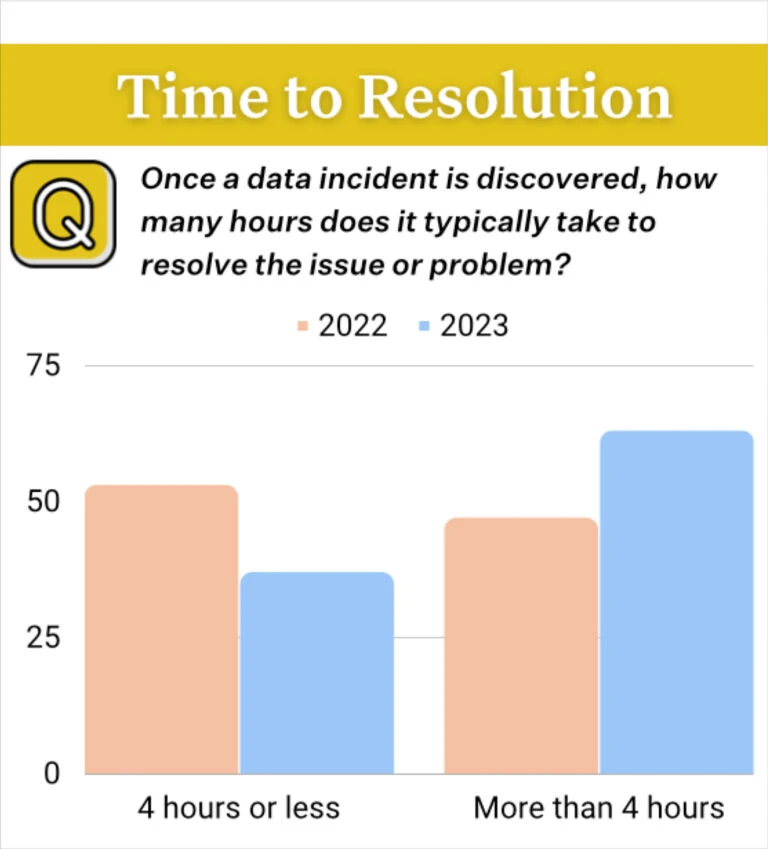

What happens when you run a half million data tests? I’ll tell you. Even if the vast majority pass, there are still tens of thousands that will fail. And they will fail again tomorrow, because there is no context to expedite root cause analysis or even begin to triage and figure out where to start.

You’ve somehow alert fatigued your team AND still not reached the level of coverage you need. Not to mention wide-scale data testing is both time and cost intensive.

Instead, data teams should leverage technologies that can detect, triage, and help with root cause analysis of potential issues, while reserving data tests (or custom monitors) for the clearest thresholds on the most important values within the most used tables.
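To give a feel for the difference, here is a deliberately simple sketch of learning "normal" from history rather than hand-writing a threshold. It uses a plain z-score on daily row counts, which is my own stand-in for illustration and not how any particular data observability product works; the numbers and the `is_anomalous` helper are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical row counts for one table over the past week.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_080, 10_150]

print(is_anomalous(daily_row_counts, 10_100))  # an ordinary day
print(is_anomalous(daily_row_counts, 4_300))   # a sudden drop in volume
```

Nobody had to decide in advance that 4,300 rows is too few. The same check can run against every table without a domain team writing a rule for each one.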

Data modeling for data lineage

There are many legitimate reasons to support a central data model, and you’ve probably read all of them in an awesome Chad Sanderson post.

But, every once in a while I run into data teams on the cloud that are investing considerable time and resources into maintaining data models for the sole reason of maintaining and understanding data lineage. When you are on-premises, that is essentially your best bet unless you want to read through long blocks of SQL code and create a corkboard so full of flashcards and yarn that your significant other starts asking if you are OK.

(“No Lior! I’m not OK, I’m trying to understand how this WHERE clause changes which columns are in this JOIN!”)

Multiple tools within the modern data stack, including data catalogs, data observability platforms, and data repositories, can leverage metadata to create automated data lineage. It’s just a matter of picking a flavor.
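The idea behind automated lineage can be sketched in a few lines: read the queries that built each table and record which tables they read from. This is a toy version under heavy assumptions. The query log and table names are hypothetical, and the regular expressions only handle very simple statements; real tools rely on full SQL parsers and warehouse metadata.

```python
import re
from collections import defaultdict

# Hypothetical query log; real logs come from the warehouse itself.
QUERY_LOG = [
    "CREATE TABLE stg_orders AS SELECT * FROM raw_orders",
    "CREATE TABLE stg_customers AS SELECT * FROM raw_customers",
    "CREATE TABLE fct_revenue AS SELECT c.region, SUM(o.amount) AS revenue "
    "FROM stg_orders o JOIN stg_customers c ON o.customer_id = c.id "
    "GROUP BY c.region",
]

# Toy patterns: the table being written, and the tables being read.
TARGET = re.compile(r"(?:CREATE\s+TABLE|INSERT\s+INTO)\s+(\w+)", re.IGNORECASE)
SOURCES = re.compile(r"(?:FROM|JOIN)\s+(\w+)", re.IGNORECASE)


def build_lineage(queries):
    """Map each written table to the set of tables it reads from."""
    upstream = defaultdict(set)
    for sql in queries:
        target = TARGET.search(sql)
        if target is None:
            continue  # not a statement that writes a table
        upstream[target.group(1)].update(SOURCES.findall(sql))
    return dict(upstream)


def all_upstream(table, upstream):
    """Walk the graph to collect every direct and indirect source."""
    seen = set()
    stack = [table]
    while stack:
        for parent in upstream.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


lineage = build_lineage(QUERY_LOG)
print(sorted(all_upstream("fct_revenue", lineage)))
```

No corkboard, no yarn, and nobody has to maintain a data model just to answer "where does this table come from?"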

Customer segmentation

In the old world, the view of the customer is flat whereas we know it really should be a 360 global view.

This limited customer view is the result of pre-modeled data (ETL), experimentation constraints, and the length of time required for on-premises databases to calculate more sophisticated queries (unique counts, distinct values) on larger data sets.

Unfortunately, data teams don’t always remove the blinders from their customer lens once those constraints have been removed in the cloud. There are often several reasons for this, but the largest culprits by far are good old-fashioned data silos.

The customer data platform that the marketing team operates is still alive and kicking. That team could benefit from enriching their view of prospects and customers with other domains’ data stored in the warehouse/lakehouse, but the habits and sense of ownership built from years of campaign management are hard to break.

So instead of targeting prospects based on the highest estimated lifetime value, it’s going to be cost per lead or cost per click. This is a missed opportunity for data teams to contribute value in a direct and highly visible way to the organization.

Exporting data for external sharing

Copying and exporting data is the worst. It takes time, adds costs, creates versioning issues, and makes access control virtually impossible.

Instead of taking advantage of your modern data stack to create a pipeline to export data to your typical partners at blazing fast speeds, more data teams on the cloud should leverage zero copy data sharing. Just like managing the permissions of a cloud file has largely replaced the email attachment, zero copy data sharing allows access to data without having to move it from the host environment.

Both Snowflake and Databricks have announced and heavily featured their data sharing technologies at their annual summits the last two years, and more data teams need to start taking advantage.

Optimizing cost and performance

Within many on-premises systems, it falls to the database administrator to oversee all the variables that could impact overall performance and regulate as necessary.

Within the modern data stack, on the other hand, you often see one of two extremes.

In a few cases, the role of DBA remains or it’s farmed out to a central data platform team, which can create bottlenecks if not managed properly. More commonly, however, cost or performance optimization becomes the wild west until a particularly eye-watering bill hits the CFO’s desk.

This often occurs when data teams don’t have the right cost monitors in place, and there is a particularly aggressive outlier event (perhaps bad code or exploding JOINs).
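If you have never watched a JOIN explode, here is a small sketch of how it happens, again with SQLite standing in for the warehouse. The `orders` and `touches` tables are hypothetical. The point is only that joining on a key that is not unique on either side multiplies rows instead of matching them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE touches (customer_id INTEGER, channel TEXT)")

# 100 orders and 100 marketing touches, all for the same customer_id.
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0)] * 100)
conn.executemany("INSERT INTO touches VALUES (?, ?)", [(1, "email")] * 100)

# The join key is not unique on either side, so every order
# pairs with every touch.
(joined_rows,) = conn.execute(
    """
    SELECT COUNT(*)
    FROM orders o
    JOIN touches t ON o.customer_id = t.customer_id
    """
).fetchone()
print(joined_rows)
```

Two hundred input rows become 10,000 output rows. Scale the inputs to millions and you have the kind of outlier event that shows up on the bill.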

Additionally, some data teams fail to take full advantage of the “pay for what you use” model and instead opt for committing to a predetermined amount of credits (typically at a discount)…and then exceed it. While there is nothing inherently wrong in credit commit contracts, having that runway can create some bad habits that can build up over time if you aren’t careful.

The cloud enables and encourages a more continuous, collaborative and integrated approach for DevOps/DataOps, and the same is true when it comes to FinOps. The teams I see that are the most successful with cost optimization within the modern data stack are those that make it part of their daily workflows and incentivize those closest to the cost.

“The rise of consumption based pricing makes this even more critical as the release of a new feature could potentially cause costs to rise exponentially,” said Tom Milner at Tenable. “As the manager of my team, I check our Snowflake costs daily and will make any spike a priority in our backlog.”

This creates feedback loops, shared learnings, and thousands of small, quick fixes that drive big results.

“We’ve got alerts set up when someone queries anything that would cost us more than $1. This is quite a low threshold, but we’ve found that it doesn’t need to cost more than that. We found this to be a good feedback loop. [When this alert occurs] it’s often someone forgetting a filter on a partitioned or clustered column and they can learn quickly,” said Stijn Zanders at Aiven.
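A per-query cost alert like this one can be sketched in a few lines. Everything here other than the $1 threshold is an assumption on my part: the price per terabyte scanned, the shape of the query log, and the helper names are all hypothetical, and your warehouse may bill on compute time or credits rather than bytes scanned.

```python
PRICE_PER_TB_USD = 5.00      # hypothetical rate; check your own contract
ALERT_THRESHOLD_USD = 1.00   # the $1 threshold from the quote above
BYTES_PER_TB = 1024 ** 4


def estimated_cost_usd(query):
    """Estimate a query's cost from the bytes it scanned."""
    return query["bytes_scanned"] / BYTES_PER_TB * PRICE_PER_TB_USD


def queries_to_alert_on(queries, threshold=ALERT_THRESHOLD_USD):
    """Return the queries whose estimated cost exceeds the threshold."""
    return [q for q in queries if estimated_cost_usd(q) > threshold]


# Hypothetical query log entries.
query_log = [
    {"user": "ana", "bytes_scanned": 20 * 1024 ** 3},  # filtered on partition
    {"user": "ben", "bytes_scanned": 3 * 1024 ** 4},   # forgot the filter
]

flagged = queries_to_alert_on(query_log)
for q in flagged:
    print(f"ALERT {q['user']}: ~${estimated_cost_usd(q):.2f}")
```

The alert goes to the person who ran the query, which is what turns a cost report into a feedback loop.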

Finally, deploying charge-back models across teams, previously unfathomable in the pre-cloud days, is a complicated, but ultimately worthwhile endeavor I’d like to see more data teams evaluate.
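The simplest possible charge-back model splits the bill in proportion to what each team consumed. The sketch below assumes you can already attribute credits to teams, which is usually the hard part; the team names, credit numbers, and `charge_back` helper are hypothetical.

```python
def charge_back(total_bill_usd, credits_by_team):
    """Split a bill across teams in proportion to credits consumed."""
    total_credits = sum(credits_by_team.values())
    if total_credits == 0:
        return {team: 0.0 for team in credits_by_team}
    return {
        team: round(total_bill_usd * credits / total_credits, 2)
        for team, credits in credits_by_team.items()
    }


# Hypothetical monthly usage, in warehouse credits.
usage = {"marketing": 500, "finance": 300, "product": 200}
allocation = charge_back(12_000.00, usage)
print(allocation)
```

Real models get more complicated quickly (shared tables, platform overhead, committed discounts), but even a rough split like this gives each team a number they can act on.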

Be a learn-it-all

Microsoft CEO Satya Nadella has spoken about how he deliberately shifted the company’s organizational culture from “know-it-alls” to “learn-it-alls.” This would be my best advice for data leaders, whether you have just migrated or have been on the leading edge of data modernization for years.

I understand just how overwhelming it can be. New technologies are coming fast and furious, as are calls from the vendors hawking them. Ultimately, it’s not going to be about having the “most modernist” data stack in your industry, but rather creating alignment between modern tooling, top talent, and best practices.

To do that, always be ready to learn how your peers are tackling many of the challenges you are facing. Engage on social media, read Medium, follow analysts, and attend conferences. I’ll see you there!

Ready to stop acting so on-prem and move from hyperscale data testing to data observability? Let’s talk!
