All About ETL Testing: Checklist, Tools, and 4 Useful SQL Queries

ETL (extract, transform, and load) testing is essentially the data world’s version of a highly rigorous proofreading session.

Imagine if The Wall Street Journal published articles without a final read-through. There might be typos, the facts might be jumbled, or worse, the entire argument could be upside down. So they have editors that scrutinize every word.

Just as a news editor ensures the clarity and accuracy of an article before it hits your screen, ETL testing is the unsung hero ensuring the data your business relies on is spot-on.

ETL testing aims to ensure that the data loaded into a target system is accurate, complete, and consistent, and that it adheres to quality standards.

Table of Contents

When is ETL testing needed?

Really, any time an E, T, or L occurs you should do ETL testing. Some common scenarios are:

- Data Warehousing Projects: Any time data from multiple sources is consolidated for reporting and analytics purposes.

- Data Migration Projects: Make sure the data is migrated accurately without any loss or corruption.

- Business Intelligence (BI) Projects: Accurate and timely data is required for analysis and decision-making.

- Data Integration Projects: In projects where data from different sources needs to be integrated, ETL testing ensures that the integrated data is consistent and accurate.

- Regulatory Compliance: In industries like healthcare and finance, regulatory compliance mandates accurate and complete data reporting.

- Data Quality Assurance: Identify and rectify data quality issues like duplicate records, missing values, and inconsistencies in data.

ETL testing checklist

Here are the essential aspects of each ETL phase:

Extract

🔲 Data extraction logic matches the specified requirements?

🔲 Correct data is pulled from the source?

🔲 No data loss?

Transform

🔲 Data transformation rules are applied?

🔲 Special characters and data types are handled correctly?

🔲 No corruption or unintended changes?

Load

🔲 Logging is in place to capture failures?

🔲 All data is loaded into the target system?

🔲 No duplicates, nulls, or other data quality errors?

🔲 Consistency across data fields and records?

🔲 Compliance with all relevant data governance and security policies?

🔲 Meets business requirements and user expectations?

General

🔲 Security checked for data at rest and in transit?

🔲 Scalability tests to ensure the system can handle increased data volumes?

Popular SQL queries for ETL testing

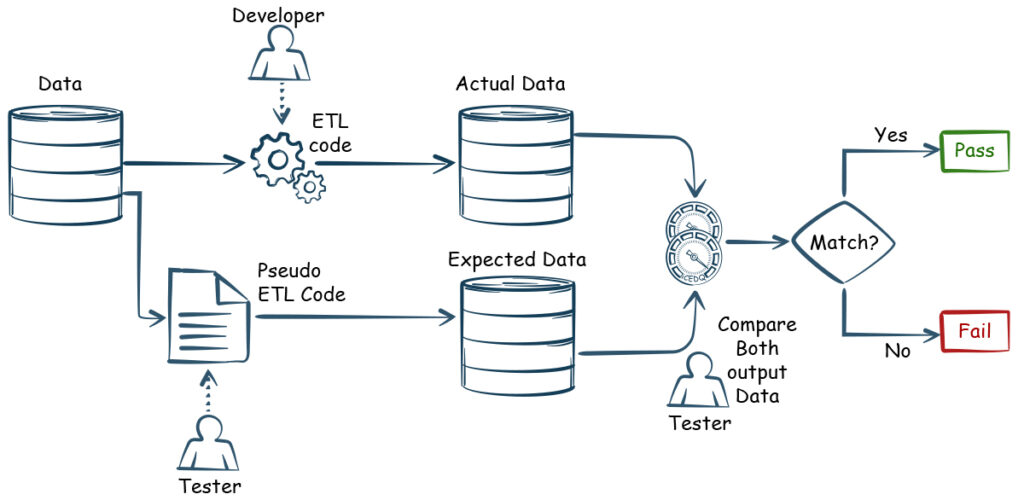

With its many functions and operators, SQL is an indispensable tool to interact directly with the data in its native environment so you can validate and verify it. Here are some example SQL queries for different types of ETL testing scenarios:

1. Test Data Completeness

To check if all the records from the source system are loaded into the target system, you might use a simple count query on both systems and compare the results:

-- Count records in the source table

SELECT COUNT(*) as source_count FROM source_table;

-- Count records in the target table

SELECT COUNT(*) as target_count FROM target_table;2.Test Data Transformation Rules

To validate that the transformation rules have been applied correctly, you can write queries that reflect these rules and compare the output against expected results. Suppose a rule states that all emails in the customer data should be converted to lowercase:

-- Check if email addresses are transformed to lowercase

SELECT email

FROM target_table

WHERE BINARY email != LOWER(email);This query selects any email from the target table that does not match its lowercase version, shining light on an issue with the transformation rule.

3. Test Data Quality

For example you can check for null values, duplicates, or correct data ranges. Here’s how you might check for null values in mandatory fields:

-- Check for NULL values in a mandatory column

SELECT COUNT(*)

FROM target_table

WHERE mandatory_column IS NULL;

To check for duplicate records:

-- Check for duplicate records based on a unique identifier

SELECT unique_identifier, COUNT(*)

FROM target_table

GROUP BY unique_identifier

HAVING COUNT(*) > 1;

-- Check that data falls within acceptable ranges

SELECT *

FROM table_name

WHERE numeric_column < lower_bound OR numeric_column > upper_bound;

4. Test Data Integration

To verify that data from different sources has been integrated correctly, you might need to compare key fields that should be consistent across the tables:

-- Compare data across two tables

SELECT a.key_field, b.key_field

FROM table_a a

JOIN table_b b ON a.key_field = b.key_field

WHERE a.other_field != b.other_field;Use the right tool for the job

While you can cobble together ETL testing automations in SQL or Python, sophisticated ETL scenarios call for more specialized tools.

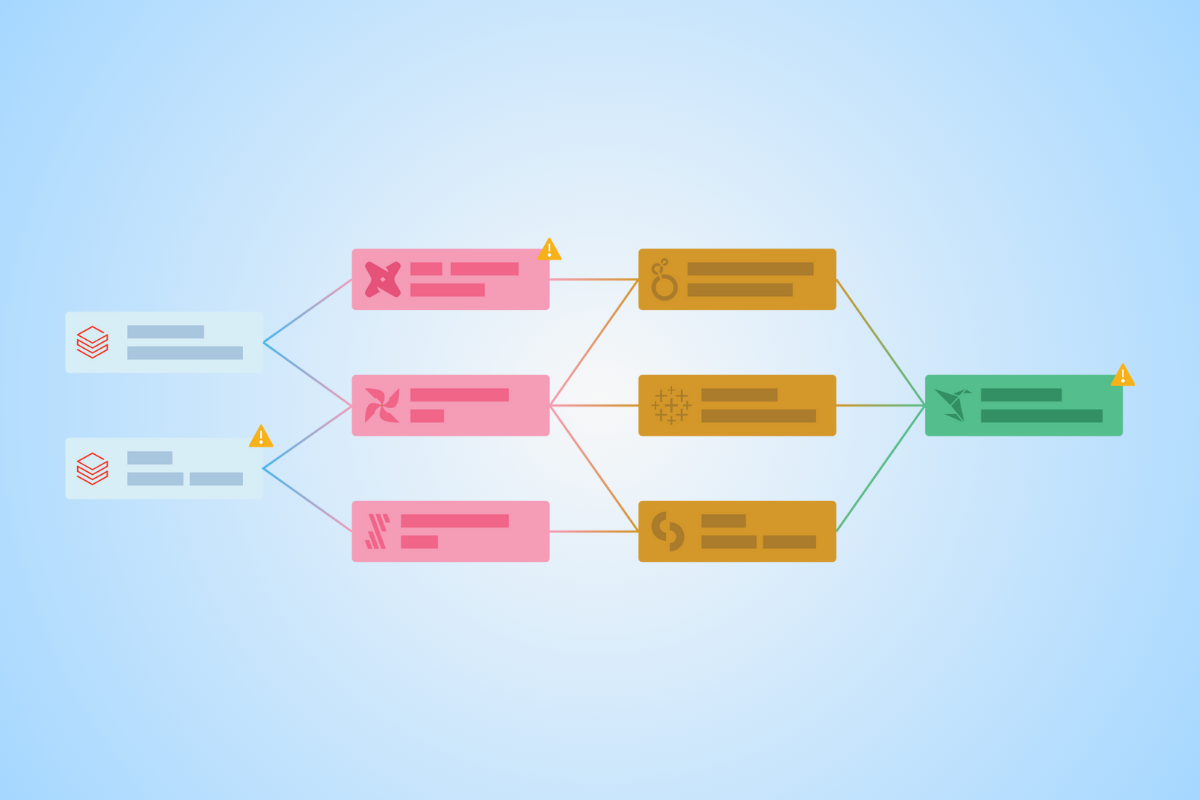

For exotic stuff like non-relational databases, streaming data, or just messy, unformatted data – especially if your job involves the financial and operational backbone of major enterprises – a better way to find and fix bad data fast is data observability platform like Monte Carlo.

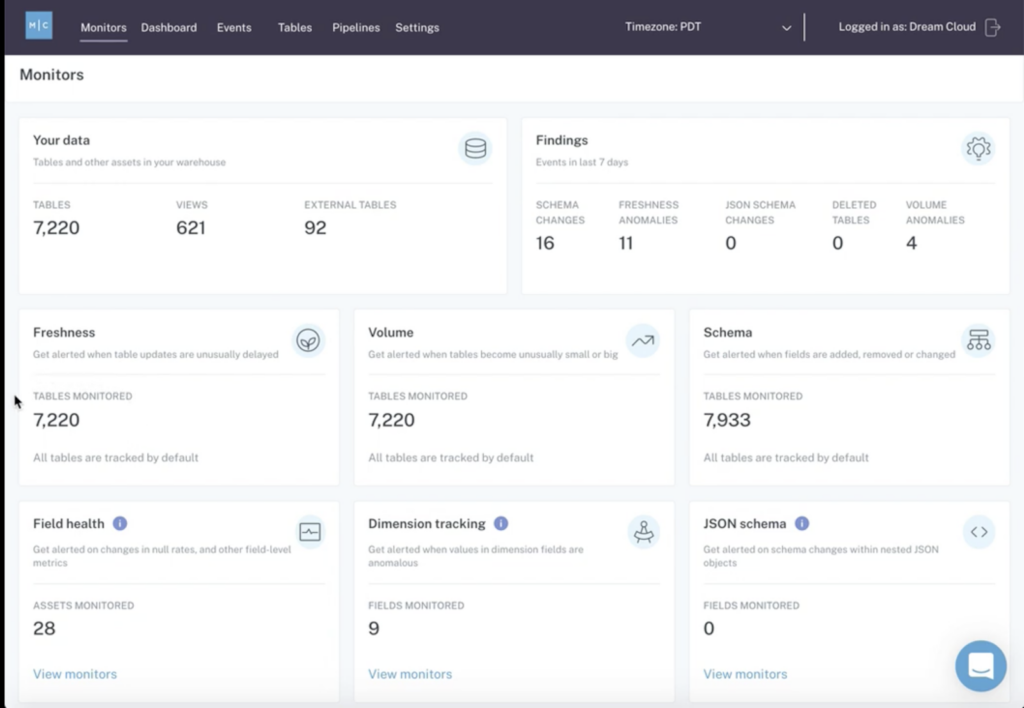

Monte Carlo offers a full suite of tools to help you gain full visibility into your data, systems, and code. It’s ETL testing and much more.

✓ Automated machine learning monitors

✓ Integrated data lineage

✓ Comprehensive root cause analysis

✓ Data quality dashboards

✓ Incident alerts

✓ Integrations with all the tools you use

The dynamic nature of modern ETL processes demands a more robust toolkit. Request a demo of Monte Carlo today.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

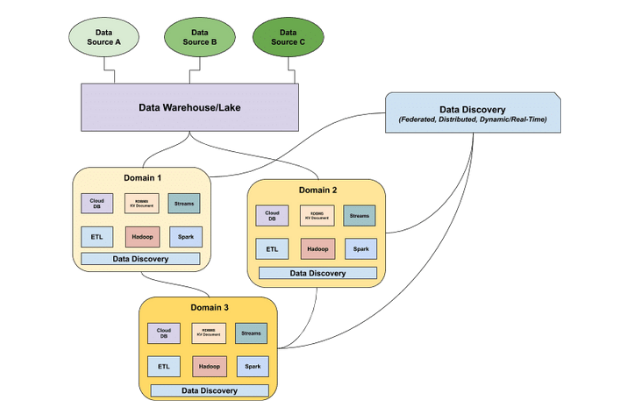

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage