DataOps vs DevOps Explained

While the DevOps methodology has been taking over the world of software development, data teams are just beginning to realize the benefits that a similar approach can bring to the world of data.

Enter the nascent discipline of DataOps. Similar to how DevOps applies CI/CD to software development and operations, DataOps entails a CI/CD-like, automation-first approach to building and scaling data products.

From a conceptual standpoint, DataOps draws many parallels with DevOps, but from an implementation standpoint, the responsibilities and skill sets of DataOps and DevOps engineers couldn’t be more different.


What Is The Difference Between DataOps vs DevOps?

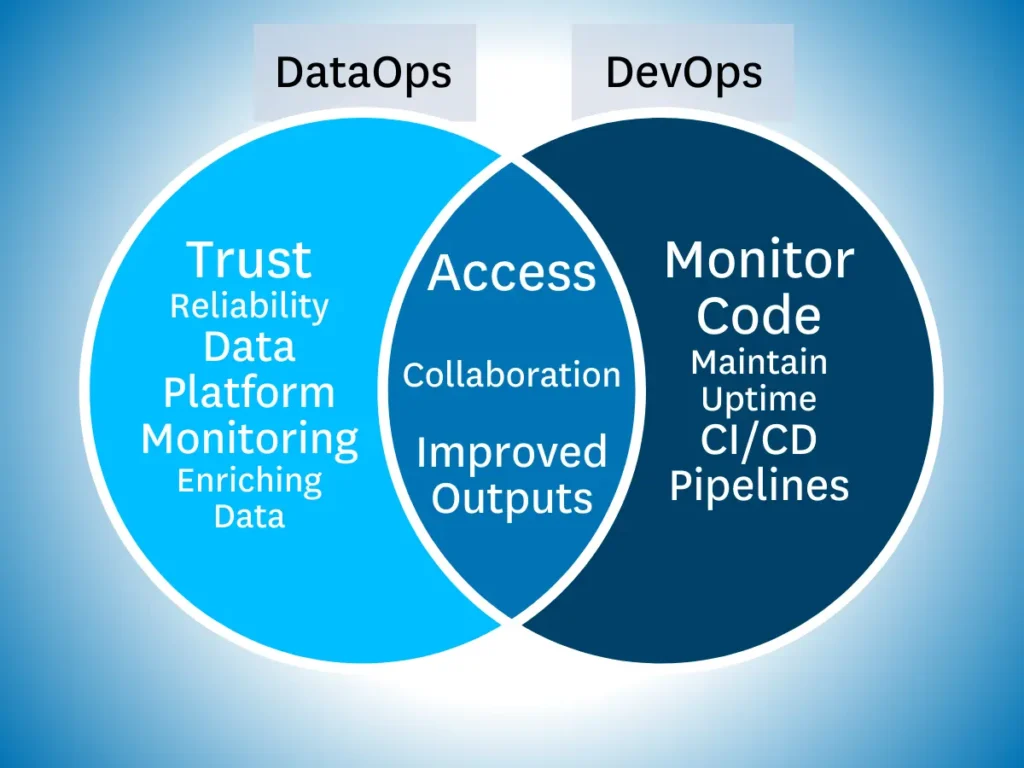

While the two may sound quite similar, DevOps isn’t actually a part of DataOps. Both DataOps and DevOps are founded on the same basic philosophy of access and collaboration between teams, but these two disciplines serve completely separate functions within an organization.

DevOps is a methodology that brings development and operations teams together to make software development and delivery more efficient, while DataOps focuses on breaking down silos between data producers and data consumers to make data more valuable.

So, now that we understand the basics, let’s jump into the differences between DataOps vs DevOps in a bit more detail.

4 Key Differences Between DataOps and DevOps Explained

| DataOps | DevOps |
|---|---|
| Makes sure that data is reliable, trustworthy, and not broken | Makes sure that the website or software (mainly the backend) does not break when code is added, altered, or removed |
| DataOps’ ecosystem consists of databases, data warehouses, schemas, tables, views, and integration logs from other key systems | DevOps’ ecosystem is where CI/CD pipelines are built, code deployment is automated, and uptime and availability are continuously improved |
| DataOps is platform agnostic: it is a group of concepts that can be put into practice wherever there is data | DevOps is also platform agnostic; however, cloud providers have streamlined the DevOps playbook |
| DataOps focuses on monitoring and enriching data | DevOps focuses on the code |

Let’s dive into what DevOps and DataOps engineers do all day, and why, if you work with data, you should implement DataOps at your company.

What Does a DevOps Engineer Do?

DevOps engineers remove silos between software developers (Dev) and operations (Ops) teams to facilitate the seamless and reliable release of software to production. DevOps focuses on service uptime, continuous integration, continuous deployment without breaking production, container orchestration, security, and more.

Nowadays, a DevOps engineer is occasionally called a platform engineer. Platform engineering is a DevOps approach in which organizations build a shared platform that improves developer experience and productivity through self-service capabilities and automated infrastructure operations. It’s still DevOps engineering, but if your organization prefers a shared platform, you may hear the “platform engineering” terminology.

Before DevOps came into play, large organizations like IBM shipped giant, application-wide code releases. Iterations were slow, and debugging and redeploying were close to impossible. With DevOps, software developers can easily test a new feature or pull the plug on an old one without stopping the main server. Such is the power of DevOps.

What is the Salary of DataOps vs DevOps?

While it’s hard to pinpoint the exact salary of DataOps engineers vs. DevOps engineers, DataOps engineers in the United States typically make an average income of about $100,000. This can vary depending on your exact location.

On the other hand, DevOps engineers in the United States typically earn an average salary of approximately $120,000, but that can be higher depending on exact location. These salaries can overlap depending on experience, location, and industry.

What Are The Four Phases of the DevOps Lifecycle?

A standard DevOps lifecycle contains four phases: planning, development, delivery, and operation.

1. Planning

This is the ideation phase: tasks are created and added to a backlog based on priority, and multiple products lead to multiple backlogs. The waterfall approach does not work well with DevOps tasks, so agile methodologies like Scrum or Kanban are used instead.

2. Develop

This phase consists of writing code, unit testing, reviewing, and integrating code with the existing system. Once the code is developed, it is prepared for deployment into various environments. DevOps teams automate mundane, manual steps and gain stability and confidence by shipping small, incremental changes. This is where continuous integration and continuous deployment come in.
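The continuous integration idea can be sketched as a gate. It runs a small unit-test suite and lets a change through only if every test passes. This is a minimal illustration, not a real CI configuration. The `apply_discount` function and the `ci_gate` helper are hypothetical. In practice, a CI service would run the tests automatically on every commit.

```python
import io
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(100.0, 20), 80.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


def ci_gate() -> bool:
    """Hypothetical gate: run the suite and report whether the change may be integrated."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    # Send runner output to a buffer; only the pass/fail verdict matters here.
    result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    print("merge allowed" if ci_gate() else "merge blocked")
```

Keeping each change small means a failing gate points to a narrow set of edits, which is the "incrementing in a small manner" the paragraph describes.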

3. Delivery

In this phase, the code is deployed to an appropriate environment, such as prod, pre-prod, or staging. Regardless of the target, the code is deployed in a consistent and reliable way. Git-based workflows and CI/CD tooling have made it easy to deploy code to almost all popular servers with just a few commands.
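One way to picture "consistent and reliable" delivery is to promote the same versioned artifact through each environment in order. The same smoke check runs at every stop, and the rollout halts at the first failure. The environment names come from the paragraph above. The `deploy` and `smoke_check` functions are hypothetical stand-ins for real deployment tooling, so treat this as a sketch of the idea only.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Promotion order, using the environments named in the text.
ENVIRONMENTS = ["staging", "pre-prod", "prod"]


@dataclass
class Rollout:
    version: str
    verified: List[str] = field(default_factory=list)  # environments that passed the check
    halted_at: Optional[str] = None  # first environment whose check failed


def deploy(env: str, version: str) -> None:
    """Hypothetical deploy step; a real one would call your deployment tooling."""
    print(f"deploying {version} to {env}")


def promote(version: str, smoke_check: Callable[[str, str], bool]) -> Rollout:
    """Deploy the same artifact to each environment in order, halting on a failed check."""
    rollout = Rollout(version)
    for env in ENVIRONMENTS:
        deploy(env, version)
        if not smoke_check(env, version):
            rollout.halted_at = env
            break
        rollout.verified.append(env)
    return rollout


if __name__ == "__main__":
    result = promote("v1.4.2", smoke_check=lambda env, version: True)
    print(result.verified)  # ['staging', 'pre-prod', 'prod']
```

Because every environment goes through the same `deploy` call, a problem caught in staging or pre-prod never reaches prod.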

4. Operate

This phase involves monitoring, maintaining, and fixing applications in production. It is where downtime is actually spotted and reported. In the operational phase, DevOps teams use monitoring and alerting tools like PagerDuty to identify issues before their customers find out about them.
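Below is a simplified operational alerting rule. It records the result of each periodic health check and computes availability over the window. It pages on-call only after several consecutive failures, so a single blip does not wake anyone up. The threshold of three failures is an assumption. `page_on_call` is a placeholder for whatever incident tool a team uses, and no real PagerDuty API is called here.

```python
from typing import Callable, List


def availability(checks: List[bool]) -> float:
    """Fraction of health checks that succeeded (True means healthy)."""
    return sum(checks) / len(checks) if checks else 1.0


def should_page(checks: List[bool], consecutive_failures: int = 3) -> bool:
    """Page only when the most recent `consecutive_failures` checks all failed."""
    recent = checks[-consecutive_failures:]
    return len(recent) == consecutive_failures and not any(recent)


def evaluate(checks: List[bool], page_on_call: Callable[[str], None]) -> None:
    """Call the (hypothetical) paging hook if the alert rule fires."""
    if should_page(checks):
        page_on_call(f"service down; availability {availability(checks):.1%}")


if __name__ == "__main__":
    history = [True] * 7 + [False] * 3
    evaluate(history, page_on_call=print)  # prints: service down; availability 70.0%
```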

What Does a DataOps Engineer Do?

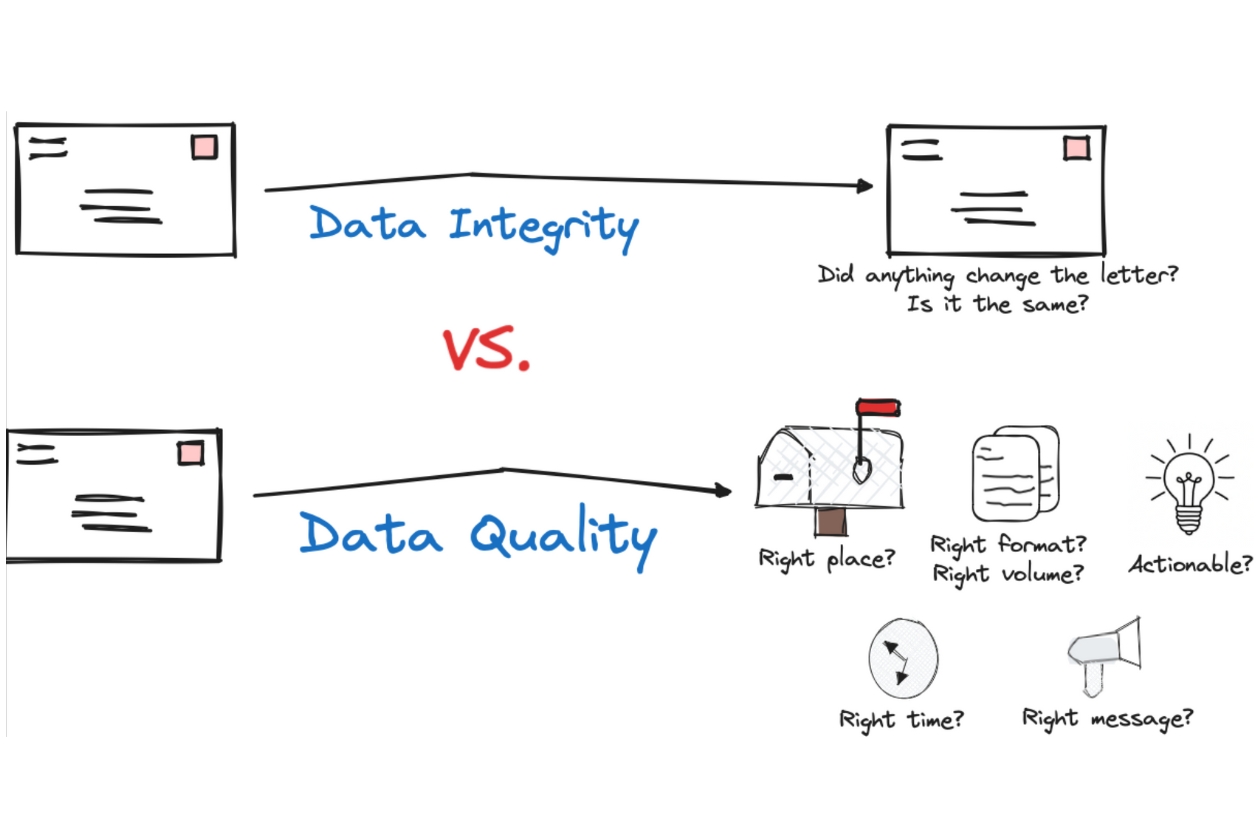

A DataOps engineer works tirelessly to remove silos in order to increase the reliability of data and this, in turn, breeds confidence and trust in the data.

A DataOps engineer makes sure that records of events, their representation, and their lineage are maintained at all times. The main goal of the DataOps engineer is to reduce the negative impact of data downtime, prevent errors from going undetected for days, and gain insight into the data from a holistic standpoint.

The DataOps lifecycle takes inspiration from the DevOps lifecycle, but incorporates different technologies and processes given the ever-changing nature of data.

What Does a DataOps Lifecycle Look Like?

A DataOps cycle has eight stages: planning, development, integration, testing, release, deployment, operation, and monitoring. A DataOps engineer must be well versed in all of these stages in order to have a seamless DataOps infrastructure.

1. Planning

Partnering with product, engineering, and business teams to set KPIs, SLAs, and SLIs for the quality and availability of data.
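To make the SLI and SLA vocabulary concrete, here is a minimal sketch of a data freshness indicator. It measures the share of daily loads that landed before an agreed deadline and compares that share against a target an SLA might codify. The 06:00 deadline and the 95% target are hypothetical numbers a team might settle on during planning, not recommendations.

```python
from datetime import datetime, time
from typing import List

LOAD_DEADLINE = time(6, 0)  # hypothetical: data must land by 06:00
SLA_TARGET = 0.95           # hypothetical: at least 95% of loads on time


def freshness_sli(load_times: List[datetime], deadline: time = LOAD_DEADLINE) -> float:
    """Share of daily loads that completed at or before the deadline."""
    if not load_times:
        return 0.0  # no loads at all counts as not fresh
    on_time = sum(1 for t in load_times if t.time() <= deadline)
    return on_time / len(load_times)


def meets_target(load_times: List[datetime], target: float = SLA_TARGET) -> bool:
    """True if the freshness SLI is at or above the agreed target."""
    return freshness_sli(load_times) >= target


if __name__ == "__main__":
    # 19 on-time loads followed by one late load: 19 / 20 = 0.95.
    loads = [datetime(2024, 1, d, 5, 30) for d in range(1, 20)] + [datetime(2024, 1, 20, 7, 15)]
    print(freshness_sli(loads))  # 0.95
    print(meets_target(loads))   # True
```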

2. Development

Building the data products and machine learning models that will power your data application.

3. Integration

Integrating the code and/or data product within your existing tech and/or data stack. For example, you might integrate a dbt model with Airflow so the dbt model runs automatically.

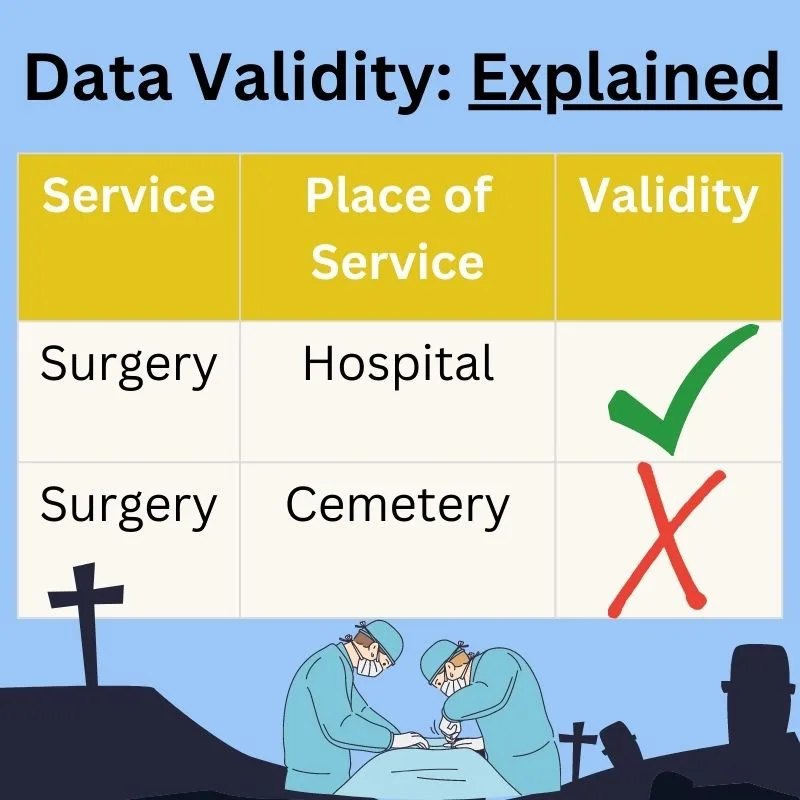
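The idea of wiring a dbt model into an orchestrator can be sketched without Airflow itself. You declare which step depends on which, then run the steps in dependency order. In Airflow, each step would typically be its own task, for example a `BashOperator` that calls `dbt run`. Here, plain Python functions and the standard library's `graphlib` stand in for the orchestrator. The task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

log: List[str] = []  # records the order in which tasks actually ran


def extract_orders() -> None:
    log.append("extract_orders")  # placeholder for loading raw data


def dbt_run() -> None:
    log.append("dbt_run")  # placeholder for invoking `dbt run`


def dbt_test() -> None:
    log.append("dbt_test")  # placeholder for invoking `dbt test`


TASKS: Dict[str, Callable[[], None]] = {
    "extract_orders": extract_orders,
    "dbt_run": dbt_run,
    "dbt_test": dbt_test,
}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES: Dict[str, Set[str]] = {
    "dbt_run": {"extract_orders"},
    "dbt_test": {"dbt_run"},
}


def run_pipeline() -> List[str]:
    """Run every task after its upstream dependencies and return the run order."""
    order = list(TopologicalSorter(DEPENDENCIES).static_order())
    for name in order:
        TASKS[name]()
    return order


if __name__ == "__main__":
    print(run_pipeline())  # ['extract_orders', 'dbt_run', 'dbt_test']
```

Declaring dependencies rather than hard-coding a sequence is what lets an orchestrator rerun or schedule the dbt step automatically whenever fresh data arrives.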

4. Testing

Testing your data to make sure it matches business logic and meets basic operational thresholds (such as unique values or no null values in key columns).
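The uniqueness and not-null checks mentioned above are simple to express directly. This sketch runs them over rows represented as Python dictionaries. dbt ships equivalent generic `unique` and `not_null` tests, but the function names and sample data here are illustrative only.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def check_unique(rows: List[Row], column: str) -> List[str]:
    """Report each value of `column` that appears more than once."""
    seen, dupes = set(), set()
    for row in rows:
        value = row.get(column)
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return [f"{column}: duplicate value {v!r}" for v in sorted(dupes, key=repr)]


def check_not_null(rows: List[Row], column: str) -> List[str]:
    """Report rows where `column` is missing or None."""
    return [f"{column}: null in row {i}" for i, row in enumerate(rows) if row.get(column) is None]


if __name__ == "__main__":
    orders = [
        {"order_id": 1, "customer_id": "a"},
        {"order_id": 2, "customer_id": None},
        {"order_id": 2, "customer_id": "c"},
    ]
    failures = check_unique(orders, "order_id") + check_not_null(orders, "customer_id")
    print(failures)  # ['order_id: duplicate value 2', 'customer_id: null in row 1']
```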

5. Release

Releasing your data into a test environment.

6. Deployment

Merging your data into production.
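Release and deployment are often combined in a write-audit-publish pattern. New data lands in a staging table, gets tested there, and only then replaces the contents of the production table. Below is a minimal sketch using SQLite from the Python standard library. The table names, columns, and audit rules are assumptions chosen for illustration.

```python
import sqlite3
from typing import List, Tuple


def publish(conn: sqlite3.Connection, rows: List[Tuple[int, float]]) -> bool:
    """Write rows to staging, audit them, and promote to `orders` only if they pass.

    Assumes a production table `orders (order_id INTEGER, amount REAL)` already exists.
    """
    with conn:  # "release": land the data in a staging table
        conn.execute("DROP TABLE IF EXISTS orders_staging")
        conn.execute("CREATE TABLE orders_staging (order_id INTEGER, amount REAL)")
        conn.executemany("INSERT INTO orders_staging VALUES (?, ?)", rows)

    # Audit: count null IDs, negative amounts, and duplicate IDs in staging.
    bad = conn.execute(
        """
        SELECT
          (SELECT COUNT(*) FROM orders_staging WHERE order_id IS NULL OR amount < 0)
          + (SELECT COUNT(*) - COUNT(DISTINCT order_id) FROM orders_staging)
        """
    ).fetchone()[0]
    if bad:
        return False  # production table is left untouched

    with conn:  # "deploy": replace production contents in one transaction
        conn.execute("DELETE FROM orders")
        conn.execute("INSERT INTO orders SELECT * FROM orders_staging")
    return True


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
    print(publish(conn, [(1, 10.0), (2, 25.5)]))  # True
    print(publish(conn, [(3, 5.0), (3, -1.0)]))   # False: duplicate ID and negative amount
```

The second `with conn:` block makes the delete and insert commit together. Consumers of `orders` therefore see either the old data or the fully audited new data, never a half-loaded table.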

7. Operate

Serving your data to applications such as Looker or Tableau dashboards and to data loaders that feed machine learning models.

8. Monitor

Continuously monitoring and alerting for any anomalies in the data.
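Anomaly monitoring can start with something as simple as a z-score on daily row counts. You flag today's volume if it sits too many standard deviations from recent history. The three-standard-deviation threshold and the sample counts are assumptions. A simple rule like this does not handle seasonality or trends in the data.

```python
from statistics import mean, stdev
from typing import List


def is_volume_anomaly(history: List[int], today: int, threshold: float = 3.0) -> bool:
    """Flag `today` if it is more than `threshold` standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # perfectly flat history: any change is unusual
    return abs(today - mu) / sigma > threshold


if __name__ == "__main__":
    daily_rows = [1000, 1020, 980, 1010, 990]
    print(is_volume_anomaly(daily_rows, 400))   # True
    print(is_volume_anomaly(daily_rows, 1015))  # False
```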

What Is DataOps’ Role in AI?

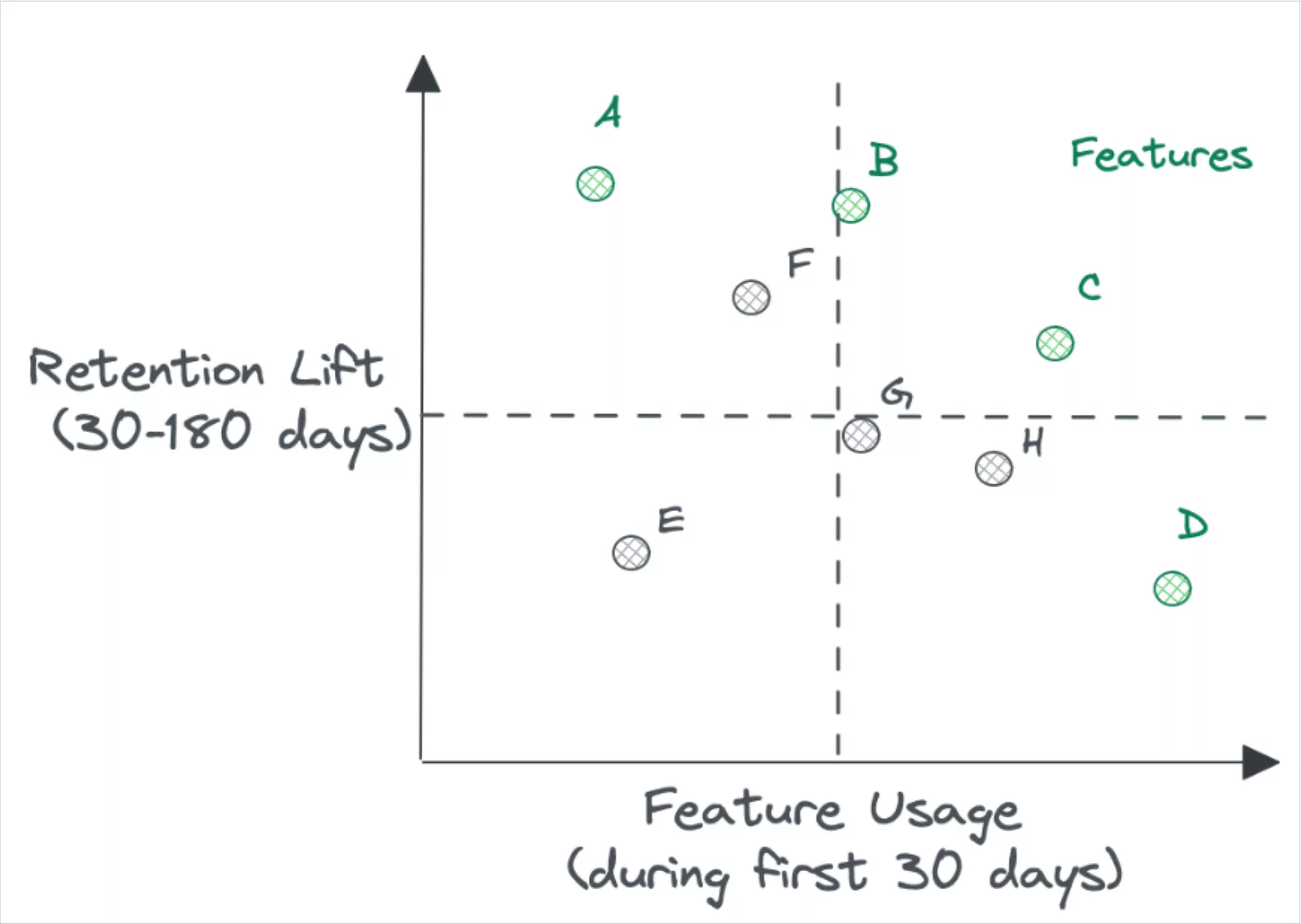

DataOps plays a crucial role in AI and ML development. Because DataOps focuses on streamlining and automating processes to collect, prepare, and deliver data for analytics, it is a key player in ensuring data is ready for AI.

DataOps also ensures that data is governed and secure, with processes to address privacy, compliance, and ethical data usage. Secure and private data is a key aspect of ethical and scalable AI technologies. DataOps also helps establish feedback loops for monitoring AI model performance, and DataOps engineers can adjust data pipelines as needed to avoid data downtime and unnecessary costs.

At the Heart of Both DevOps and DataOps is Observability

The common thread between DataOps and DevOps is observability: your ability to fully understand the health of your systems. While DevOps engineers leverage observability to prevent application downtime, DataOps engineers leverage data observability to prevent data downtime.

Just like DevOps in the early 2010s, DataOps will become increasingly critical this decade. Done correctly, data can be an organization’s crown jewel. Done incorrectly, you’ll find that with big data comes big headaches.

If you want to operationalize your data at scale, you need a data observability platform like Monte Carlo.

Monte Carlo was recently recognized as a DataOps leader by G2, and data engineers at Clearcover, Vimeo, and Fox rely on Monte Carlo to improve data reliability across data pipelines.

Data can break for millions of reasons, and the sooner you know—and fix it—the better.

Learn more about Monte Carlo by scheduling a time to speak with our team using the form below!
