Build vs Buy Data Pipeline Guide

In an evolving data landscape, the explosion of new tooling solutions—from cloud-based transforms to data observability—has made the question of “build versus buy” increasingly important for data leaders.

In Part 3 of our Build vs. Buy series, Nishith Agarwal, Head of Data & ML Platforms at Lyra Health and creator of Apache Hudi, draws on his experiences at Uber and Lyra Health to share how his 5 considerations—cost, complexity, expertise, time to value, and competitive advantage—impacts the decision to build vs buy another critical layer of your modern data stack: build vs buy data pipelines. Missed Nishith’s 5 considerations? Check out Part 1 of the build vs buy guide to catch up.

The deeper we go into the build vs buy debate, the more we begin to understand how varied the answers to that question can be—and concurrently, how important it is to get it right.

But answering the build versus buy question isn’t easy. As we saw in Part 2 of our series, the definition of “building” and “buying” can change based on what layer of the data stack we’re considering. Sometimes the build versus buy debate can include layers of its own, with decisions to buy often evolving into a decision to use open-source tooling to retain flexibility or leverage fully-managed solutions for faster time-to-value.

But it’s not just our data platform that impacts how we define the problem and its solution. What we need and what level of development flexibility we’ll tolerate in our platform components will also be informed by the maturity of both our organizations and our data platforms themselves. In the case of high growth startups for example, what could be a build one day may be a resounding “buy” the next.

In this article, we’ll dive deep into the data presentation layers of the data stack to consider how scale impacts our build versus buy decisions, and how we can thoughtfully apply our five considerations at various points in our platform’s maturity to find the right mix of components for our organizations unique business needs. To do this, we’ll consider four separate layers of the data platform that power our data pipelines:

- 5 build vs buy considerations

- Build vs buy data ingestion

- Build vs buy transformation

- Build vs buy orchestration

- Build vs buy business intelligence

Recapping the 5 build vs buy considerations

Before we can get into our build vs buy data pipeline discussion, let’s first quickly review our five considerations. While we won’t get into the minutia of every consideration for every level of the data stack, it’s important to recall these five considerations as they’ll nonetheless steer the direction of our conversation.

- Cost & resources—the total cost in both dollars and resources to either build or buy your data platform’s tooling

- Complexity & interoperability—the impact on current and future platform integrations of building or buying

- Expertise & recruiting—the expertise required to build and maintain a given platform layer and the impact it’s likely to have on recruiting

- Time to value—the total time to stand up and see value from a given solution plus the time required to update and maintain that tooling for new use cases

- Competitive advantage—the strategic advantages inherent in building custom tooling compared to the advantages of moving faster with a managed solution

Now that we’ve had a quick refresher, let’s jump into our build vs buy data pipelines discussion in a bit more detail.

Still need a little more refreshing before you move on? Check out Part 1 of our series for a comprehensive look at our five considerations framework.

Data ingestion

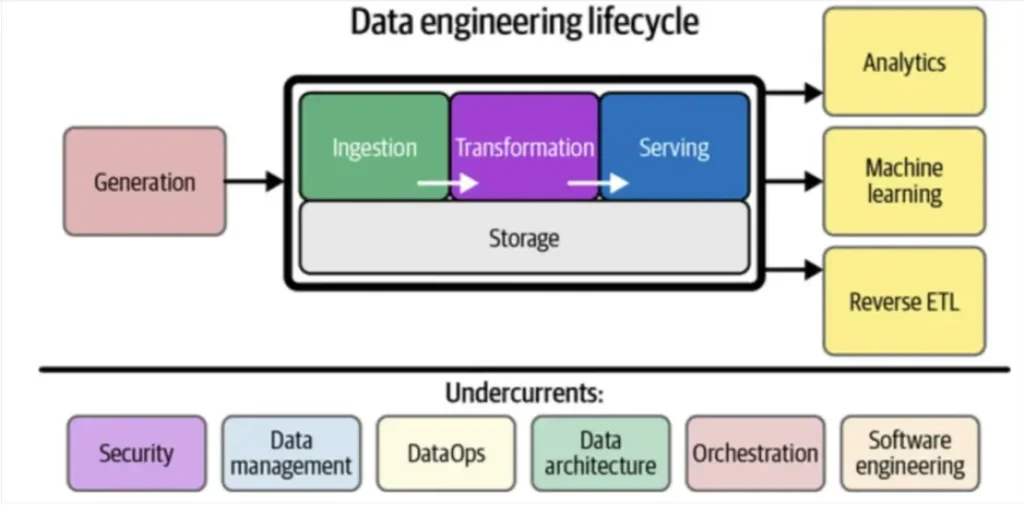

When we think about the flow of data in a pipeline, data ingestion is where the data first enters our platform. During data ingestion, raw data is extracted from sources and ferried to either a staging server for transformation or directly into the storage level of your data stack—usually in the form of a data warehouse or data lake.

There are two primary types of raw data. The first is referred to as “events” data, which consists of clickstream events, logs, and other user activity events; while the second type— known as “transactional” data—captures all the upstream services.

The ingestion process can be broadly categorized into 3 paradigms:

- Batch: import data in bulk from varied sources

- Streaming: ingest event style data

- Incremental (or mini batch): in between batch and streaming

This data ingestion process can be accomplished by either querying the source directly, using upstream systems to publish events, or some combination of the two. There are several out-of-the-box solutions like Fivetran, Airbyte, or Debezium that assist in implementing these approaches.

If streaming data is a priority for your platform, you might also choose to leverage a system like Confluent’s Apache Kafka along with some of the above mentioned technologies.

The ingestion process typically consists of several complementary actions:

- Authenticating source data

- Schema change management

- First-time snapshot or bootstrap

- Incremental data publishing

- Setting up data ingestion pipelines

Which you choose—and by extension what tooling you’ll consider—will depend on the goals and constraints of your data team.

Build vs buy data ingestion

Like every tool we have or will cover, whether to build or buy your ingestion tooling will depend entirely on your situation. The answer here really lies in what data sources you need and how much you’re willing to spend to get them.

If you’re a scaling team with a relatively simple ingestion strategy—relying primarily on common data sources like Salesforce—a solution like Fivetran’s “connectors” will probably make a lot of sense for your team. Fivetran is a managed SaaS solution that automates the entire ingestion process—from connection to maintenance—right out of the box with optimized integrations for the most popular data sources. That means less engineering time spent coding and maintaining pipelines—and less complexity down the road as you begin to invest in other layers of your data stack. All this adds up to repeatable and low-impact processes for connecting new data sources that will enable your data platform to scale in pace with your broader organization.

Having said all that, there are two major caveats. If you require custom ingestion sources or latency guarantees that are too specialized for a managed solution (like sensitive data use-cases) or you need to connect with sources and connectors that aren’t currently supported by vendors, this solution likely won’t be sufficient for your needs. Due to the different disparate moving technologies involved in ingestion, you may need some combination of Fivetran, Kafka, Spark, data lake formats and more. But in many growing organizations, a combination of Fivetran and custom pipelines using Airflow will usually do the trick.

The scale of data events depends entirely on the product. Products that generate machine data (like network devices) or products that more frequently engage users will quickly run into problems with scale. At Uber, for example, data ingestion scale quickly surpassed what out-of-the-box solutions could support. In the early days, Kafka was used to capture events data while a combination of Sqoop and MapReduce were used to ingest transactional data from upstream databases. But as the business grew, requirements for correctness, completeness, and latency evolved with it. Uber’s operations teams needed more real-time insights into what was happening in the cities. Data scientists and engineers alike were clamoring for reliable and consistent data that was free from underlying ingestion problems at scale. With a lack of open source solutions that met the requirements of Uber’s extreme scale, all data events and transactional flows were eventually routed through Kafka exclusively using an implementation similar to Debezium called DBEvents.

For most organizations today, the question becomes whether to leverage a managed open-source solution like Airbyte to speed time-to-value and optimize engineering resources; or retain more build control by building a customized solution in-house with open-source tooling. Supporting high quality datasets with strong guarantees for data completeness and latency requires an extremely robust data ingestion platform that becomes particularly complex at scale. Upstream data evolution breaks pipelines. Sudden event streams create backlogs and delays. These are persistent problems for data engineers at this scale.

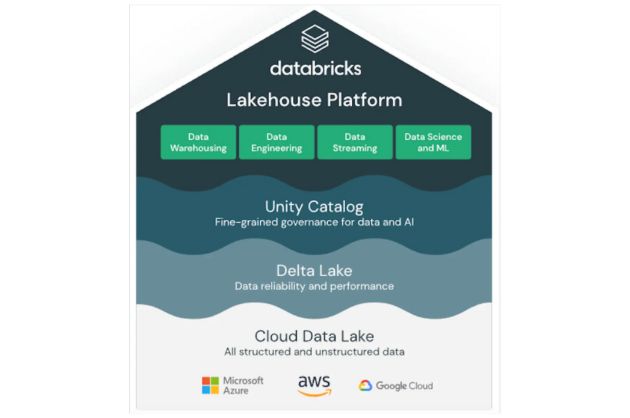

Thankfully, as the modern data landscape has evolved, data ingestion has evolved with it. Many of the companies backing open source solutions are well versed in not only the architecture of data ingestion but the challenges it brings with it—from data quality and data corruption to latency and completeness. And more companies are attempting to tackle this problem by the day, like Databricks with AutoLoader or Snowpipe from Snowflake. But while these are great products, and it’s certainly a step in the right direction, managing a data ingestion platform at scale continues to be a challenge for most data organizations.

When it comes to data ingestion, the important question is whether you actually have such real-time use-cases at your company. Do you really have the throughput and scale that would necessitate building an infrastructure of this magnitude? If the answer is yes, more than likely you’ll need to stand-up some form of data ingestion platform in-house. And there will be a clear competitive advantage and resource benefit for doing so.

But if you can survive on solutions like Fivetran and Airbyte without a noticeable degradation in performance, data quality, or your ability to meet your SLAs, it makes all the sense in the world to do so!

Data transformation

Once you’ve ingested your raw data, it needs to be transformed into something structured before it can be fed into your BI tooling and data products. Generally speaking, most transformations are performed using SQL, leveraging open source solutions like dbt Core, or utilizing a managed service like dbt Cloud.

Build vs buy data transformation

When it comes to transformations, there’s no denying that dbt has become the de facto solution across the industry. Its open source solution is robust out-of-the-box, and it offers sufficient features to meet the transformation needs of most data teams.

Investing in something like dbt makes creating and managing complex models faster and more reliable for expanding engineering and practitioner teams because dbt relies on modular SQL and engineering best practices to make data transforms more accessible.

Unlike manual SQL writing which is generally limited to data engineers, dbt’s modular SQL makes it possible for anyone familiar with SQL to create their own data transformation jobs. This means faster time to value, reduced engineering time, and, in some cases, a reduced demand on expertise to drive your platform forward. This is increasingly important if you’re considering implementing a self-service framework like a data mesh for your organization’s data operations.

Again, the notable exception here being large companies like Netflix or Uber. These enterprise-level data companies possess both the functional expertise and institutional knowledge to create specialized tools in-house, and stand to gain a substantial strategic advantage from investing the time to do so. At Uber, we built a platform similar to dbt that managed 1000’s of modeled tables. And for the scale of our platform and the needs of our organization, that made great business sense.

But if that’s not you, investing your time into standing up a dbt instance is going to be the most obvious approach to optimizing your transforms. What’s more, dbt offers both open source and managed solutions for teams depending on their needs and experience.

So, let’s consider the two primary dbt solutions: dbt Core and dbt Cloud.

When should you use dbt Core?

dbt Core is a free open source solution that gives team’s access to the dbt platform using command prompts. While it doesn’t offer complete parity with dbt’s fully managed solution, it’s regularly maintained and provides most of the same benefits as it’s premium counterpart, including:

- Building reusable data models that automatically pull model changes to dependencies

- Publishing canonical models

- Branching, pull requests, and code reviews.

- Basic data quality tests to quickly monitor for anomalies

- Usable data catalog and lineage infrastructure

Because it’s entirely open source, dbt Core also offers greater flexibility, allowing data leaders to fully-manage how they develop and deploy their dbt instance. dbt Core is great for teams with limited budgets and those desiring greater flexibility above and beyond what a managed solution would allow. In the context of our framework, that means lower cost and higher opportunity to build competitive advantage through custom tooling, but at the cost of time-to-value, complexity, and the need for more dedicated data engineering expertise.

When should you invest in dbt Cloud?

While dbt Cloud isn’t free, it does provide more out of the box features that make investing worthwhile for certain teams.

Unlike dbt Core which relies on a command line interface, dbt Cloud utilizes a browser-based integrated development environment (IDE) to develop, test, schedule, and investigate data models. This allows for faster speeds and simpler development for users with limited proficiency in a CLI.

In addition to a streamlined cloud environment and improved run speeds, dbt Cloud also offers a suite of proprietary tools and features that aren’t available within the open source tool, including job scheduling, job logging, monitoring and alerting, documentation, version-control integration, and role-based access controls.

While dbt Cloud might be overkill for some teams, it’s excellent for organizations hoping to implement a data mesh or empower analytics teams to self-serve their data models without relying solely on data engineering resources which can often create bottlenecks and drive down ROI.

dbt also offers improved time-to-value because teams will be able to onboard and produce much faster with less specialized expertise and fewer manual processes to control.

In the context of our considerations, that means the fastest time to value with the least complexity and the lowest expertise requirements, but at the cost of both budget and flexibility.

If you’re looking for efficiency across teams, like to run a lean team, or you need to manage a large dbt deployment, dbt Cloud by fishtown analytics is going to be the way to go.

Data orchestration

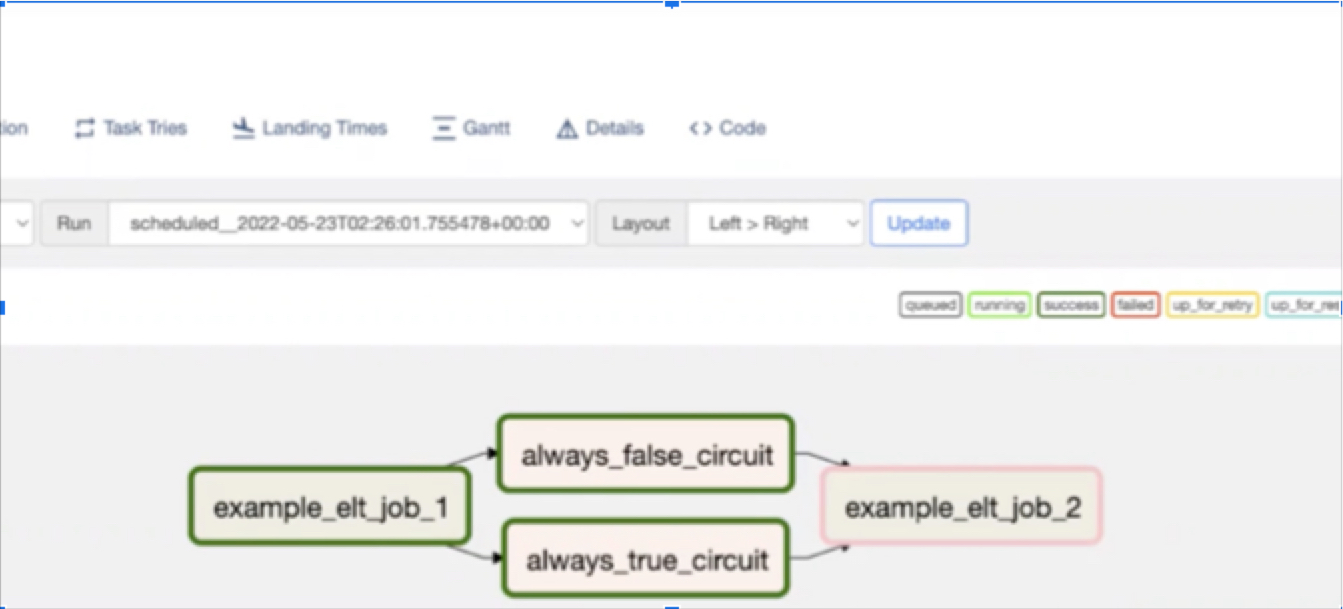

When you’re extracting and processing data, the order of operation matters. As your pipeline needs to evolve with new data sources and use cases, scheduling becomes critical to successfully managing your data ingestion and transformation jobs. That’s where orchestration comes into play.

Data orchestration brings automation to the process of moving data from source to storage. In the broadest terms, data orchestration is the configuration of multiple tasks into a single end-to-end process. Data orchestration triggers when and how critical jobs will be activated to ensure data flows predictably through complex data platforms at the right time, in the right sequence, and at the appropriate velocity to maintain production standards.

Some of the most popular solutions for data orchestration include Apache Airflow, Dagster, and relative newcomer Prefect. Many of these popular tools are open source and many managed solutions exist for each of them. Every cloud provider also has a vertically integrated orchestrator for organizations that exclusively and heavily use solutions from cloud providers.

Build vs buy orchestration tooling

Unlike the other components we’ve discussed in Part 3, data pipelines don’t require orchestration to be considered functional—at least not at a foundational level. And data orchestration tools are generally easy to stand-up for initial use-cases. However, once data platforms scale beyond a certain point, managing jobs will quickly become unwieldy by in-house standards.

Some of the challenges you’ll likely face with your critical pipelines—and the transformations running on them—include:

- ZERO downtime upgrades

- Guaranteeing SLAs for data pipelines

- Fault tolerances

- And infrastructure upgrades

In the early days at Uber, we invested in technologies such as Oozie to manage many of our map-reduce ingestion jobs with cron jobs managing our ETL. While this worked well for a time, the data infrastructure team quickly found itself needing to support hundreds of thousands of pipelines across the company. Airflow was an upcoming project at the time that supported most of the use-cases we needed. But to integrate Airflow into Uber’s data infrastructure stack at this level of scale, the project had to be forked and a multiplicity of functionalities added to support the existing customer deployment patterns and use-cases within the company.

As you can imagine, building and supporting an infrastructure of that magnitude (or building the car while we’re driving it) was no small feat. It required a complete data workflow and an orchestration team that’s frankly not feasible for most organizations.

Thankfully, most companies today will have the option to buy managed solutions before they reach this level of scale. At Lyra Health, our journey has evolved from our teams maintaining the orchestration infrastructure in-house to using managed solutions that allow us to overcome the above mentioned challenges fairly efficiently. Looking back, the catalyzing event was the promise we made to our customers. We made the decision to utilize a managed solution to enable us to scale faster, reduce infrastructure maintenance, and provide the kind of fault tolerance that would deliver the high quality data our customers expected.

Additionally, with a managed solution, you can avail customer support to investigate, discover, and resolve bugs that are bound to arise with these open source solutions.

So, if you’re just beginning your platform journey, standing up your orchestration in-house is a good first step—assuming you have the skills and resources to do it. But as you begin providing SLAs to your customers, be ready to make the switch to a managed solution sooner than later. Your data team and your customers will thank you.

Business intelligence

Business intelligence is the aggregated, standardized, and graphical representation of data sets that’s leveraged by your downstream users to understand and activate data for business use cases—from insights and decision-making to powering digital products, monitoring and reporting, and driving innovation across the organization.

When should you invest in business intelligence?

While business intelligence tools provide a varied set of functionality, the most prominently used are data visualizations. Data consumers need a way to quickly make sense of the data and visualizations are easy to consume, easy to share, and easy to defend. As a result, most businesses large and small alike will invest in BI tooling early in their data platform journey.

So, how does scale impact our business intelligence tooling? As our downstream consumers grow, their data visualization needs will grow in parallel. As data leaders, we need solutions that can efficiently support those needs as they increase without materially increasing the burden on our engineering resources.

Build vs buy business intelligence tooling

A good business intelligence tool will allow consumers without a SQL background to easily understand and manipulate the data for insights and storytelling. And as you can imagine, that’s no easy feat.

During my time at Uber, we took a hybrid approach to BI tooling. Some of the components of our intelligence tooling were built in-house for specific use-cases, while others leveraged out-of-the-box solutions.

Like warehouse and data lake development, BI solutions require very specific skill sets to build and maintain. And even with the right skill sets, it’s extremely difficult to build a BI solution entirely in-house that could reach feature parity with the advanced plug-and-play BI solutions already on the market such as Tableau, PowerBI, Looker, and Data Studio.

Of course, as I’ve often said, it all depends on your team’s expertise and how much you’re willing to invest into your BI solution. There could certainly be value in building aspects of your BI platform or data products to meet very specific use-cases using open source solutions such as Apache Superset. But for resource cost, staffing, and time-to-value, it’s safe to say that leveraging out-of-the-box solutions is a pretty tried and proven approach to investing in your BI tooling.

Scale drives data platform development. Your goals drive the decisions.

Whether we’re at the storage layer or orchestration, pain is always the catalyst for platform development. A gap in our analytics. Frequent data quality issues. An overextended data team.

Each time your organization clears a new benchmark in scale, the build versus buy debate continues. What works one day doesn’t work on day 1,000. And what works on day 1,000 will be a bottleneck at IPO.

But as we saw in the build vs buy data pipelines debate, scale doesn’t just drive the timing of our build versus buy debate—it defines the context of our build versus buy debate as well. Are we small and cost-conscious? Are we scaling quickly and moving fast? Are we large and well resourced? Where we find our organization—and our platform—on the maturity curve will color the way we view the build versus buy debate.

Like the work of a good data engineer, the toil of the build versus buy debate is never done. But no matter what layer of the stack we consider, if we understand the needs of our stakeholders—and the five considerations we’ve outlined in this series—we’ll be ready with whatever complexity the build versus buy debate can throw at us.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage