How Snowflake Data Quality Monitoring Helps Monte Carlo Customers Deliver More Reliable Data Workloads

Raise your hand (or nod your head in solidarity at your desk) if your CEO has ever told you that the numbers in a critical dashboard are “way off.”

Has a customer ever called out errors in product dashboards?

What about a spike in data volume for an important financial model that went unnoticed for weeks – eventually costing your company millions of dollars – because there wasn’t a noticeable change in your KPIs?

As data volumes grow, pipelines become increasingly complex, and data product use cases diversify, it’s critical that this data is accurate and reliable. In fact, in Wakefield’s 2023 State of Data Products Survey, more than 200 data leaders cited lack of data quality as the number one roadblock to data product adoption. According to our 2022 State of Data Quality survey, data teams spend upwards of two days per week firefighting bad data.

Lack of data reliability can have serious repercussions on the business, including wasted time and resources, customer churn, reputational risk, and even financial loss.

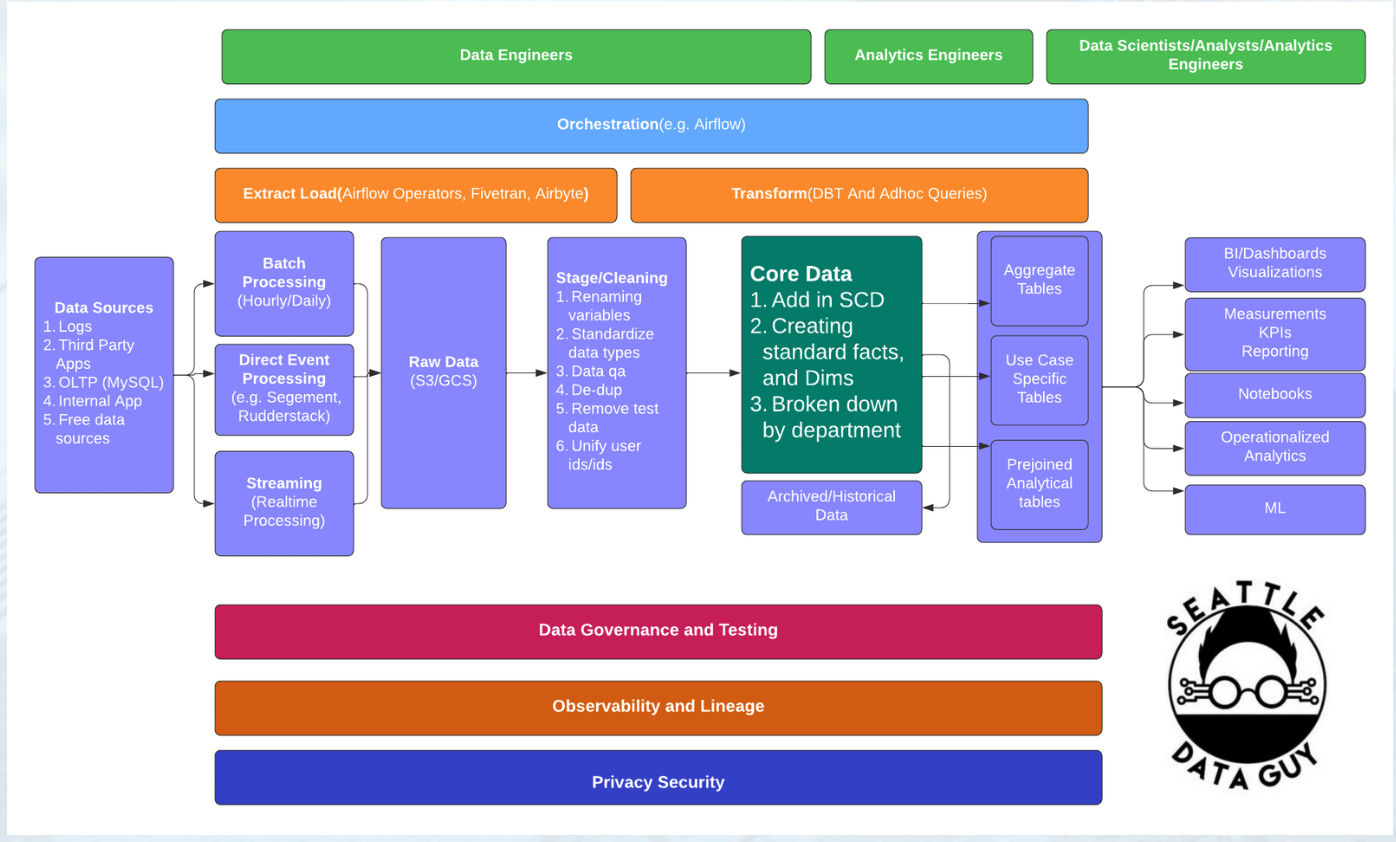

The good news? Today at Snowflake Summit, Snowflake announced Data Quality Monitoring, a new feature that empowers data teams to define, measure, and monitor data quality metrics in the Data Cloud. This new functionality lets Snowflake customers build and maintain a centralized library of data quality checks that can be applied to many assets at once, so teams no longer have to piece together ad hoc checks for each pipeline.

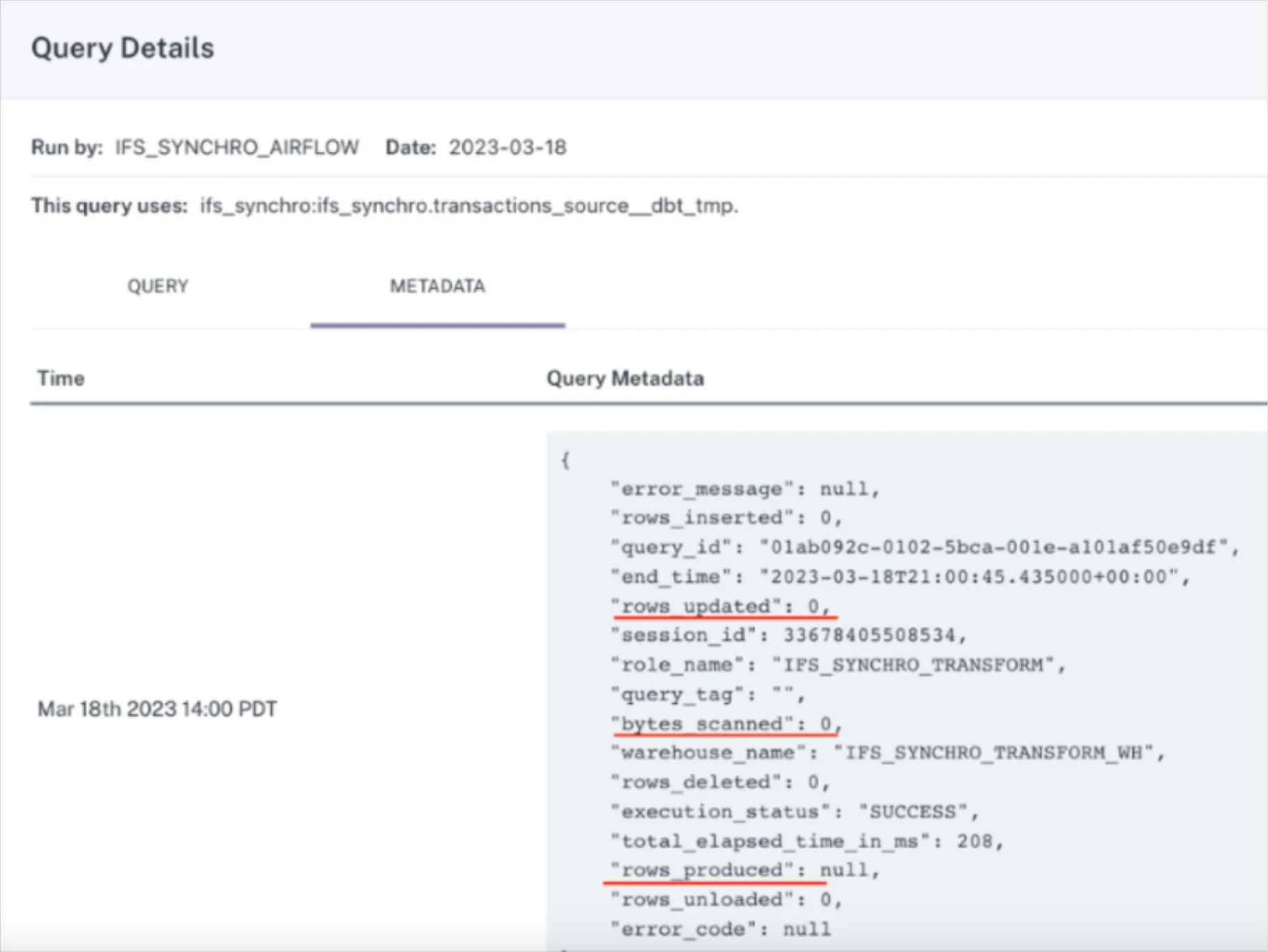

Among other capabilities, Snowflake Data Quality Monitoring gives teams out-of-the-box metrics, automated periodic measurement of the metrics they define for their critical data, and the ability to monitor the measurement output, all within the Data Cloud. Data teams on Snowflake can now associate specific data quality metrics with a table or view, making it easy to monitor those metrics across multiple tables and columns at once.
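To make the idea of a centralized check library more concrete, here is a minimal Python sketch. It assumes nothing about Snowflake's actual interface: tables are modeled as in-memory lists of rows, and the names `METRIC_LIBRARY`, `Association`, `measure`, and `breaches` are hypothetical. It shows the general pattern of defining each metric once, associating it with several tables or columns, measuring everything in one pass, and flagging measurements that cross a threshold.

```python
"""Hypothetical sketch of a centralized data quality metric library.

Not Snowflake's Data Quality Monitoring API; tables are in-memory lists of rows.
"""
from dataclasses import dataclass
from typing import Callable, Optional

Row = dict[str, object]
Table = list[Row]

# Hypothetical metric signature: takes a table and an optional column name.
MetricFn = Callable[[Table, Optional[str]], float]


def row_count(table: Table, column: Optional[str] = None) -> float:
    """Number of rows in the table (the column argument is ignored)."""
    return float(len(table))


def null_count(table: Table, column: Optional[str] = None) -> float:
    """Number of rows where `column` is missing or None."""
    return float(sum(1 for row in table if row.get(column) is None))


# The shared library: each check is defined once and reused everywhere.
METRIC_LIBRARY: dict[str, MetricFn] = {
    "row_count": row_count,
    "null_count": null_count,
}


@dataclass
class Association:
    """Binds a library metric to a table and, optionally, one of its columns."""
    table_name: str
    metric_name: str
    column: Optional[str] = None


def measure(tables: dict[str, Table],
            associations: list[Association]) -> dict[tuple, float]:
    """Run every associated metric once (e.g. on a periodic schedule)."""
    results: dict[tuple, float] = {}
    for assoc in associations:
        metric = METRIC_LIBRARY[assoc.metric_name]
        value = metric(tables[assoc.table_name], assoc.column)
        results[(assoc.table_name, assoc.metric_name, assoc.column)] = value
    return results


def breaches(results: dict[tuple, float],
             max_allowed: dict[str, float]) -> dict[tuple, float]:
    """Return the measurements that exceed a per-metric upper bound."""
    return {key: value for key, value in results.items()
            if key[1] in max_allowed and value > max_allowed[key[1]]}


# Usage: one library, applied to two tables at once.
tables = {
    "orders": [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}],
    "customers": [{"id": 1, "email": "a@example.com"}],
}
associations = [
    Association("orders", "row_count"),
    Association("orders", "null_count", "amount"),
    Association("customers", "null_count", "email"),
]
results = measure(tables, associations)
print(breaches(results, {"null_count": 0}))
```

Keeping the metric definitions in one shared dictionary, separate from the table-to-metric associations, is what lets a single check be reused across many assets instead of being rewritten per pipeline.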

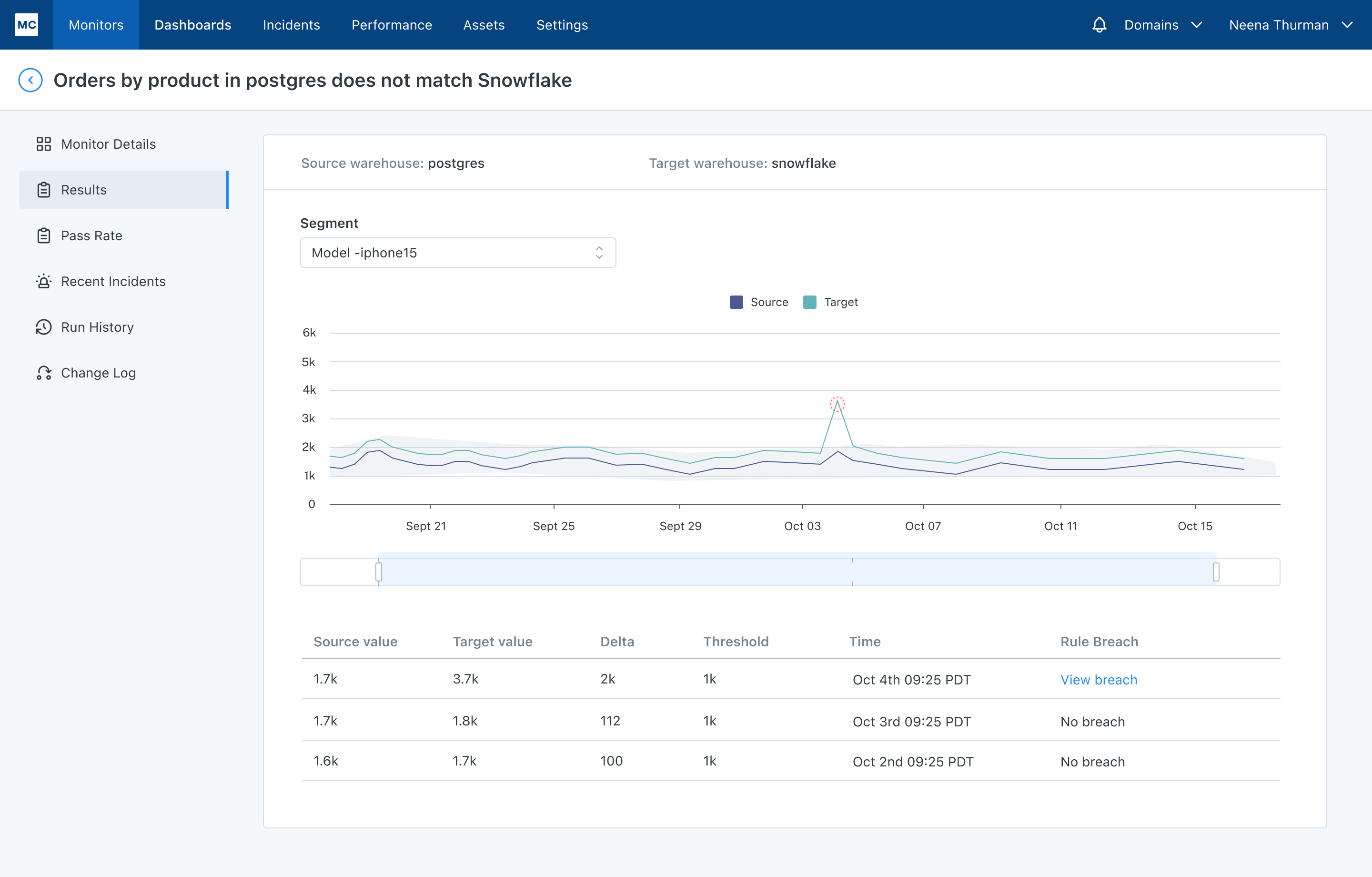

This release brings much-needed visibility to the serious problem of data downtime (periods of time when data is missing, inaccurate, or otherwise erroneous), providing greater insight into the health of your data as well as tools to improve it. Snowflake Data Quality Monitoring will also help data observability providers like Monte Carlo optimize the performance of data quality management across Data Cloud environments.

Additionally, by building data quality management features into its native service, Snowflake is signaling the importance of data reliability for its customers. As the leader in data observability, we are excited to partner with Snowflake to elevate this conversation and pioneer a future where data is accurate, reliable, and trustworthy across the data lifecycle through automated, end-to-end data quality monitoring, alerting, and resolution.

Attending Snowflake Summit? Stop by booth #1740 to learn more about how Monte Carlo and Snowflake are partnering to help teams eliminate data downtime and improve data trust.

Interested in learning how Monte Carlo and Snowflake drive data reliability at scale? Request a demo of our joint solution.

