Data Integrity vs. Data Validity: Key Differences with a Zoo Analogy

Imagine a zoo data quality analyst entering data on the heights of different animals and they accidentally swap the data for giraffes and penguins.

The data has integrity because the information is all there. The heights for both giraffes and penguins have been entered, and there’s no missing or corrupt data.

However, the data is not valid because the height information is incorrect – penguins have the height data for giraffes, and vice versa. The data doesn’t accurately represent the real heights of the animals, so it lacks validity.

The key differences are that data integrity refers to having complete and consistent data, while data validity refers to correctness and real-world meaning – validity requires integrity but integrity alone does not guarantee validity.

Let’s dive deeper into these two crucial concepts, both essential for maintaining high-quality data. We’ll explore their definitions, purposes, and methods so you can ensure both data integrity and data validity in your organization.

What is Data Integrity?

Data integrity is the process of maintaining the consistency, accuracy, and trustworthiness of data throughout its lifecycle, including storage, retrieval, and usage. Integrity is crucial for meeting regulatory requirements, maintaining user confidence, and preventing data breaches or loss.

Besides the zoo example, some other examples of data integrity include ensuring that data is not accidentally or maliciously altered, preventing unauthorized access to sensitive information, and maintaining the consistency of data across multiple databases or systems.

How Do You Maintain Data Integrity?

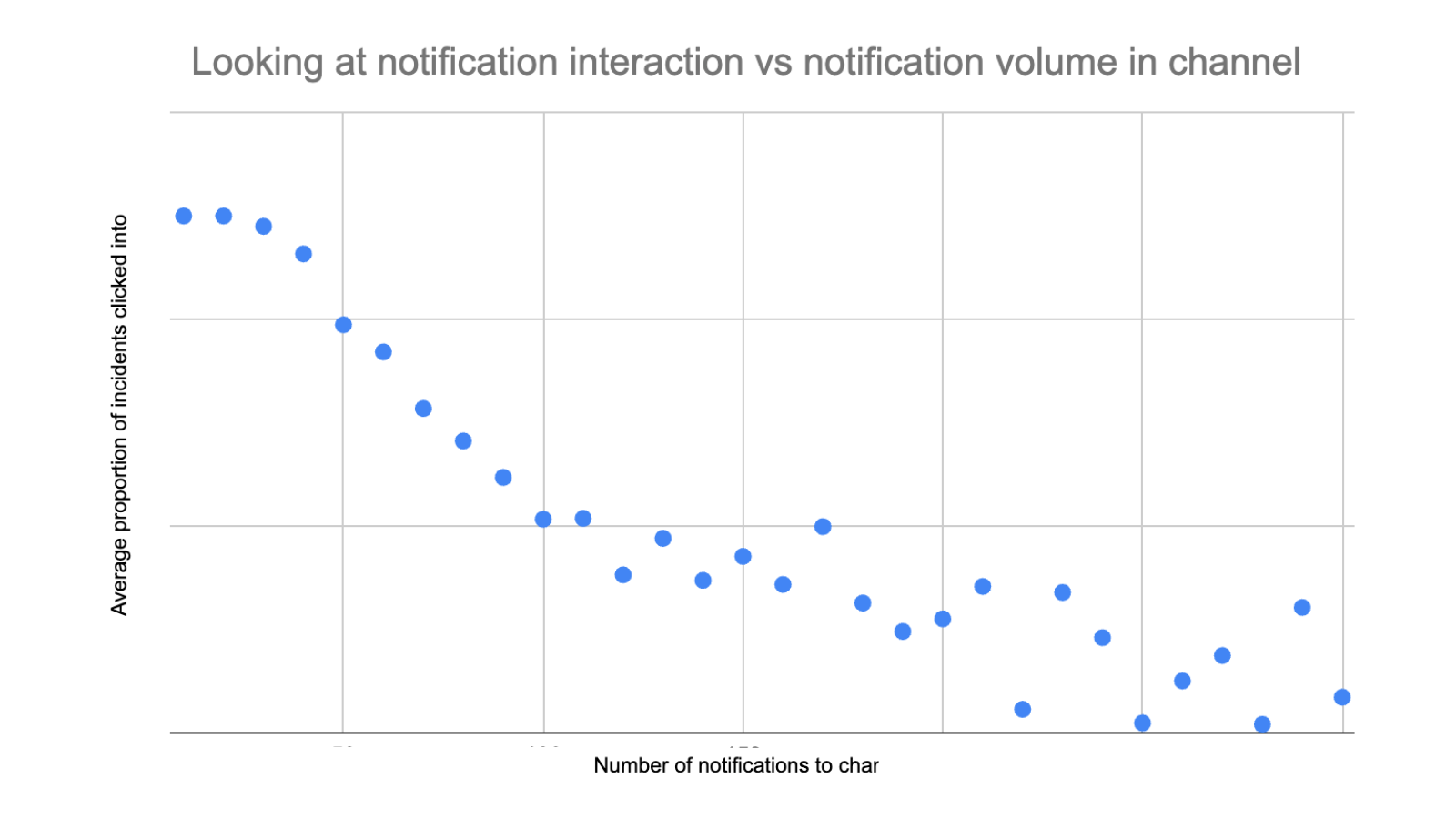

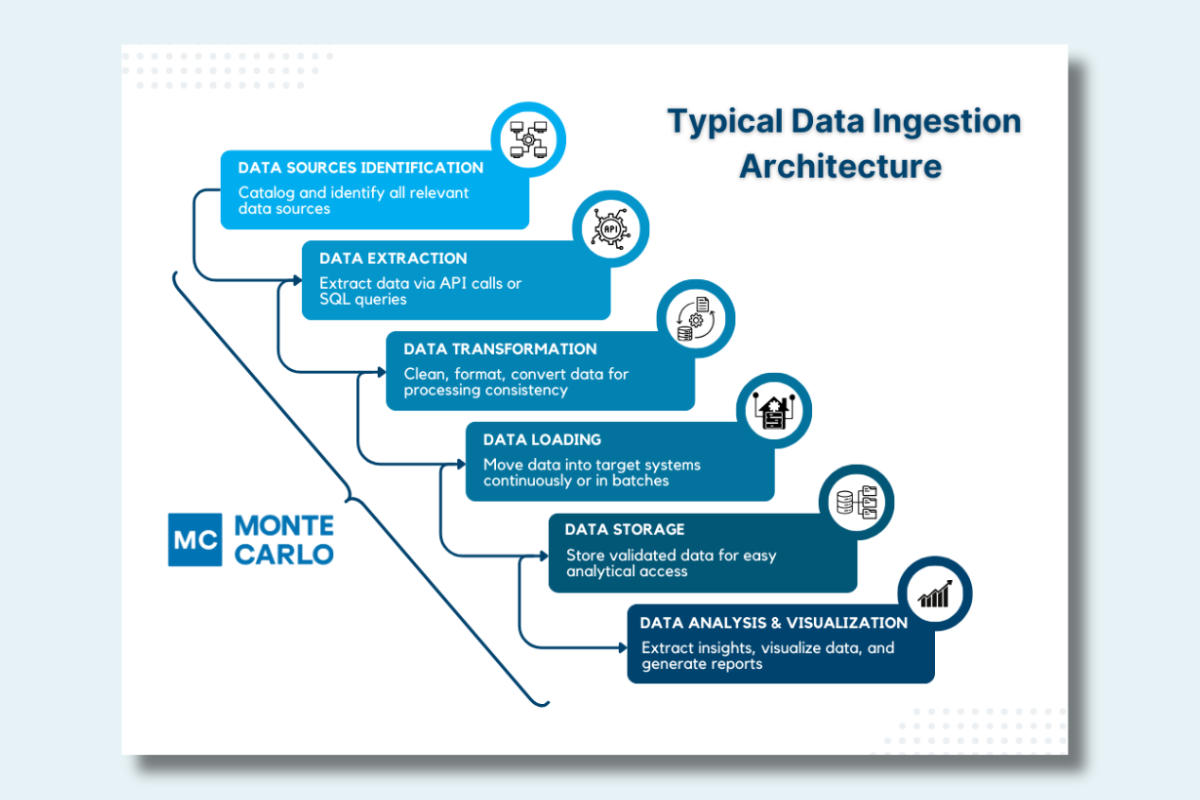

Data integrity issues can arise at multiple points across the data pipeline. We often refer to these issues as data freshness or stale data. For example:

- The source system could provide corrupt data or rows with excessive NULLs.

- A poorly coded data pipeline could introduce an error during the data ingestion phase as the data is being clean or normalized.

- A failed Apache Airflow job or dbt model error could prevent data from continuing to flow to downstream tables.

- Empty queries could create data integrity issues.

Traditional methods to maintain data integrity include referential integrity, data consistency checks, and data backups and recovery. The most effective way to maintain data integrity is to monitor the integrity of the data pipeline and leverage data quality monitoring.

Learn more in our blog post 9 Best Practices To Maintain Data Integrity.

What Is Data Validity?

Data validity refers to the correctness and relevance of data. It ensures that the data entered into a system is accurate, meaningful, and follows predefined rules or constraints. Data validity is vital for making well-informed decisions and preventing errors based on incorrect data.

Examples of data validity include verifying that email addresses follow a standard format, ensuring that numerical data falls within a certain range, and checking that mandatory fields are filled out in a form.

How Do You Maintain Data Validity?

In some cases, data validity incidents are easily detected when reviewing a dataset. A column depicting shipment time should never be negative for example.

However in most cases, data validity can be difficult for data engineers, analytical engineers, data scientists and other data professionals to assess.

While anyone can notice if data is missing and data integrity has been breached, typically only those that regularly work with a data set will understand the nuances of a particular field or the relationships between multiple columns.

For example, a data analyst working within a specific domain may have the context necessary to notice an anomaly in the range of values between fields depicting the price of a transaction and the country in which it took place.

Data validity issues happen for many of the same reasons that data integrity incidents occur. One additional common data validity challenge is metrics standardization. For example, two analysts may have different models for how they calculate monthly recurring revenue.

This data validity challenge can be overcome with the implementation of a metrics or semantics layer. This has been introduced by LookML for BI reporting and by dbt to cover the larger data stack.

Methods to ensure data validity include data validation rules, data input controls, data cleansing, and data observability. Learn more in our blog post Data Validity: 8 Clear Rules You Can Use Today.

Data Validity vs. Data Integrity: Comparing the Differences

Purpose

Data integrity and data validity are part of the six dimensions of data quality (along with accuracy, completeness, uniqueness, and consistency) each serve distinct purposes.

Data integrity is about making sure information is complete, organized, and safe from damage or mistakes. Data validity, on the other hand, is about making sure the information is correct and makes sense.

Focus

Data validity emphasizes the correctness and relevance of data at the point of entry or during data collection. Data integrity is more concerned with safeguarding the data from unauthorized access, corruption, or loss throughout its entire existence in a system.

Methods

Some methods to ensure data integrity include:

- Backups: Creating copies of information, like having an extra copy of your school notes in case you lose the original.

- Rules: Setting up rules for how information is entered, like having a template for your notes so they’re consistent and easy to read.

- Access control: Limiting who can change the information, like letting only your teacher correct your notes to avoid mistakes.

For data validity, some methods include:

- Validation: Checking that the information entered follows specific rules, like making sure a phone number has the right number of digits.

- Review: Having someone double-check the information, like asking a friend to look over your notes for mistakes.

- Comparing: Cross-referencing the information with other sources, like comparing your notes with a textbook to make sure the facts are correct.

Consequences

Both data integrity and data validity are important because they help us trust and use data to make good decisions.

The downstream implications of bad data integrity or bad data validity include loss of this trust, reduced efficiency, compliance issues, poor decision-making, and damage to reputation.

Take a proactive and systematic approach

Data integrity and data validity are terms that emerged in an earlier era of data management, when data was far more static and structured.

In today’s fast-paced and complex data landscape, understanding data as it moves through the modern data stack requires a more comprehensive approach.

This is where the concept of data observability comes in – the ability to monitor data pipelines, detect issues, and understand the context around data to ensure not just integrity and validity, but all the data quality metrics that matter.

Data observability is a more powerful paradigm than the older data integrity and validity constructs for today’s world of real-time processing and the prevalence of distributed systems that organizations now inhabit.

Interested in learning more about the Monte Carlo data observability platform? Schedule a time to talk to us below!

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage