Exclusive Preview: O’Reilly Data Quality Fundamentals

Below, we share an excerpt from Data Quality Fundamentals: A Practical Guide to Building Trustworthy Data Pipelines, written by Barr Moses, Lior Gavish, and Molly Vorwerck. Access the first 10 pages for free, here.

If you’ve experienced any of the following scenarios, raise your hand. (Or, you can just nod in solidarity – there’s no way we’ll know otherwise).

5,000 rows in a critical (and relatively predictable) table suddenly turns into 500, with no rhyme or reason.

A broken dashboard that causes an executive dashboard to spit null values.

A hidden schema change that breaks a downstream pipeline.

And the list goes on.

This book is for everyone who has suffered from unreliable data, silently or with muffled screams, and wants to do something about it. We expect that these individuals will come from data engineers, data analytics, or data science backgrounds, and be actively involved in building, scaling, and managing their company’s data pipelines.

On the surface, it may seem like Data Quality Fundamentals is a manual about how to clean, wrangle, and generally make sense of data – and it does. But moreso, this book tackles best practices, technologies, and processes around building more reliable data systems, and in the process, cultivating data trust with your team and stakeholders.

In Chapter 1, we’ll discuss why data quality deserves attention now, and how architectural and technological trends are contributing to an overall decrease in governance and reliability. We’ll introduce the concept of “data downtime,” and explain how it harkens back to the early days of site reliability engineering (SRE) teams and how these same DevOps principles can apply to your data engineering workflows, too.

In Chapter 2, we’ll highlight how to build more resilient data systems by walking through how you can solve for and measure data quality across several key data pipeline technologies, including data warehouses, data lakes, and data catalogs. These three foundational technologies store, process, and track data health pre-production, which naturally leads us into Chapter 3, where we’ll walk through how to collect, clean, transform, and test your data with quality and reliability in mind.

Next, Chapter 4 will walk through one of the most important aspects of the data reliability workflow – proactive anomaly detection and monitoring – by sharing how to build a data quality monitor using a publicly available data set about exoplanets. This tutorial will give readers the opportunity to directly apply the lessons they’ve learned in Data Quality Fundamentals to their work in the field, albeit at a limited scale.

Chapter 5 will provide readers with a bird’s eye view into what it takes to put these critical technologies together and architect robust systems and processes that ensure data quality is measured and maintained no matter the use case. We’ll also share how best-in-class data teams at Airbnb, Uber, Intuit, and other companies integrate data reliability into their day-to-day workflows, including setting SLAs, SLIs, and SLOs, and building data platforms that optimize for data quality across five key pillars: freshness, volume, distribution, schema, and lineage.

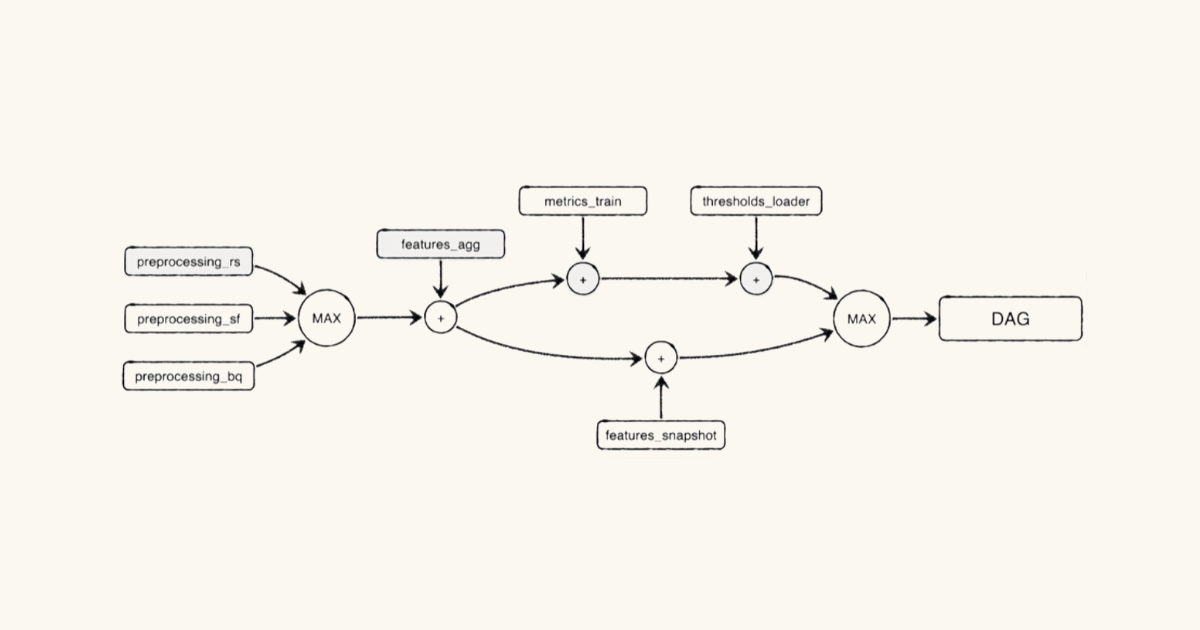

In Chapter 6, we’ll dive into the steps necessary to actually react to and fix data quality issues in production environments, including data incident management, root cause analysis, post-mortems, and establishing incident communication best practices. Then, in Chapter 7, readers will take their understanding of root cause analysis one step further by learning how to build field-level lineage using popular and widely adopted open source tools that should be in every data engineer’s arsenal.

In Chapter 8, we’ll discuss some of the cultural and organizational barriers data teams must cross when evangelizing and democratizing data quality at scale, including best-in-class principles like treating your data like a product, understanding your company’s RACI matrix for data quality, and how to structure your data team for maximum business impact.

In Chapter 9, we’ll share several real-world case studies and conversations with leading minds in the data engineering space, including Zhamak Dehghani, creator of the data mesh, António Fitas, whose team bravely shares their story of how they’re migrating towards a decentralized (and data quality-first!) data architecture, and Alex Tverdohleb, VP of Data Services at Fox and a pioneer of the “controlled freedom” data management technique. This patchwork of theory and on-the-ground examples will help you visualize how several of the technical and process-driven data quality concepts we highlight in Chapters 1-8 can come to life in stunning color.

And finally, in Chapter 10, we finish our book with a tangible calculation for measuring the financial impact of poor data on your business, in human hours, as way to help readers (many of whom are tasked with fixing data downtime) make the case with leadership to invest in more tools and processes to solve these problems. We’ll also highlight four of our predictions for the future of data quality as it relates to broader industry trends, such as distributed data management and the rise of the data lakehouse.

At the very least, we hope that you walk away from this book with a few tricks up your sleeve when it comes to making the case for prioritizing data quality and reliability across your organization. As any seasoned data leader will tell you, data trust is never built in a day, but with the right approach, incremental progress can be made – pipeline by pipeline.

Check out our exclusive preview of O’Reilly’s Data Quality Fundamentals here.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage