Mastering Data Quality: 5 Lessons from Data Leaders at Nasdaq and Babylist

What does it mean to be truly “data-driven”?

All too often, teams tasked with becoming “data-driven” get excited about new technologies (Snowflake! dbt! Databricks!) while overlooking or failing to understand what it really takes to make their tools — and, ultimately, their data initiatives — successful.

When it comes to driving impact with your data, you first need to understand and manage that data’s quality. Achieving high-quality data isn’t something that happens overnight, though; rather, it’s a phased process that requires organizational buy-in.

On the surface, it doesn’t seem like Babylist and Nasdaq are companies that have much in common. When it comes to their commitment to data quality and reliability, though, they’re more alike than you might think.

We spoke with Mark Stange-Tregear, VP of Data at Babylist, and Michael Weiss, Senior Product Manager at Nasdaq, to learn how each has embarked on his respective journey to build a modern data stack premised on overarching data trust. In our conversation, we learned about Mark and Mike’s “crawl, walk, run” approach to data quality and gained helpful tips and tricks for mastering data quality at scale.

Building modern data stacks at Babylist and Nasdaq

Mark Stange-Tregear, Babylist

As VP of Data at Babylist, Mark Stange-Tregear keeps pretty busy.

“We use data a lot to really understand our users, our business operations, and to draw insights into how the business is operating, how our users’ experience is going, and to factor that into the decisions we make on a day-to-day basis about our product or our business operations or our planning,” he says.

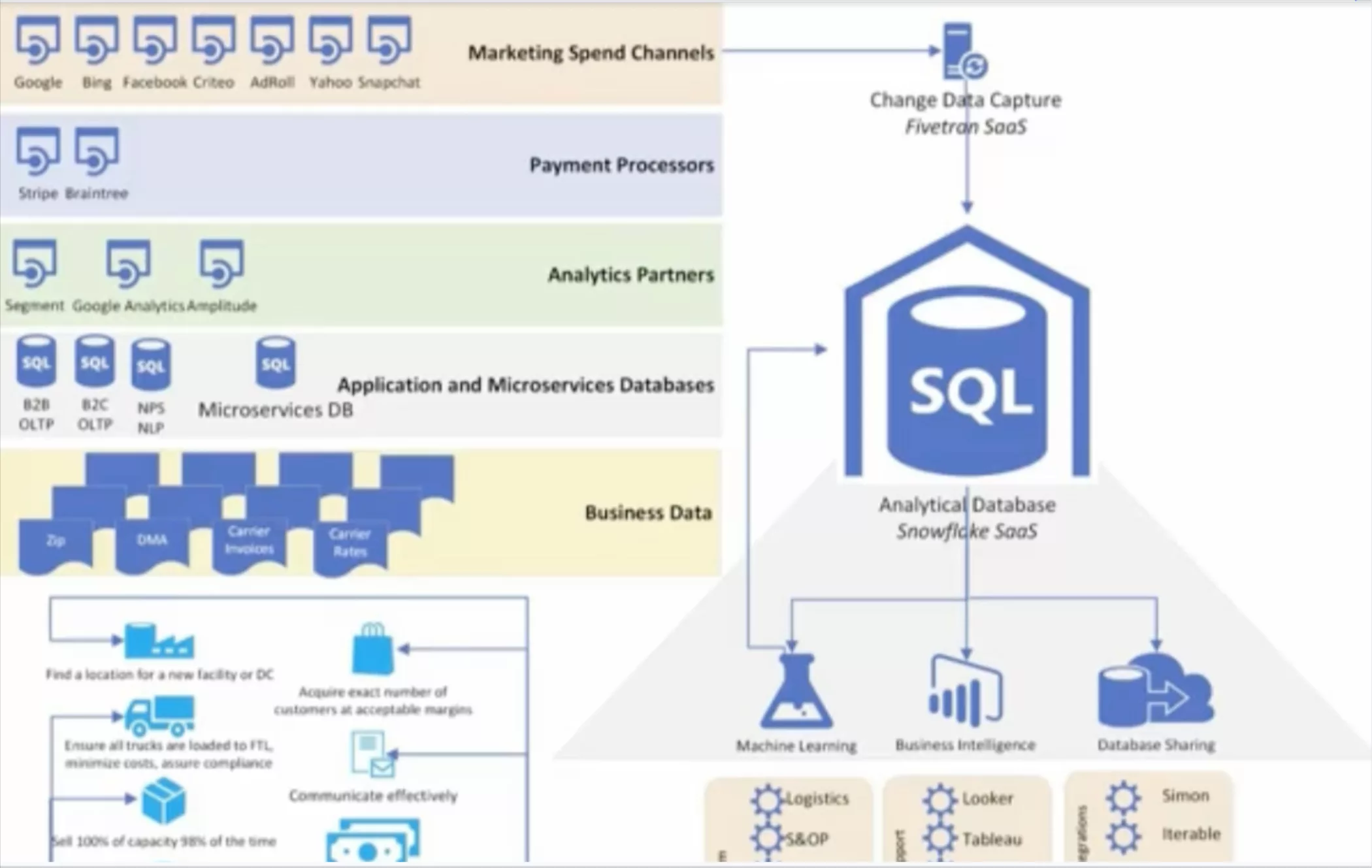

Babylist’s sophisticated data stack comprises several technologies: Snowflake at the center of storage and compute, dbt for modeling, an AWS data science stack atop SageMaker, Segment and Astronomer for reverse ETL into various internal and external tools, Sigma for data visualization, Secoda for cataloging, and Monte Carlo for data observability.

To Mark, several predictable traits — accuracy, for example, and frequency and consistency of updates — define data quality, but discoverability is key. “You’re not going to have a lot of use of data if no one can discover it,” says Mark. Likewise, it’s important that end users truly understand the company’s data and how various data sets interact with one another. “It’s very difficult to say you’ve got a high-quality data set if people don’t actually understand what it means or how it interacts,” he says.

Mike Weiss, Nasdaq

Like Babylist, Nasdaq is a “data-informed” organization that views data as a vital part of the organization’s broader mission.

“As exchange operators and stewards of the financial health and wellbeing of the global economy, we have to make sure we’re making decisions off of data consistently, and it’s critical to our business,” says Mike.

Nasdaq’s data stack centers around a proprietary ingestion service the company built in 2013 – which means the team has been operating a data warehousing and lake solution in AWS for the better part of a decade.

Elsewhere in the data stack, Mike and his team use Parque and S3 for raw data storage, Redshift as the query engine, dbt to prepare models for end users, QuickSight for business intelligence, Monte Carlo for data observability, and Atlan for cataloging and governance.

To Mike, data timeliness and completeness are important vectors of success when it comes to assessing data quality, although he notes the value of other more complex qualities as well. “Things like accuracy, reliability, readability, and relevance can get more challenging depending on the width of your data,” he notes.

The crawl/walk/run toward data quality

As stewards of data quality across their organization, Mark and Mike have both been responsible for investing in the tools and deploying the processes that ensure data reliability at scale. At both Babylist and Nasdaq, data quality isn’t binary — it’s not something that either exists or it doesn’t, and inching toward higher levels of data quality has happened slowly and deliberately over time.

Mark and Mike discussed the crawl/walk/run framework vis a vis data quality, in which achievements happen over ongoing phases, and shared their insights at each step of the journey.

Here are five lessons we learned from their stories.

Lesson 1: Gain organizational alignment first

Data quality is a company value, not a data team responsibility. The sooner multiple constituents can embrace this fact, the better — and the faster the data team can proceed from beyond the “crawl” phase.

“At the end of the day, if you really do want to use data as an organization in a fairly fundamental way, it’s something everyone has to be aware of and has to be taken seriously,” says Mike.

Gaining organizational alignment happens in a few ways, but much of it centers around higher-level thinking and communication.

“It comes down to how the organization is going to use the data,” explains Mark. “Not all data has the same impact. It’s critical to understand: what are my data areas, my data groupings, what level of accuracy or quality do I need them to be at to appropriately fit with what we’re trying to use them to do?”

Once your data team understands the use case and value of each data set, you can more clearly communicate that to the broader organization and make the case for data quality at scale.

“The moment we started putting data in the hands of the decision-makers directly, more so than by proxy via some engineering team, it became more tangible for them,” says Mike. “They can really understand [what it means] when data is wrong.”

Lesson 2: Apply software engineering best practices

In the earliest stages of pursuing data quality, it’s important to lay a solid foundation by applying software engineering best practices.

Mark advises ensuring your team is doing basic things, like merge requests and code checks, following standard guides and conventions for structuring code to ensure consistency, and automating pipelines for code validation as much as possible.

“Pretty standard CICD stuff you would typically do on most software projects — apply those same things to data pipelines,” he says.

Testing, too, is a critical element that can ultimately save material time and resources.

“Testing in the wild is interesting,” notes Mike. “If you’re working on limited hardware, this is where moving to the cloud makes such a huge difference, because if you’re working on a relatively limited tech stack, you may not be able to run at full scale.”

Lesson 3: Lean on small bets vs. projects too big to succeed

As you lay your data quality foundation, capture wins, and begin to convert the data-haters into data quality acolytes, it can be easy to become overly ambitious in your data quality initiatives.

“Often in data, we see a lot of people trying to take on these giant initiatives, and they’re just setting themselves up for failure,” says Mike. Instead, he says, you should work to identify smaller projects with a higher likelihood of success. “That will help you get organizational buy-in much sooner and lead to more investment and, hopefully, more wins in the future,” he says.

Similarly, it’s important to be realistic about the near-term purpose and role of new tools and technologies.

“When you bring a new tool in, it’s important to baseline what you’re trying to accomplish with that tool and not try to boil the ocean,” says Mike. Embrace new tools and allow them to complete the jobs they were designed to do as simply as possible from day one.

Lesson 4: Systemize data quality processes

The walk stage, according to Mark, is the “victim of our own success” stage. At this point, the data team has gained organizational buy-in such that it can’t keep up with the data observability work itself — likely due to a lack of systemic data quality processes.

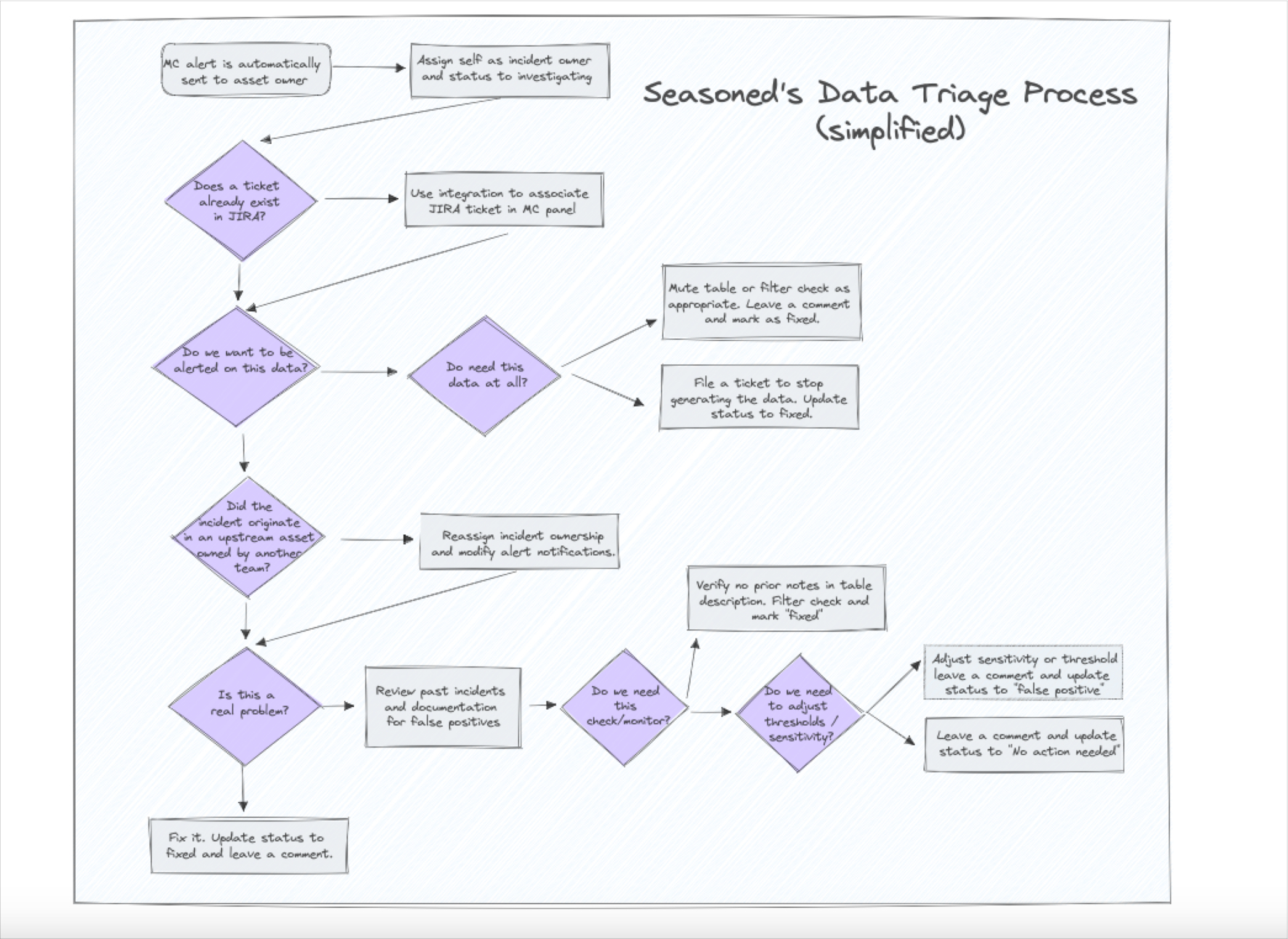

Data quality success isn’t just about the tools — it’s also about workflow. “What happens when an alert gets introduced? Who’s responsible?” Mark recommends asking. “None of this data quality work can happen at scale without some really deep system and process thinking.”

This is the time, according to Mike, to identify your company’s critical assets and put them under management first.

Lesson 5: Start with testing, then leverage data observability

Once you’ve built a solid foundation, gained organizational buy-in, and established systems and processes that support the tools in your data stack, you’ve officially reached the “run” stage of the data quality journey. This is where you may find yourself leveraging data observability to shift from reactive to proactive data quality management.

Data observability tools bring the data quality conversation into areas that will ultimately benefit the most people. According to Mike, it’s about working smarter — not harder. “Leveraging APIs and plugins and things like that to really enable producers and data producers to derive their own alerts is important,” he says.

Monte Carlo is equipped with several features that make managing data quality an easy habit rather than a tedious task. The tool automatically detects and monitors data pipelines, so various teams (not just the data team!) learn when and why data may be down.

“Monte Carlo’s custom monitors have been really nice, and field health monitors out of the box have been really valuable,” says Mike. “These offer really nice insights into the health not just of the table itself, but the actual structure of the table.” Monte Carlo gives upstream and downstream lineage for a 40,000-foot view of the entire data ecosystem — and this helps stakeholders across the company truly run.

The future of data quality management

In the future, data quality management won’t necessarily be the responsibility of data leaders like Mark and Mike. Rather, data observability will be the purview of the entire organization, facilitated by the data team, and this collective responsibility will help companies truly scale.

In this future state, we expect we’ll see ownership of data quality shift from a central data team to data producers and/or consumers, and we’ll see companies expand data observability to their broader ecosystems.

To scale data quality management and achieve company-wide data trust, Babylist and Nasdaq leveraged data observability. You should too.

Ready to learn more? Reach out to Monte Carlo today.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage

![[VIDEO] How Yotpo Drives Data Observability with Monte Carlo](https://www.montecarlodata.com/wp-content/uploads/2021/01/Screen-Shot-2021-01-29-at-9.35.32-AM.png)