How BlaBlaCar Reduced Data Incident Time to Resolution by 100+ Hours Per Quarter with Monte Carlo

BlaBlaCar is the world’s leading community-based travel network. Carpooling is its core product, but the company has expanded with bus lines around the world and is adding new modes of transportation.

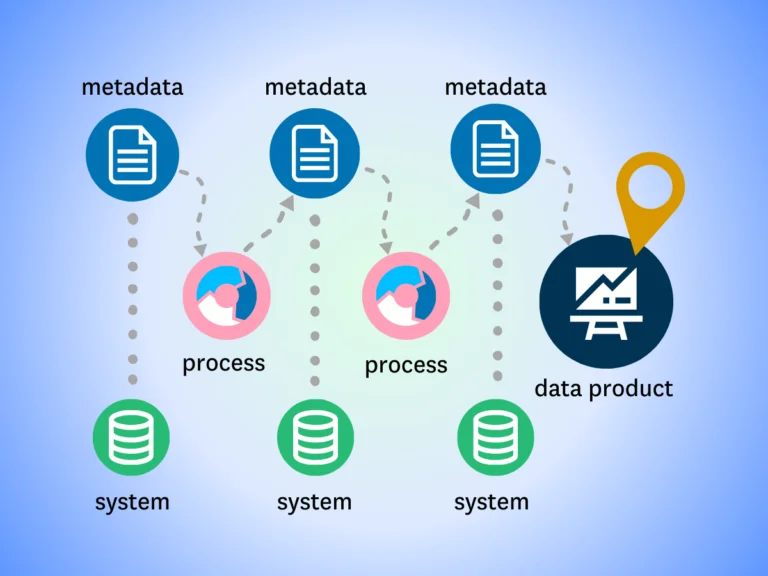

BlaBlaCar operates a modern data stack in which multiple external data sources, streaming data from its platform, and production databases all land in BigQuery. Data is critical to its operations because it powers the features that connect drivers and passengers.

“Data is the backbone of our business. It’s incorporated throughout our company from marketing to support through products, and we’ve been a pioneer in areas like machine learning for many years now,” said Kineret Kimhi, Data Engineering and BI Manager, BlaBlaCar.

The Challenge: Achieving Faster Time to Value at Scale

BlaBlaCar was growing fast, but adding headcount to the data engineering team wasn’t enough to keep up with the rapidly increasing needs of the business.

“We started with this core product and suddenly acquired a bus line and then another company and all of a sudden we had multiple modes of transportation,” said Kineret. “It took us a year to add everything into the central warehouse, and we realized if we want to help the company grow and add more modes of transportation we need to find a way to scale.”

After careful research and consideration, the engineering team zeroed in on data quality as a fundamental problem.

“When we looked into it we realized that much of our capacity issues came from data quality issues popping up,” said Kineret. “We discovered the process of finding the root cause of data quality issues took 200 hours a quarter across our entire team. About half of our data incidents were being found by data consumers, which is not ideal.”

Even getting a full picture of the company’s data health proved challenging.

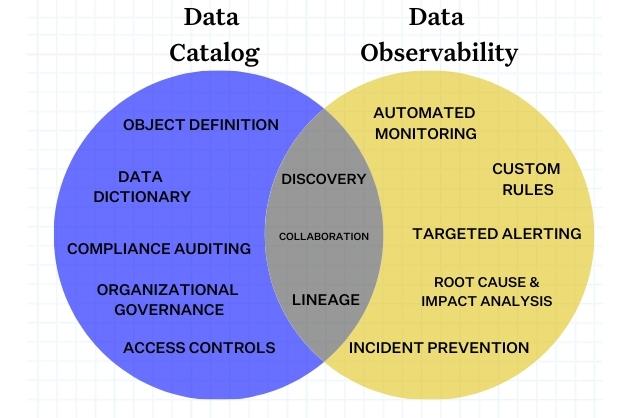

“We didn’t have good visibility into the data health of the organization. We could see there were failures and we had KPIs, but they couldn’t give us the full landscape,” she said. “That’s when we realized we needed data observability because you can’t plan to find all the ways data will break with all of the unknown unknowns.”

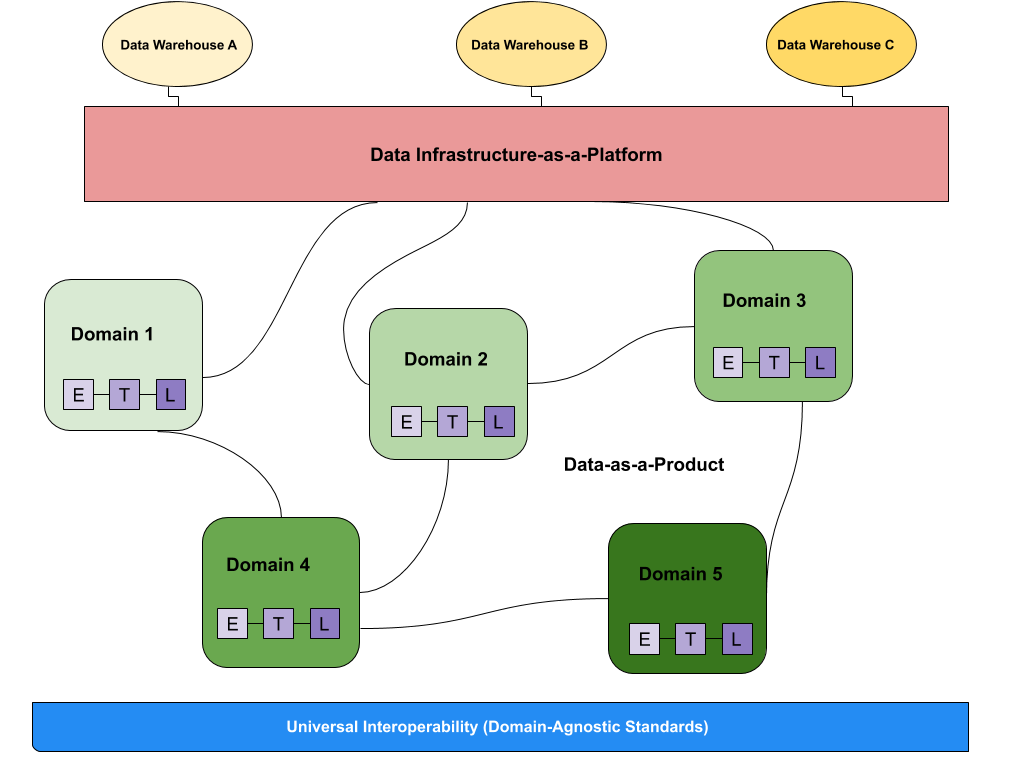

Around the same time, the BlaBlaCar data team came across Zhamak Dehghani’s data mesh concept.

“We realized many of the problems we were experiencing could be solved by this new socio-technical concept. We decided to start with a POC in collaboration with marketing. We embedded data engineers, scientists, analysts, and a software engineer within marketing to operate as a mesh and we collected feedback over three months,” said Kineret.

The team found that it delivered more, moved faster, and found the work easier. As a result, Kineret and BlaBlaCar decided to move fully to a data mesh.

The Solution: Monte Carlo + Data Mesh

The BlaBlaCar team demoed multiple data observability solutions before selecting Monte Carlo as their vendor of choice.

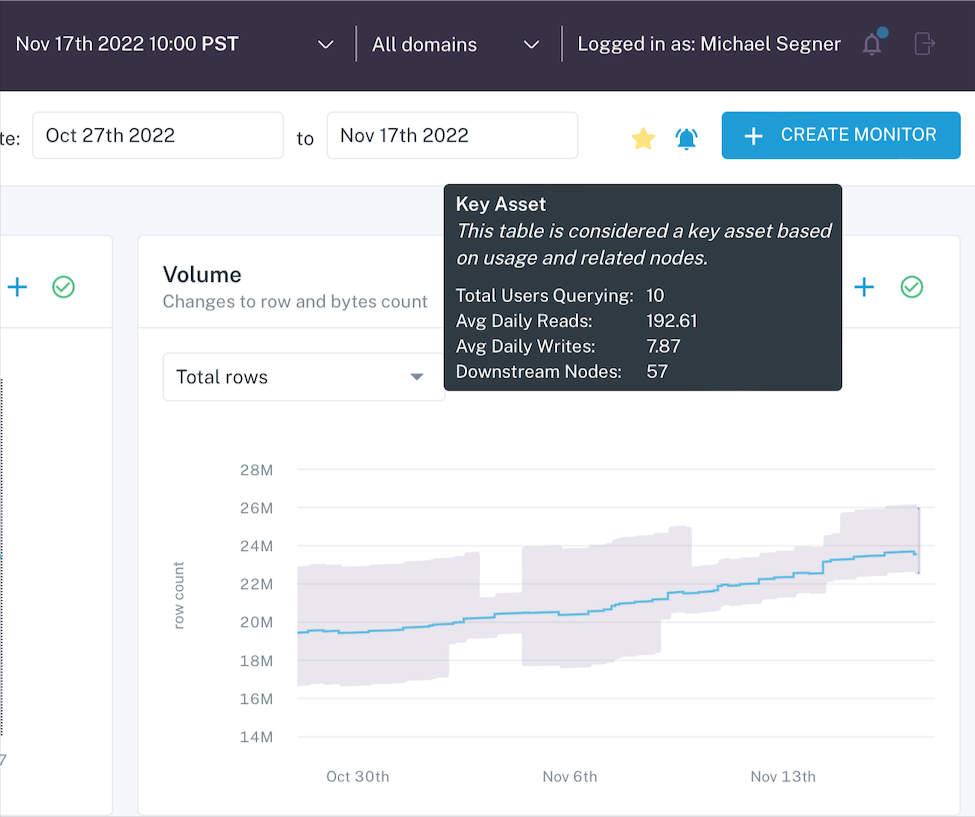

“We liked that we just had to plug it in and there wasn’t much setup at all. Within two weeks we had an alerting system in place, and on our own time we added our custom SQL rules,” said Kineret. The platform’s data lineage and key asset capabilities were also instrumental in BlaBlaCar’s transition to a data mesh.

“When we decided we’re moving to a data mesh, the first thing we did was define our business domains and to do that we used Monte Carlo’s data lineage feature which helped us understand the dependencies,” she said. “It helped untangle the spaghetti and understand what’s going on across over 10,000 tables, which would have been impossible to do manually.”

“The key asset feature told us what tables were the most important and the data insights feature told us which tables were unused so we could prioritize our important tables as part of our reorganization and cut the thousands of tables we did not need, which was a massive time saver,” she added.

The Outcome: Reduced Time to Resolution by 100+ Hours Per Quarter

“There have been issues found by Monte Carlo that otherwise would not have been found, which has saved us time and resources since we are no longer going back and backfilling months of data that was wrong. The 200 hours of investigating the root cause is now basically cut in half,” said Kineret. “Now, data engineers barely have to investigate the issue because the root cause is right in front of you.”

In addition to the helpful insights and best practices Kineret has provided in the past for moving to a data mesh, she also recommends investing in governance structures from the start.

“If you’re going to separate your central team, you must have a strong set of governance policies so everyone is doing things the same way. You need to have some kind of contract between them or mutual understanding on how you build things, otherwise you will lose all your flexibility when priorities change and you need to shift people across teams.”

She also recommends setting up cross-functional teams to avoid the “lone expert” problem that can stifle collaboration.

“We got a lot of positive feedback from our internal teams on our transition to the data mesh, but one of the downsides was individuals working in domains worried they are the only practitioner and don’t have someone to consult with. So we created horizontal groups we call chapters that we strengthen and build collaboration and reporting structures around.”

The Future: Data SLAs

With high-quality data and decentralized teams in place, the next step for the BlaBlaCar data team is to further refine its data ownership practices and start setting data SLAs.

“We’re still finalizing ownerships, but we have a process and understanding in place so we avoid a game of hot potato when there are issues,” said Kineret. “We’re looking into data contracts as more of a carrot approach: if you want to participate in this amazing mesh, then here are the things that you need to abide by, including a data steward who will have a data contract with the central team that involves things like SLAs and API contracts you need to meet.”

Looking back on the team’s recent transformation, Kineret believes it has been a game changer for her team.

“Our main goals were to scale more easily, be more agile to change, and provide faster time to value. With our adoption of Monte Carlo as a part of the data mesh, we’ve achieved that in a relatively short period of time.”

If you are a data professional interested in joining the first-rate BlaBlaCar team, the company is currently hiring for multiple data roles.

Watch the Big Data London session “The Road To Data Mesh: BlaBlaCar’s 3-year Journey To Self-serve Data Analytics” featuring BlaBlaCar.

