How To Implement Data Observability Like A Boss In 6 Steps

Data observability refers to an organization’s comprehensive understanding of the health and performance of the data within its systems.

Data observability tools employ automated monitoring, root cause analysis, data lineage, and data health insights to proactively detect, resolve, and prevent data anomalies. This relatively new technology category has been quickly adopted by data teams, in part due to its extensibility (it supports 61 use cases).

But perhaps one of the greatest advantages of a data observability platform is its time to value. Unlike data testing or modern data quality platforms, data observability solutions require minimal configuration or manual threshold setting. They use machine learning monitors to learn how your data behaves, typically over a period of less than 2 weeks, and then alert you to relevant data incidents.

Despite the ease of integration and setup, there are some best practices for implementing data observability. They can be quickly summarized as:

- Crawl: Set up basic data freshness, volume, and schema monitors across your environment. Begin to build incident response muscle by routing and resolving incidents.

- Walk: Add field health monitors and create custom monitors for your key tables to detect data quality anomalies. Define data pipeline SLAs and surface them to your data consumers to set expectations and build data trust.

- Run: Focus on preventing data quality incidents by leveraging data health insights and dashboards. Extend support to additional use cases such as MLOps.

As we all know, when it comes to implementing any technology, the devil can be in the details. Here are six steps, leveraged successfully by hundreds of real data teams, for implementing data observability like a boss.

How To Implement Data Observability

Step 1: Inventory Data Use Cases (Pre-Implementation)

One of the best places to start when figuring out how to implement data observability is taking an inventory of your current (and ideally near-future) data use cases. Categorize them as:

- Analytical: Data is used primarily for decision making or evaluating the effectiveness of different business tactics via a BI dashboard.

- Operational: Data used directly in support of business operations in near-real time. This is typically streaming or micro-batched data. Examples include data used to help customers as part of a support/service motion, or an ecommerce machine learning algorithm that recommends “other products you might like.”

- Customer facing: Data that is surfaced within and adds value to the product offering or data that IS the product. This could be a reporting suite within a digital advertising platform for example.

This is important because data quality is contextual. In some scenarios, such as financial reporting, accuracy is paramount. For other use cases, such as some machine learning applications, data freshness is key and “directionally accurate” will suffice. This process helped Checkout.com when it determined how to implement data observability.
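To make the inventory concrete, here is a minimal Python sketch of how you might record each use case with its category and the quality dimension that matters most to it. The use-case names and the "accuracy"/"freshness" labels are hypothetical illustrations drawn from the examples above, not a prescribed schema.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    ANALYTICAL = "analytical"
    OPERATIONAL = "operational"
    CUSTOMER_FACING = "customer_facing"


@dataclass
class UseCase:
    name: str
    category: Category
    priority: str  # hypothetical label for the dimension that matters most, e.g. "accuracy"


# Hypothetical inventory entries, loosely based on the examples in this step.
inventory = [
    UseCase("financial_reporting", Category.ANALYTICAL, "accuracy"),
    UseCase("product_recommendations", Category.OPERATIONAL, "freshness"),
    UseCase("advertiser_reporting_suite", Category.CUSTOMER_FACING, "accuracy"),
]


def group_by_category(use_cases):
    """Return {Category: [use case names]} so each group can be reviewed together."""
    groups = {}
    for uc in use_cases:
        groups.setdefault(uc.category, []).append(uc.name)
    return groups


for category, names in group_by_category(inventory).items():
    print(category.value, names)
```

Even a list this simple makes the contextual nature of quality explicit: the accuracy-critical entries are the ones that will later need the tightest monitors.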

“Given that we are in the financial sector, we see quite disparate use-cases for both analytical and operational reporting which require high-levels of accuracy” says Checkout.com Senior Data Engineer Martynas Matimaitis. “That forced our hands to [scale data quality management] quite early on in our journey, and that became a crucial part of our day-to-day business.”

The next step is to assess the overall performance of your systems and team. Since you have just begun your journey, it’s unlikely you have detailed insights into your overall data health or operations. However, there are some quantitative and qualitative proxies you can use.

- Quantitative: You can measure the number of data consumer complaints, overall data adoption, and levels of data trust (for example, with an NPS survey). You can also ask the team to estimate how much time they spend on data quality management tasks like maintaining data tests and resolving incidents.

- Qualitative: Is there a desire or an opportunity for more advanced data use cases? Do leaders feel like they’ve unlocked the full value of the organization’s data? Is the culture data driven? Was there a recent data quality disaster that led to very senior escalation?
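For the data trust proxy, the standard Net Promoter Score calculation is easy to reproduce. This sketch assumes consumers answer a 0 to 10 "how likely are you to recommend our data?" style question; the sample responses are hypothetical.

```python
def net_promoter_score(scores):
    """NPS = % promoters (9-10) minus % detractors (0-6), on a -100..100 scale."""
    if not scores:
        raise ValueError("need at least one survey response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be on a 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey responses from data consumers.
responses = [10, 9, 8, 7, 6, 3, 9, 10, 5, 8]
print(net_promoter_score(responses))
```

Recording this number now gives you a baseline to compare against after the rollout, alongside complaint counts and estimated hours spent on data quality.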

Categorizing your data use cases and baselining current performance will also help you assess the gap between your current and desired future state across your infrastructure, team, processes, and performance.

Step 2: Rally And Align The Organization (Pre-Implementation)

Once you have a baseline, you are ready to start building support for your data observability initiative. You will want to start by understanding what pain is felt by different stakeholders.

If there is no pain, take a moment to understand why. It could be that the scale of your data operations, or the overall importance of your data, isn’t yet great enough to warrant an investment in improving data quality via data observability. However, that is unlikely to be the case if you have more than 50 tables and/or your data team spends time on data quality issues every week.

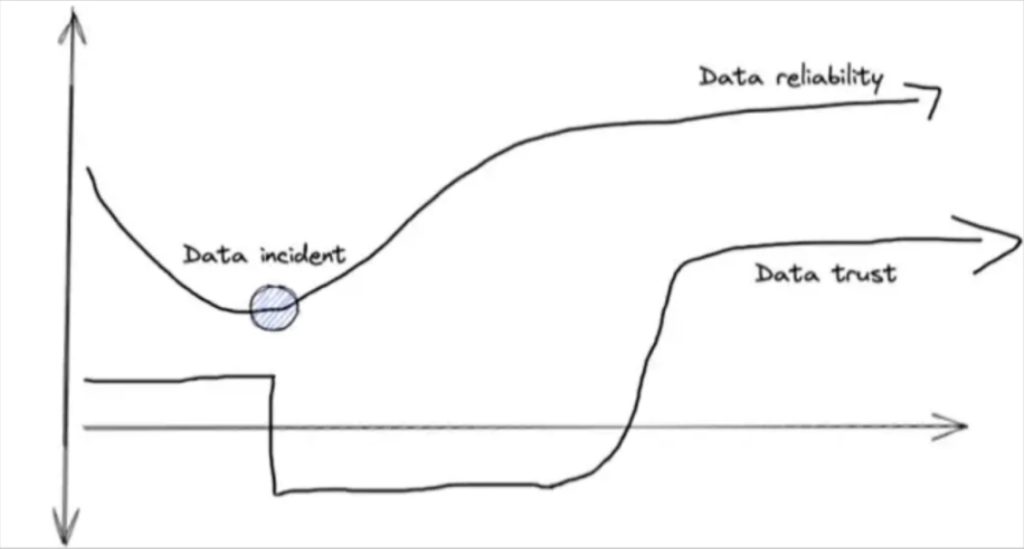

What’s more likely is your organization has quite a bit of unrealized risk. Data quality is low and a costly data incident may be just around the corner…but it hasn’t struck yet. Your data consumers will generally trust the data until you give them a reason not to. At that point, trust is much harder to regain than it was to lose.

The overall risk of poor data quality can be difficult to assess. The consequences of bad data can range from slightly suboptimal decision making to reporting incorrect data to Wall Street.

One approach is to quantify this risk by estimating your data downtime and attributing an inefficiency cost to it. Alternatively, you could use established industry baselines: our study shows bad data can impact, on average, 31% of a company’s revenue.
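Here is a minimal sketch of the downtime estimate, assuming the common definition of data downtime as number of incidents × (time to detection + time to resolution), the same formula Step 6 refers to. The incident count, hours, and the $500-per-hour inefficiency cost are hypothetical placeholders for your own estimates.

```python
def data_downtime_hours(num_incidents, avg_hours_to_detect, avg_hours_to_resolve):
    """Data downtime = number of incidents x (time to detection + time to resolution)."""
    return num_incidents * (avg_hours_to_detect + avg_hours_to_resolve)


def downtime_cost(downtime_hours, cost_per_downtime_hour):
    """Attribute a flat (assumed) inefficiency cost to every hour of data downtime."""
    return downtime_hours * cost_per_downtime_hour


# Hypothetical quarter: 20 incidents, 8 hours to detect and 12 hours to resolve on average,
# with an assumed $500 of business inefficiency per hour of downtime.
hours = data_downtime_hours(20, 8, 12)
print(hours, downtime_cost(hours, 500))
```

A flat hourly cost is a deliberate simplification; incidents on revenue-critical tables will usually cost far more than those on ad hoc tables.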

That risk assessment, along with the cost of business stakeholders dealing with bad data, will be informative but perhaps a bit fuzzy. Pair it with the opportunity cost of the excess data engineering hours spent dealing with bad data.

You can estimate this by totaling up the time spent on data quality related tasks, wincing, and then multiplying that time by the average data engineer’s hourly cost (annual salary divided by annual working hours).
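The arithmetic is simple, but the units matter: convert salary to an hourly rate before multiplying by hours. A minimal sketch, assuming 2,080 working hours per year (52 weeks × 40 hours) and a hypothetical team:

```python
def engineering_opportunity_cost(hours_per_week_on_quality, team_size,
                                 avg_annual_salary, weeks_per_year=52,
                                 working_hours_per_year=2080):
    """Annual cost of engineering time spent on data quality tasks.

    Salary is converted to an hourly rate first so that time and rate
    share the same unit before they are multiplied.
    """
    hourly_rate = avg_annual_salary / working_hours_per_year
    annual_hours = hours_per_week_on_quality * team_size * weeks_per_year
    return annual_hours * hourly_rate


# Hypothetical team: 5 engineers, each spending 10 hours per week on data quality,
# at an average salary of $156,000 per year.
print(engineering_opportunity_cost(10, 5, 156_000))
```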

Data Observability Implementation Pro-Tip: Data testing is often one of the data team’s biggest inefficiencies. It is time consuming to define, maintain, and scale every expectation and assumption across every dataset. Worse, because data can break in a near-infinite number of ways (unknown unknowns), the coverage that tests provide is often woefully inadequate.

Data teams can benefit from data testing as part of a multi-layered defense that includes data observability, but tests (or custom monitors) should be reserved for the clearest thresholds on your most important fields.
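As an illustration of a test worth keeping, here is a minimal sketch of one explicit rule on one important field: the amount must be present and non-negative. The table, field, and rule are hypothetical; in a dbt project, the built-in `not_null` test would cover the "present" half of this rule.

```python
def check_amount_field(rows, field="amount"):
    """Return rows that break an explicit rule: the field must be present and non-negative."""
    return [row for row in rows if row.get(field) is None or row[field] < 0]


# Hypothetical sample rows from an important table.
orders = [
    {"order_id": 1, "amount": 42.0},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": -5.0},
    {"order_id": 4},
]
failures = check_amount_field(orders)
print([row["order_id"] for row in failures])
```

The rule is unambiguous and cheap to maintain, which is exactly what makes it a good candidate for a test rather than something left to learned monitors.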

Congratulations! You now have a business case for your data observability implementation initiative.

From here on, the remaining steps assume you have obtained a mandate and decided either to build a machine-learning-based data monitoring solution or to implement a data observability solution to assist in your efforts. Now, it’s time to implement and scale.

Step 3: Implement Broad Data Quality Monitoring

The third step in implementing data observability is to make sure you have basic machine learning monitors (freshness, volume, schema) in place across your data environment. For many organizations (excluding larger enterprises), you will want to roll these out across every data product, domain, and department at once rather than piloting and then scaling.

This will accelerate your time to value and help you establish critical touch points with different teams if you haven’t done so already.
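To show what these three basic monitors check, here is a minimal sketch. It swaps the machine learning models real tools use for simple statistical stand-ins: freshness compares the time since the last update to the largest historical gap, volume flags row counts more than three standard deviations from history, and schema compares column snapshots. The thresholds and sample data are assumptions.

```python
from datetime import datetime
from statistics import mean, stdev


def freshness_alert(update_times, now, tolerance=1.5):
    """Alert if time since the last update exceeds the largest historical gap x tolerance."""
    if len(update_times) < 2:
        raise ValueError("need at least two updates to learn a cadence")
    gaps = [b - a for a, b in zip(update_times, update_times[1:])]
    return now - update_times[-1] > max(gaps) * tolerance


def volume_alert(history, latest, z_threshold=3.0):
    """Alert if the latest row count is more than z_threshold std devs from history."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


def schema_changes(previous_columns, current_columns):
    """Return added and removed columns between two schema snapshots."""
    prev, curr = set(previous_columns), set(current_columns)
    return {"added": sorted(curr - prev), "removed": sorted(prev - curr)}


# Hypothetical table that loads daily at 06:00, checked on the afternoon of Jan 9.
updates = [datetime(2024, 1, d, 6) for d in range(1, 8)]
print(freshness_alert(updates, now=datetime(2024, 1, 9, 12)))
print(volume_alert([1000, 1020, 980, 1010, 990], latest=400))
print(schema_changes(["id", "amount", "created_at"], ["id", "amount_usd", "created_at"]))
```

Because none of these checks needs table-specific configuration, they can be applied to every table at once, which is what makes a broad rollout practical.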

Another reason for a wide data observability rollout is that, even in the most decentralized organizations, data is interdependent. If you install fire suppression systems in the living room while there is a fire in the kitchen, it doesn’t do you much good.

Wide-scale data monitoring and/or data observability will also give you a complete picture of your data environment and its overall health. Having that 30,000-foot view is helpful as you enter the next stage of your data observability implementation.

“With…broad coverage and automated lineage…our team can identify, understand downstream impacts, prioritize, and resolve data issues at a much faster rate,” said Ashley VanName, general manager of data engineering, JetBlue.

Step 4: Optimize Incident Resolution

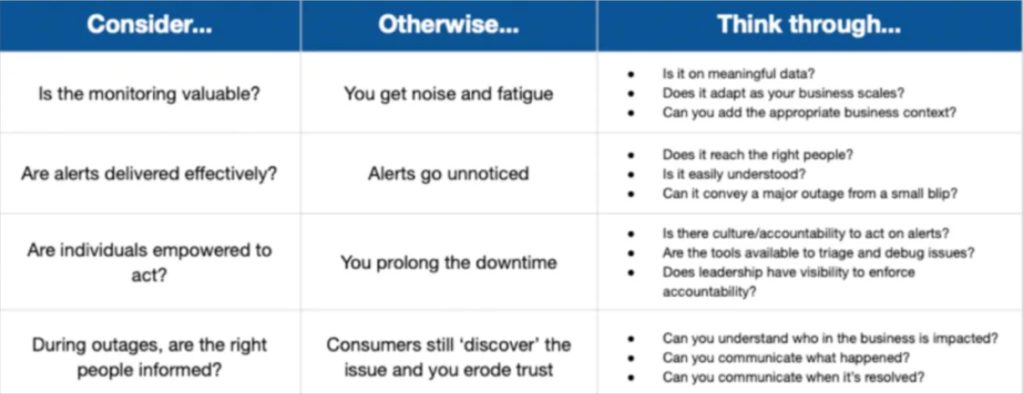

At this stage, we want to start optimizing our incident triage and resolution response. This involves setting up clear lines of ownership. There should be team owners for data quality as well as overall data asset owners at the data product and even data pipeline level.

Breaking your environment into domains, if you haven’t already, can help create additional accountability and transparency for the overall data health levels maintained by different groups.

Clear ownership also lets you fine-tune your alert settings so that alerts are sent to the responsible team’s communication channels at the right level of escalation.
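Once ownership is defined, routing can be expressed as a small lookup. This sketch assumes a hypothetical domain-to-channel map, a fallback channel for unowned domains, and three illustrative severity levels where only "high" pages the on-call owner; none of these names come from a specific tool.

```python
from dataclasses import dataclass

# Hypothetical ownership map: domain -> owning team's alert channel.
OWNERS = {
    "finance": "#data-finance-alerts",
    "marketing": "#data-marketing-alerts",
}
DEFAULT_CHANNEL = "#data-platform-alerts"  # fallback when a domain has no owner yet


@dataclass
class Incident:
    table: str
    domain: str
    severity: str  # "low", "medium", or "high" (illustrative levels)


def route(incident):
    """Return (channel, page_on_call) for an incident based on its domain and severity."""
    channel = OWNERS.get(incident.domain, DEFAULT_CHANNEL)
    page_on_call = incident.severity == "high"
    return channel, page_on_call


print(route(Incident("fct_revenue", "finance", "high")))
```

Alerts that land in the fallback channel are also a useful signal: they point to domains where ownership still needs to be assigned.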

“We started building these relationships where I know who’s the team driving the data set,” said Lior Solomon, VP of Data at Drata. “I can set up these Slack channels where the alerts go and make sure the stakeholders are also on that channel and the publishers are on that channel and we have a whole kumbaya to understand if a problem should be investigated.”

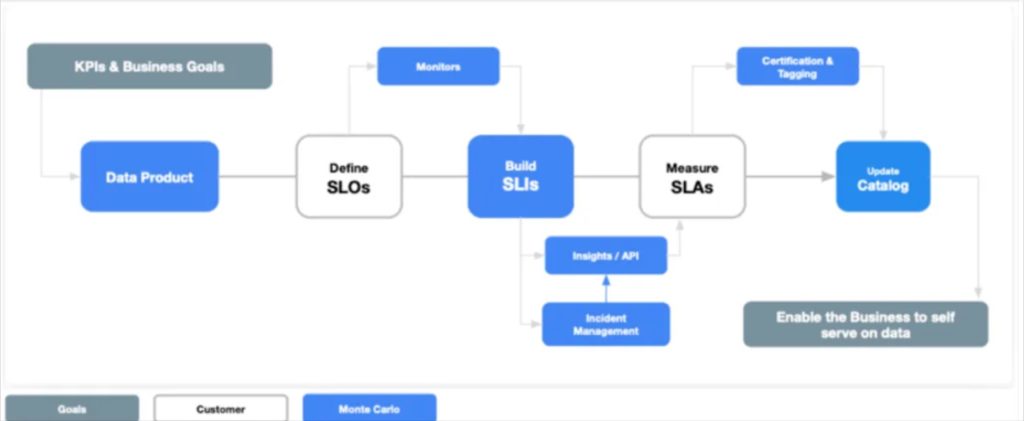

Step 5: Create Custom Data Quality Monitors

Next, focus on layering in more sophisticated, custom monitors. These can be either manually defined (for example, if data needs to be fresh by 8:00 am every weekday for a meticulous executive) or machine learning based. In the latter case, you indicate which tables or segments of the data are important to examine, and the machine learning monitors alert you when the data starts to look awry.
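The manually defined example above translates directly into a rule. This minimal sketch assumes the check runs periodically and receives the table’s last load time; the function name and its 8:00 default are illustrative.

```python
from datetime import datetime, time


def missed_weekday_deadline(last_loaded_at, now, deadline=time(8, 0)):
    """True if it is a weekday past the deadline and today's data did not land on time."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    past_deadline = now.time() >= deadline
    loaded_on_time = (last_loaded_at.date() == now.date()
                      and last_loaded_at.time() <= deadline)
    return is_weekday and past_deadline and not loaded_on_time


# Monday, Jan 8, 2024 at 08:05, but the last load was on Friday.
print(missed_weekday_deadline(datetime(2024, 1, 5, 7, 30), datetime(2024, 1, 8, 8, 5)))
```

Weekends and checks that run before 8:00 never alert, so the executive only hears about a genuinely missed deadline.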

We recommend layering custom monitors onto your organization’s most critical data assets. These can typically be identified as the assets with many downstream consumers or important dependencies.
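If you have lineage available, "many downstream consumers" can be measured directly. This sketch assumes a hypothetical lineage map from each table to the assets that read from it, counts every asset reachable downstream with a breadth-first search, and ranks tables by that count.

```python
from collections import deque

# Hypothetical lineage: each table maps to the tables or dashboards that read it directly.
LINEAGE = {
    "raw_orders": ["stg_orders"],
    "stg_orders": ["fct_revenue", "fct_orders"],
    "fct_revenue": ["exec_dashboard", "finance_report"],
    "fct_orders": ["ops_dashboard"],
    "raw_web_events": ["stg_sessions"],
    "stg_sessions": [],
}


def downstream(table, lineage):
    """All assets reachable from `table`; shared descendants are counted once."""
    seen, queue = set(), deque(lineage.get(table, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(lineage.get(node, []))
    return seen


def most_critical(lineage, top_n=3):
    """Rank tables by how many downstream assets depend on them."""
    counts = {t: len(downstream(t, lineage)) for t in lineage}
    return sorted(counts.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


print(most_critical(LINEAGE))
```

Counting transitive rather than direct consumers matters: a raw table with a single direct reader can still sit upstream of your most important dashboards.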

Custom monitors and SLAs can also be built around different data reliability tiers to help set expectations. You can certify the most reliable datasets as “gold” or label an ad hoc data pull for a limited use case as “bronze” to indicate it is not supported as robustly.
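One way to make tiers actionable is to attach a freshness SLA to each tier and evaluate tables against the SLA of their assigned tier. The SLA values and table assignments below are hypothetical; agree on real ones with your data consumers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TierPolicy:
    max_staleness_hours: int        # freshness SLA promised to consumers
    response_hours: Optional[int]   # None means best effort, with no response SLA


# Hypothetical SLA values per reliability tier.
TIERS = {
    "gold": TierPolicy(max_staleness_hours=2, response_hours=4),
    "bronze": TierPolicy(max_staleness_hours=48, response_hours=None),
}

# Hypothetical table-to-tier assignments.
TABLE_TIERS = {"fct_revenue": "gold", "adhoc_campaign_pull": "bronze"}


def sla_breached(table, hours_since_update):
    """Check a table's staleness against the freshness SLA of its reliability tier."""
    policy = TIERS[TABLE_TIERS[table]]
    return hours_since_update > policy.max_staleness_hours


print(sla_breached("fct_revenue", 3), sla_breached("adhoc_campaign_pull", 3))
```

The same three hours of staleness breaches the gold SLA but not the bronze one, which is exactly the expectation-setting the tiers are meant to communicate.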

The most sophisticated organizations manage a large portion of their custom data observability monitors through code (monitors as code) as part of the CI/CD process.
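A minimal sketch of the monitors-as-code idea: monitor definitions live in the repository next to the pipeline, and a CI step validates them before deployment. The definition format, monitor types, and required fields here are hypothetical and not any specific vendor’s configuration schema.

```python
# Hypothetical monitor definitions stored alongside the pipeline code.
MONITORS = [
    {"name": "revenue_freshness", "table": "fct_revenue", "type": "freshness", "max_hours": 2},
    {"name": "orders_volume", "table": "fct_orders", "type": "volume", "min_rows": 1000},
]

# Hypothetical required parameters per monitor type.
REQUIRED = {"freshness": {"max_hours"}, "volume": {"min_rows"}}


def validate(monitors):
    """Return a list of error strings; an empty list means every definition is valid."""
    errors, seen_names = [], set()
    for m in monitors:
        name = m.get("name", "<unnamed>")
        if name in seen_names:
            errors.append(f"{name}: duplicate monitor name")
        seen_names.add(name)
        if "table" not in m:
            errors.append(f"{name}: missing 'table'")
        mtype = m.get("type")
        if mtype not in REQUIRED:
            errors.append(f"{name}: unknown type {mtype!r}")
            continue
        missing = REQUIRED[mtype] - set(m)
        if missing:
            errors.append(f"{name}: missing {sorted(missing)}")
    return errors


def ci_exit_code(monitors):
    """Print any problems and return the exit code a CI step would use (non-zero fails it)."""
    problems = validate(monitors)
    for p in problems:
        print(p)
    return 1 if problems else 0


print(ci_exit_code(MONITORS))
```

In a real pipeline, a CI job would pass this return value to `sys.exit`, so a broken monitor definition blocks the deployment just like a failing unit test would.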

The Checkout.com data team reduced its reliance on manual monitors and tests by adding monitors as code functionality into every deployment pipeline. This enabled them to deploy monitors within their dbt repository, which helped harmonize and scale the data platform.

“Monitoring logic is now part of the same repository and is stacked in the same place as a data pipeline, and it becomes an integral part of every single deployment,” says Martynas. In addition, centralizing the monitoring logic makes it easy to see all monitors and issues in one place, which shortens time to resolution.

Step 6: Incident Prevention

At this point in our data observability implementation, we have driven significant value to the business and noticeably improved data quality. The previous steps have dramatically reduced our time-to-detection and time-to-resolution, but there is a third variable in the data downtime formula: the number of data incidents.

In other words, one of the final steps for implementing data observability like a boss is to try to prevent data incidents before they happen.
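Written out with the commonly used definition, where N is the number of incidents, TTD the average time to detection, and TTR the average time to resolution, the formula shows why prevention pays off even after detection and resolution are fast. The numbers in the second line are hypothetical: with detection and resolution held constant, halving the number of incidents halves data downtime.

```latex
\text{Data downtime} = N \times (\text{TTD} + \text{TTR})

10 \times (2\,\text{h} + 4\,\text{h}) = 60\,\text{h}
\quad\longrightarrow\quad
5 \times (2\,\text{h} + 4\,\text{h}) = 30\,\text{h}
```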

That can be done by focusing on data health insights like unused tables or deteriorating queries. Analyzing and reporting the data reliability levels or SLA adherence across domains can also help data leaders determine where to allocate their data quality management program resources.
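Both insights mentioned above can be approximated from query history. This sketch assumes you can export each table’s last-queried date and a query’s recent runtimes; the 90-day idle window, three-run comparison window, and 1.5× slowdown ratio are hypothetical thresholds.

```python
from datetime import date, timedelta
from statistics import mean


def unused_tables(last_queried, today, max_idle_days=90):
    """Tables with no reads in the last `max_idle_days` days (candidates for deprecation)."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(t for t, last in last_queried.items() if last < cutoff)


def deteriorating(runtimes_seconds, window=3, ratio=1.5):
    """Flag a query whose recent average runtime is at least `ratio` x its earlier average."""
    if len(runtimes_seconds) < 2 * window:
        return False  # not enough history to compare
    earlier = mean(runtimes_seconds[:window])
    recent = mean(runtimes_seconds[-window:])
    return recent >= ratio * earlier


# Hypothetical query-history extracts.
last_queried = {"fct_revenue": date(2024, 6, 1), "tmp_backfill_2022": date(2023, 1, 15)}
print(unused_tables(last_queried, today=date(2024, 6, 3)))
print(deteriorating([30, 32, 31, 45, 52, 60]))
```

Unused tables are candidates for deprecation, which shrinks the surface area that can break, while steadily slowing queries often precede timeouts and missed SLAs.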

“Monte Carlo’s lineage highlights upstream and downstream dependencies in our data ecosystem, including Salesforce, to give us a better understanding of our data health,” said Yoav Kamin, business analysis group leader at Moon Active. “Instead of being reactive and fixing the dashboard after it breaks, Monte Carlo provides the visibility that we need to be proactive.”

Final thoughts

We covered a lot of ground in this article. Some of our key takeaways for how to implement data observability include:

- Make sure you are monitoring both the data pipeline and the data flowing through it.

- You can build a business case for data monitoring by understanding the amount of time your team spends fixing pipelines and the impact it has on the business.

- You can build or buy data monitoring; the choice is yours. But if you decide to buy a solution, be sure to evaluate its end-to-end visibility, monitoring scope, and incident resolution capabilities.

- Operationalize data monitoring by starting with broad coverage, then maturing your alerting, ownership, preventive maintenance, and programmatic operations over time.

Perhaps the most important point is that data pipelines will break and data will “go bad” unless you keep them healthy. Whatever your next data quality step entails, it’s important to take it sooner rather than later. You’ll thank us later.

Interested in learning how to implement data observability like a boss? Schedule a time to talk with us.
