The Chaos Data Engineering Manifesto: Spare The Rod, Spoil Prod

It’s midnight in the dim and cluttered office of The New York Times currently serving as the “situation room.”

A powerful surge of traffic is inevitable. During every major election, the wave would crest and crash against our overwhelmed systems before receding, allowing us to assess the damage.

We had been in the cloud for years, which helped some. Our main systems would scale (our articles were always served), but integration points across backend services would eventually buckle and burst under the sustained pressure of insane traffic levels.

However, this night in 2020 was different from similar election nights in 2014, 2016, and 2018. That’s because this traffic surge was simulated and an election wasn’t taking place.

Pushing to the point of failure

Simulation or not, this was prod so the stakes were high. There was suppressed horror as J-Kidd–our system that brought ad targeting parameters to the front end–went down hard. It was as if all the ligaments had been ripped from the knees of the pass-first point guard for which it had been named. Ouch.

J-Kidd wasn’t the only system that found its way to the disabled list. That was the point of the whole exercise, to push our systems until they failed. We succeeded. Or failed depending on your point of view.

The next day the team made adjustments. We decoupled systems, implemented failsafes, and returned to the court for game 2. As a result, the 2020 election was the first I can remember where the on-call engineers weren’t on the edge of their seats, white-knuckling their keyboards… at least not for system reliability reasons.

Pre-Mortems and Chaos Engineering

We referred to that exercise as a “pre-mortem.” Its conceptual roots can be traced back to the idea of chaos engineering introduced by site reliability engineers.

For those unfamiliar, chaos engineering is a disciplined methodology for intentionally introducing points of failure within systems to better understand their thresholds and improve resilience.

It was popularized in large part by the success of Netflix’s Simian Army, a suite of programs that would automatically introduce chaos by removing servers and regions and introducing other points of failure into production. All in the name of reliability and resiliency.

While this idea isn’t completely foreign to data engineering, it can certainly be described as an extremely uncommon practice.

No data engineer in their right mind has looked at their to-do list, the unfilled roles on their team, the complexity of their pipelines, and then said: “This needs to be harder. Let’s introduce some chaos.” That may be part of the problem.

Data teams need to think beyond providing snapshots of data quality to the business and start thinking about how to build and maintain reliable data systems at scale.

We cannot afford to overlook data’s increasingly large role in critical operations. Just this year, we witnessed how the deletion of one file and an out-of-sync legacy database could ground more than 4,000 flights.

Of course, you can’t just copy and paste software engineering concepts straight into data engineering playbooks. Data is different. DataOps tweaks DevOps methodology, just as data observability tweaks observability.

Consider this manifesto then a proposal for how to take the proven concepts of chaos engineering and apply them to the eccentric world of data.

The 5 Laws Of Data Chaos Engineering

The principles and lessons of chaos engineering are a good place to start defining the contours of a data chaos engineering discipline. Our first law combines two of the most important.

First Law: Have a bias for production, but minimize the blast radius

There is a maxim among site reliability engineers that will ring true for every data engineer who has had the pleasure of watching the same SQL query return two different results across staging and production environments. That is, “Nothing acts like prod except for prod.”

To that I would add, “production data too.” Data is just too creative and fluid for humans to anticipate. Synthetic data has come a long way and, don’t get me wrong, it can be a piece of the puzzle, but it’s unlikely to simulate key edge cases.

If you are like me, the mere thought of introducing points of failure into production systems probably makes your stomach churn. It’s terrifying. Some data engineers justifiably wonder, “Is this even necessary within a modern data stack where so many tools abstract the underlying infrastructure?”

I’m afraid so. Remember, as the opening anecdote and J-Kidd’s snapped ligaments illustrated, the elasticity of the cloud is not a cure-all.

In fact, it’s that abstraction and opacity–along with the multiple integration points–that make it so important to stress test a modern data stack. An on-premise database may be more limiting, but data teams tend to understand its thresholds as they hit them more regularly during day to day operations.

Let’s move past the philosophical objections for the moment, and dive into the practical. Data is different. Introducing fake data into a system won’t be helpful because the input changes the output. It’s going to get really messy too.

That’s where the second part of the law comes into play: minimize the blast radius. There is a spectrum of chaos and tools that can be used:

- In words only, “let’s say this failed, what would we do?”

- Synthetic data in production

- Techniques like data diff that allow you to test snippets of SQL code on production data

- Solutions like LakeFS allow you to do this on a bigger scale by creating “chaos branches” or complete snapshots of your production environment where you can use production data but with complete isolation.

- Do it in prod, and practice your backfilling skills. After all, nothing acts like prod, but prod.

Starting with less chaotic scenarios is probably a good idea and will help you understand how to minimize the blast radius in production.

Deep diving into real production incidents is also a great place to start. Does everyone really understand what exactly happened? Production incidents are chaos experiments that you’ve already paid for, so make sure that you are getting the most out of them.

Mitigating the blast radius may also include strategies like backing up applicable systems or having a data observability or data quality monitoring solution in place to assist with the detection and resolution of data incidents.

Second Law: Understand it’s never a perfect time (within reason)

Another chaos engineering principle is to observe and understand “steady state behavior.”

There is wisdom in this principle, but it is also important to understand the field of data engineering isn’t quite ready to be measured by the standard of “five 9s,” or 99.999% uptime.

Data systems are constantly in flux and there is a wider range of “steady state behavior.” There will be the temptation to delay the introduction of chaos until you’ve reached the mythical point of “readiness.” Well, you can’t out-architect bad data, and no one is ever ready for chaos.

The Silicon Valley cliche of fail fast is applicable here. Or to paraphrase Reid Hoffman, if you aren’t embarrassed by the results of your first pre-mortem/fire drill/chaos introducing event, you introduced it too late.

Introducing fake data incidents while you are dealing with real ones may seem silly, but ultimately this can help you get ahead by better understanding where you have been putting bandaids on larger issues that may need to be refactored.

Third Law: Formulate hypotheses and identify variables at the system, code, and data levels

Chaos engineering encourages forming hypotheses of how systems will react to understand what thresholds to monitor. It also encourages leveraging or mimicking past real-world incidents or likely incidents.

We’ll dive deeper into the details of this in the next section, but the important modification here is to ensure these span the system, code, and data levels. Variables at each level can create data incidents, some quick examples:

- System: You didn’t have the right permissions set in your data warehouse.

- Code: A bad LEFT JOIN.

- Data: A third party sent you garbage columns with a bunch of NULLs.

Simulating increased traffic levels and shutting down servers impact data systems, and those are important tests, but don’t neglect some of the more unique and fun ways data systems can break bad.
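To make the hypothesis-then-variable idea concrete, here is a minimal sketch of a code-level experiment using Python’s built-in `sqlite3` module. The hypothesis is that a LEFT JOIN from orders to customers returns exactly one row per order. The chaos variable is a duplicated customer key. The `orders` and `customers` tables, their columns, and the helper names are all hypothetical and only there for illustration; a real drill would run against an isolated copy of your own tables.

```python
import sqlite3


def build_db():
    """Create a tiny in-memory database with hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER, region TEXT);
        CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'east'), (2, 'west');
        INSERT INTO orders VALUES (100, 1, 20.0), (101, 2, 35.0), (102, 1, 5.0);
        """
    )
    return conn


def joined_row_count(conn):
    """Row count of the LEFT JOIN under test."""
    query = """
        SELECT COUNT(*)
        FROM orders o
        LEFT JOIN customers c ON o.customer_id = c.id
    """
    return conn.execute(query).fetchone()[0]


conn = build_db()

# Hypothesis: the join never changes the number of order rows.
baseline_orders = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
steady_state_ok = joined_row_count(conn) == baseline_orders

# Chaos variable: the upstream system sends a duplicate customer key.
conn.execute("INSERT INTO customers VALUES (1, 'east')")
rows_after_chaos = joined_row_count(conn)
fan_out_detected = rows_after_chaos != baseline_orders
```

If `fan_out_detected` is true and none of your existing tests or monitors would have caught it, that is the finding. The same pattern (state the expectation, change one variable, compare) applies to system-level and data-level variables too.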

Fourth Law: Everyone in one room (or at least Zoom call)

This law is based on the experience of my colleague, site reliability engineer, and chaos practitioner Tim Tischler.

“Chaos engineering is just as much about people as it is systems. They evolve together and they can’t be separated. Half of the value from these exercises come from putting all the engineers in a room and asking, ‘what happens if we do X or if we do Y?’ You are guaranteed to get different answers. Once you simulate the event and see the result, now everyone’s mental maps are aligned. That is incredibly valuable,” he said.

Also, the interdependence of data systems and responsibilities creates blurry lines of ownership even on the most well-run teams. Breaks often happen, and are overlooked, in those overlaps and gaps in responsibility where the data engineer, analytics engineer, and data analyst are each pointing at one another.

In many organizations, the product engineers creating the data and the data engineers managing it are separated and siloed by team structures. They also often have different tools and models of the same system and data. Feel free to pull these product engineers in as well, especially when the data has been generated from internally built systems.

Good incident management and triage can often involve multiple teams and having everyone in one room can make the exercise more productive.

I’ll also add from personal experience that these exercises can be fun (in the same weird way putting all your chips on red is fun). I’d encourage data teams to consider a chaos data engineering fire drill or pre-mortem event at the next offsite. It makes for a much more practical team bonding exercise than getting out of an escape room.

Fifth Law: Hold off on the automation for now

Truly mature chaos engineering programs like Netflix’s Simian Army are automated and even unscheduled. While this may create a more accurate simulation, the reality is that the automated tools don’t currently exist for data engineering. If they did, I’m unsure if I would be brave enough to use them.

To this point, one of the original Netflix chaos engineers has described how they didn’t always use automation as the chaos could create more problems than they could fix (especially in collaboration with those running the system) in a reasonable period of time.

Given data engineering’s current reliability evolution and the greater potential for an unintentionally large blast radius, I would recommend data teams lean more towards scheduled, carefully managed events.

Data Chaos Engineering Variables AKA What To Break

It would be a bit of a cop out to suggest data engineers overhaul their processes and introduce greater levels of risk without providing more concrete examples of tests or the value that can be derived from them. Here are some suggestions.

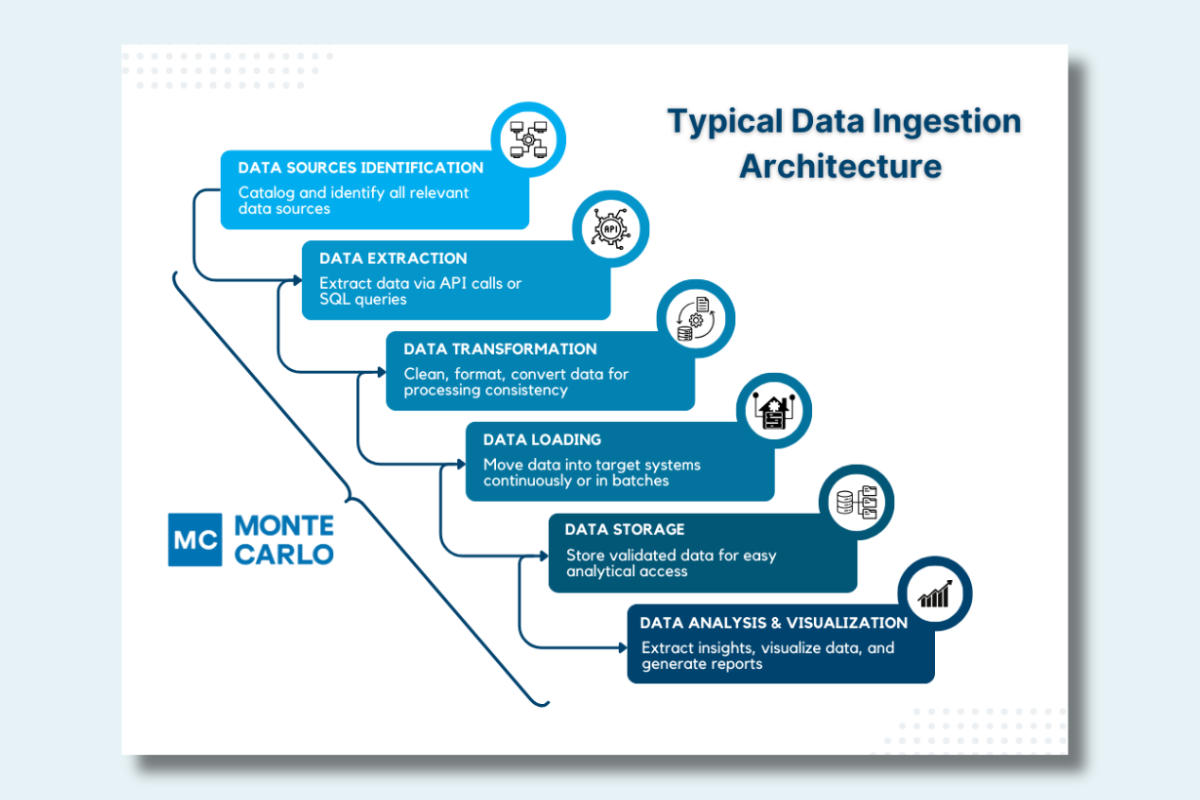

Systems Level

At this level you should be testing the integration points across your IT and data systems. This includes your data pipelines from ingestion and storage to orchestration and visualization. You could create some chaos by:

- Creating problems in Postgres – You may discover you need to decouple operational systems from your analytical systems.

- Killing Airflow jobs or Spark clusters – You may discover you need alerts to notify the team when these failures occur, along with a self-healing pipeline design.

- Changing your number of Spark executor cores – Zach Wilson has pointed out how adding more Spark executor cores can negatively impact reliability without boosting performance. Experiment with that.

- Adjusting the auto-suspend policy in Snowflake – A lot of teams are working to optimize their data cloud costs and it’s easy to cut a bit too deep and cause reliability issues. For example, if you set the auto-suspend more tightly than any gaps in your query workload, the warehouse could end up in a continual state of auto-suspend and auto-resume. This might help you discover you need to re-organize and categorize your data workloads by data warehouse size. Or you may need to be a bit more judicious about who has permission and authority to make these types of changes.

- Unpublishing a dashboard – I call this the scream test. If no one says anything weeks after unpublishing a dashboard, chances are you can retire it. This can also help test your detection and communication skills across data engineering and analyst teams.

- Simulating a connection failure with your streaming pipelines – The Mercari data team quickly fixed this issue thanks to data monitoring alerts. They are tentatively planning to simulate similar issues to drill their detection, triage, and resolution processes.

“The idea is we’ll roleplay an alert from a particular dataset and walk through the steps for triage, root cause analysis, and communicating with the business,” said Daniel, member of the Mercari data reliability engineering team. “By seeing them in action, we can better understand the recovery plans, how we prioritize to ensure the most essential tables are recovered first, and how we communicate this with a team that spans across four time zones.”
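The auto-suspend scenario in the list above is one you can reason about on paper before touching a warehouse. The sketch below is a deliberately simplified model, not Snowflake’s actual scheduling logic: it assumes the warehouse suspends once it has been idle for the timeout and resumes the moment the next query arrives. The function name and the sample workload are hypothetical.

```python
def count_cold_starts(query_windows, auto_suspend_s):
    """Count how often a warehouse would have to start or resume.

    query_windows: iterable of (start_s, end_s) tuples, in seconds.
    auto_suspend_s: idle seconds before the warehouse suspends.

    Simplified assumption: the warehouse suspends once it has been idle
    for auto_suspend_s seconds and resumes when the next query arrives.
    """
    cold_starts = 0
    busy_until = None  # latest finish time among the queries seen so far
    for start, end in sorted(query_windows):
        if busy_until is None or start - busy_until >= auto_suspend_s:
            cold_starts += 1  # never started yet, or already suspended
        busy_until = end if busy_until is None else max(busy_until, end)
    return cold_starts


# Hypothetical workload: a 30-second query every 5 minutes for one hour.
workload = [(t, t + 30) for t in range(0, 3600, 300)]

tight = count_cold_starts(workload, auto_suspend_s=60)   # timeout shorter than the gaps
loose = count_cold_starts(workload, auto_suspend_s=600)  # timeout longer than the gaps
```

Under these assumptions the 60-second setting makes the warehouse resume for every single query, while the 600-second setting starts it once. Replaying your own query history through a model like this is a low-risk first rung on the chaos ladder before changing the real setting.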

Code Level

This level is the T (transformation) in the ETL or ELT process. What happens when something goes wrong in your dbt models or SQL queries? Time to find out!

- Archive a dbt model – Similar to the dashboard scream test or Jenga. Is that model really needed or does the structure hold just fine when you remove a block? This can help evaluate your testing and monitoring, whether there are unknown dependencies, and your lines of ownership.

- Adjust how a key metric is calculated – When there are multiple versions of truth within an organization, trust in data is inevitably undermined by conflicting trends. Do changes to a key metric flow to all your downstream reports? Are there rogue definitions floating around? This can help evaluate the organizational process for defining key metrics and whether you might need to invest in a semantic layer.

- Have other domains interpret your models or SQL code – Sure, one engineer or team might understand the models in a particular domain, but what happens when others need to understand? Is it well documented? Can others help triage incidents?

Data Level

While software engineers also deal with reliability issues at the code and system level, only data engineers must address potential issues at the data level. Have I mentioned that data is different?

This level can’t be neglected because your Airflow jobs may be running fine, your data diff may check out, but the data running across them might just be complete garbage. Garbage in, garbage out (and maybe systems down).

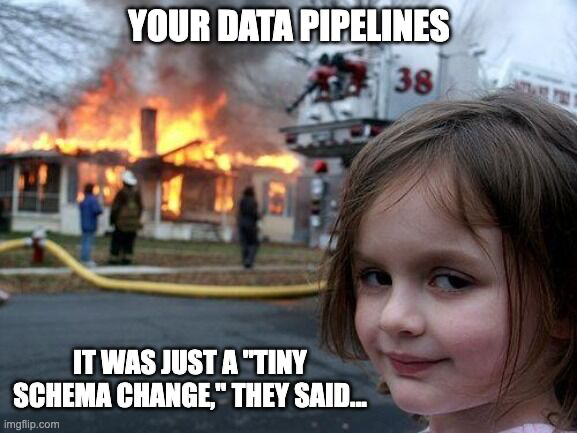

- Change the schema – Software engineers regularly and unintentionally commit code that changes how the data is output in a service, breaking downstream data pipelines. You may need to decouple systems and, for some operational pipelines, put a data contract architecture in place (or some other process for better engineering coordination).

- Create bad data – This may reveal your models aren’t robust against volume spikes, NULLs, etc. in their source tables.

- Duplicate the data – Does a spike in data reveal bottlenecks causing jobs to fail? Do you need to microbatch certain pipelines? Are your pipelines idempotent where they need to be?
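Two of the data-level experiments above, duplicating the data and creating bad data, are easy to rehearse on a toy pipeline first. The sketch below uses only the Python standard library. The `load` function is a stand-in for a real pipeline step, and the row shape, the 5% alert threshold, and every function name are assumptions made for illustration.

```python
import random


def load(target, batch):
    """Toy load step: upsert rows into `target`, keyed by 'id'."""
    for row in batch:
        target[row["id"]] = row
    return target


def inject_nulls(rows, column, fraction, seed=0):
    """Return a copy of `rows` with a fixed share of `column` set to None."""
    rng = random.Random(seed)
    n_bad = round(len(rows) * fraction)
    bad_positions = set(rng.sample(range(len(rows)), n_bad))
    return [
        {**row, column: None} if i in bad_positions else dict(row)
        for i, row in enumerate(rows)
    ]


def null_rate(rows, column):
    """Share of rows where `column` is None (0.0 for an empty input)."""
    rows = list(rows)
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(column) is None) / len(rows)


source = [{"id": i, "amount": float(i)} for i in range(10)]

# Experiment 1: the same batch is delivered twice. Does the row count hold?
target = load({}, source)
target = load(target, source)
is_idempotent = len(target) == len(source)

# Experiment 2: a third party starts sending NULLs. Would a check fire?
corrupted = inject_nulls(source, "amount", fraction=0.3)
null_alert = null_rate(corrupted, "amount") > 0.05  # hypothetical threshold
```

Swapping the upsert in `load` for a plain append is a quick way to watch the idempotency check fail, which is exactly the kind of result you want to surface in a drill rather than in a board report.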

We’re Talking About Practice

We started this manifesto with a point guard, so perhaps it’s fitting we conclude with this infamous 2002 quote from shooting guard Allen Iverson as a warning for those who don’t buy into the practice-like-you-play concept:

We’re in here talking about practice. I mean, listen, we’re talking about practice. Not a game! Not a game! Not a game! We’re talking about practice. Not a game; not the game that I go out there and die for and play every game like it’s my last, not the game, we’re talking about practice, man. I mean, how silly is that? We’re talking about practice.

I know I’m supposed to be there, I know I’m supposed to lead by example, I know that. And I’m not shoving it aside like it don’t mean anything. I know it’s important. I do. I honestly do. But we’re talking about practice, man. What are we talking about? Practice? We’re talking about practice, man!

We’re talking about practice! We’re talking about practice… We ain’t talking about the game! We’re talking about practice, man!

That year the 76ers lost in the Eastern Conference First Round of the playoffs to the Boston Celtics in five games. Iverson’s field goal percentage for the series was a tepid 38.1%.

Ryan Kearns, Tim Tischler, and Michael Segner contributed to this article.
