Our Top 5 Generative AI Articles in 2023

The team here at Monte Carlo has done our fair share of thinking — and writing — about generative AI over the last 12 months. We’ve framed the problems, highlighted the opportunities, analyzed the implications, and given quite a few predictions for the future.

So, as the year comes to a close, we rounded up our top 5 blog posts about generative AI in 2023 to help you catch up before we venture into the AI madness of 2024.

These 5 articles aim to give some clarity on the current state of generative AI, how to define use cases and build for value, and finally, what’s coming next for data and AI teams.

Happy reading!

Generative AI and the Future of Data Engineering

The first and most important question: will generative AI impact data?

TLDR: Yes. In many ways.

Obviously data is a MASSIVE piece of the GenAI puzzle. In this article, Lior Gavish, CTO at Monte Carlo, explored how data and generative AI impact each other, including more access to data, more productive data engineers, a focus on fine-tuning and leveraging LLMs, and how data observability will make generative AI more secure – and maybe vice versa.

The moral of this story: data quality will become an essential consideration for generative AI.

As we hear all the time: garbage in, garbage out. It’s truer than ever with LLMs.

Read more about what generative AI means for data engineering and why data observability is critical for this groundbreaking technology to succeed in Generative AI and the Future of Data Engineering.

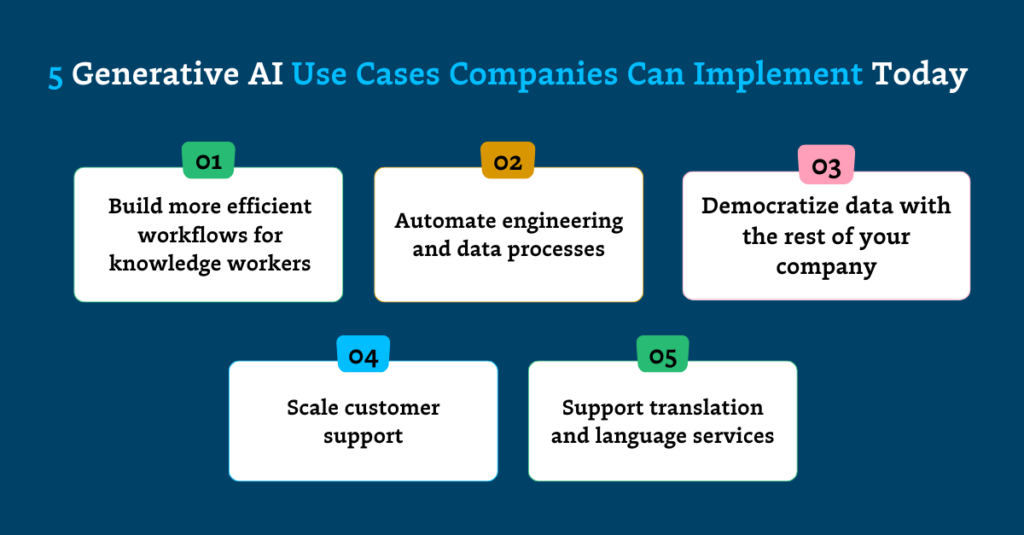

5 Generative AI Use Cases Companies Can Implement Today

If you’re ready to actually start incorporating generative AI use cases into your data strategy, you first need to ensure they’re realistic, achievable, and — probably most importantly — actually worth the ROI.

Barr Moses, CEO of Monte Carlo, dug into key strategies from early AI adopters, from legal firms to sales teams, to learn how they’re putting GenAI to use today and how they plan to scale. She also explored the key considerations for organizations looking to implement these use cases, including vector databases, fine-tuning models, and unstructured or streaming data processing.

Read more in 5 Generative AI Use Cases Companies Can Implement Today.

Organizing Generative AI: 5 Lessons Learned From Data Science Teams

So, your business leadership is all in on generative AI? Now what?

Well, you’re going to need more than a tiger team to turn that MVP AI into an enterprise-ready data product.

In Towards Data Science, Shane Murray, Field CTO at Monte Carlo and former data leader at The New York Times, shared how data leaders can lean on data science teams to build actionable and value-forward AI strategies.

Discover Shane’s top 5 lessons for developing organizational structure, processes, and platforms that can support your GenAI projects.

Read more in Organizing Generative AI: 5 Lessons Learned From Data Science Teams.

The Moat for Enterprise AI is RAG + Fine Tuning – Here’s Why

Production-ready generative AI may still be in its infancy, but it won’t be forever. Getting in on the ground floor may be important, but jumping in without a strong data strategy won’t do your business or your team any favors.

In a follow-up article, Lior Gavish reminded readers that enterprise-ready AI requires diligence. Anyone can slap together an API-enabled AI feature. But to make something that’s truly a value driver, your AI needs to be purposeful and specialized, and that only comes one way: by plugging into reliable, high-quality data for customer and organizational context.

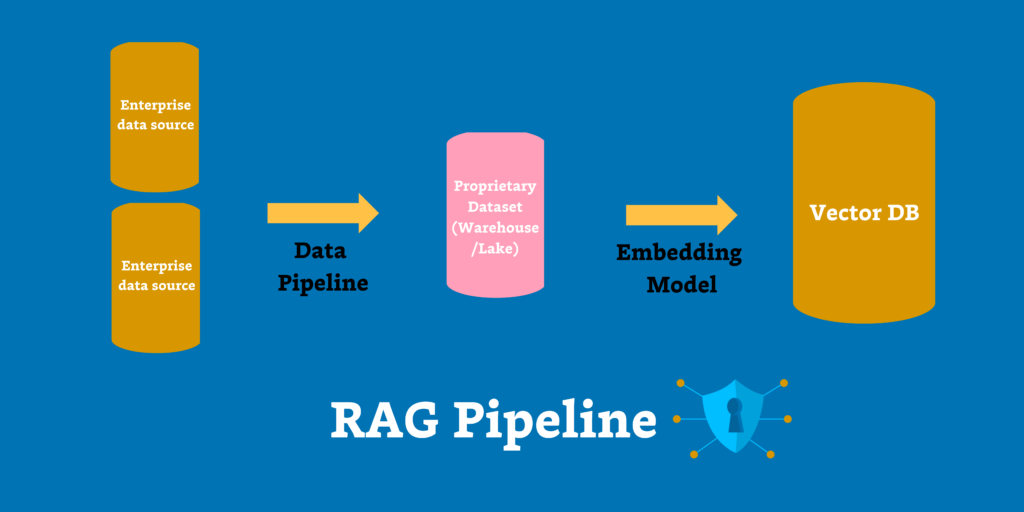

AKA, through RAG (retrieval-augmented generation) and fine-tuning.

Feeding proprietary data into LLMs is the differentiator, but it only works with security and privacy controls and high-quality data from the jump. Lior explores the benefits of RAG and how to get started.

Read more in The Moat for Enterprise AI is RAG + Fine Tuning – Here’s Why.

A.I. Confidential | The Unvarnished Truth From 5 Anonymous Data Leaders

The best way to really understand what someone thinks? Grant them anonymity.

In A.I. Confidential, we did just that. We reached out to 5 top data leaders to get their hot takes on generative AI while keeping their identities completely anonymous.

Let’s just say they delivered.

Their responses ran the gamut, from the convinced: “We’re leaving productivity on the table if we don’t allow GenAI to take over some things and train our people to take advantage of it…”

To the cautious: “…with the landscape moving so fast, and the risk of reputational damage of a ‘rogue’ chatbot, we’re holding fire and waiting for the hype to die down a bit!”

To the aggressively diplomatic: “Does it do things perfectly? No. Is it killing jobs? No. Is it altering our lives? No. Does it seem to help in little ways today? Yes.”

So, if you’re having second thoughts about your generative AI initiative, or you just want a gut check on how others are feeling, read more in A.I. Confidential.

At the end of 2023, what’s the AI verdict?

Well, it’s safe to say that AI isn’t going anywhere. But what that means for your data team totally depends on your business needs.

However, no matter where you land with your GenAI initiatives in 2024, one thing is absolutely certain: you’re going to need lots of high-quality data to make ‘em happen. Without trust in the underlying data that’s feeding your models, your AI products will be on the shelf before they’re even built.

In 2024, as data teams evaluate how to ship AI value at scale, RAG and fine-tuning, and data quality along with them, will be at the center of the AI conversation.

Interested in learning more about how Monte Carlo helps organizations protect their data quality for generative AI? Schedule a call with the team below!

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

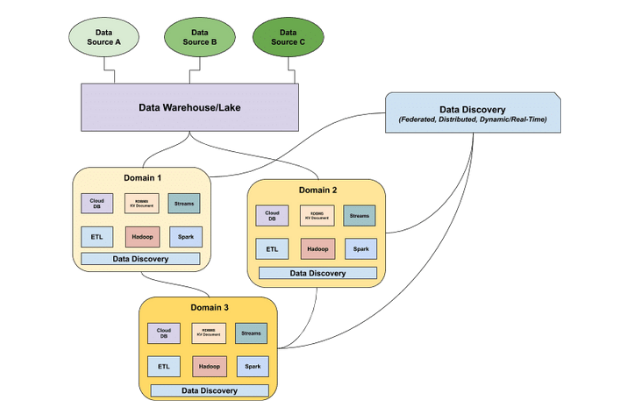

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage