The Moat for Enterprise AI is RAG + Fine Tuning – Here’s Why

The hype around LLMs is unprecedented, but it’s warranted. From AI-generated images of the Pope in head-to-toe Balenciaga to customer support agents without pulses, generative AI has the potential to transform society as we know it.

And in many ways, LLMs are going to make data engineers more valuable – and that’s exciting!

Still, it’s one thing to show your boss a cool demo of a data discovery tool or text-to-SQL generator – it’s another thing to use it with your company’s proprietary data, or even more concerning, customer data.

All too often, companies rush into building AI applications with little foresight into the financial and organizational impact of their experiments. And it’s not their fault – executives and boards are to blame for much of the “hurry up and go” mentality around this (and most) new technologies. (Remember NFTs?).

For AI – particularly generative AI – to succeed, we need to take a step back and remember how any software becomes enterprise ready. To get there, we can take cues from other industries to understand what enterprise readiness looks like and apply these tenets to generative AI.

In my opinion, enterprise ready generative AI must be:

- Secure & private: Your AI application must ensure that your data is secure, private, and compliant, with proper access controls. Think: SecOps for AI.

- Scalable: your AI application must be easy to deploy, use, and upgrade, as well as be cost-efficient. You wouldn’t purchase – or build – a data application if it took months to deploy, was tedious to use, and impossible to upgrade without introducing a million other issues. We shouldn’t treat AI applications any differently.

- Trusted. Your AI application should be sufficiently reliable and consistent. I’d be hard-pressed to find a CTO who is willing to bet her career on buying or building a product that produces unreliable code or generates insights that are haphazard and misleading.

With these guardrails in mind, it’s time we start giving generative AI the diligence it deserves. But it’s not so easy…

Why is enterprise AI hard to achieve?

Put simply, the underlying infrastructure to scale, secure, and operate LLM applications is not there yet.

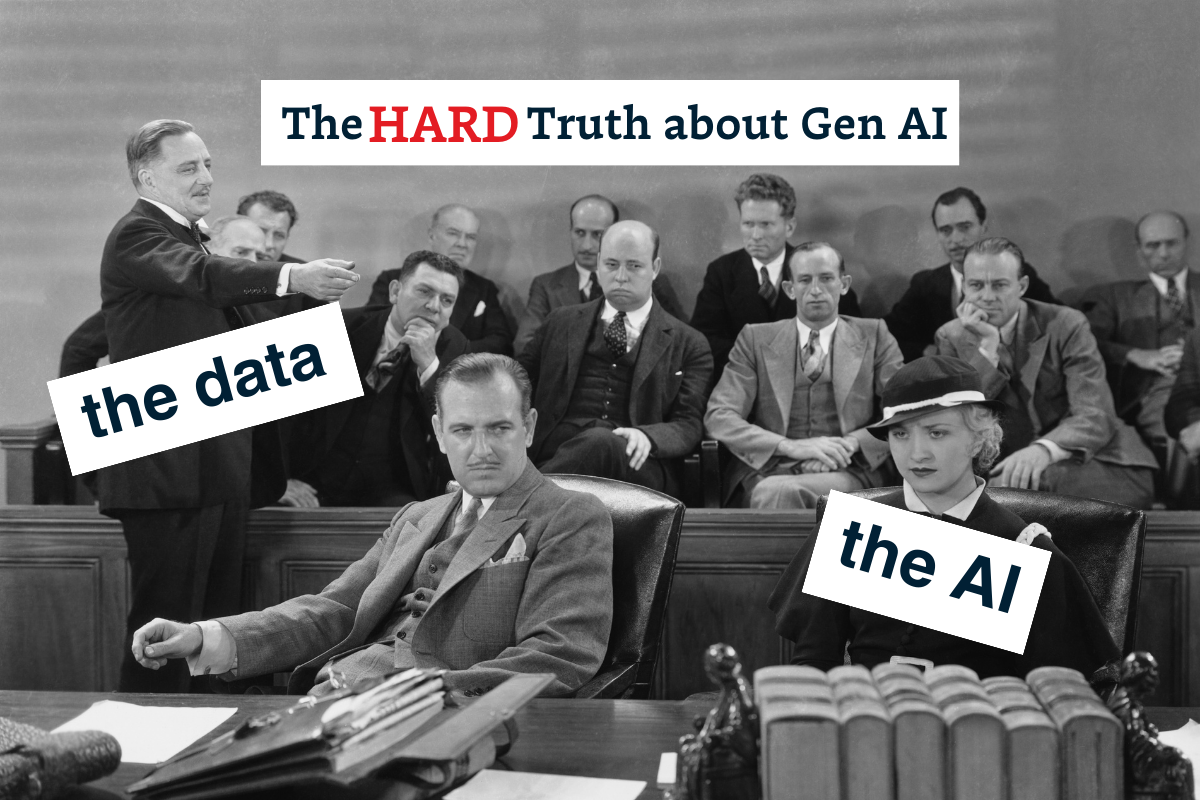

Unlike most applications, AI is very much a black box. We *know* what we’re putting in (raw, often unstructured data) and we *know* what we’re getting out, but we don’t know how it got there. And that’s difficult to scale, secure and operate.

Take GPT-4 for example. While GPT-4 blew GPT 3.5 out of the water when it came to some tasks (like taking SAT and AP Calculus AB exam), some of its outputs were riddled with hallucinations or lacked necessary context to adequately accomplish these tasks. Hallucinations are caused by a variety of factors from poor embeddings to knowledge cutoff, and frequently affect the quality of responses generated by publicly available or open LLMs trained on information scraped from the internet, which account for most models.

To reduce hallucinations and even more importantly – to answer meaningful business questions – companies need to augment LLMs with their own proprietary data, which includes necessary business context. For instance, if a customer asks an airline chatbot to cancel their ticket, the model would need to access information about the customer, about their past transactions, about cancellation policies and potentially other pieces of information. All of these currently exist in databases and data warehouses.

Without that context, an AI can only reason with the public information, typically published on the Internet, on which it was originally trained. And here lies the conundrum – exposing proprietary Enterprise data and incorporating it into business workflows or customer experiences almost always requires solid security, scalability and reliability.

The two routes to enterprise ready AI: RAG and fine tuning

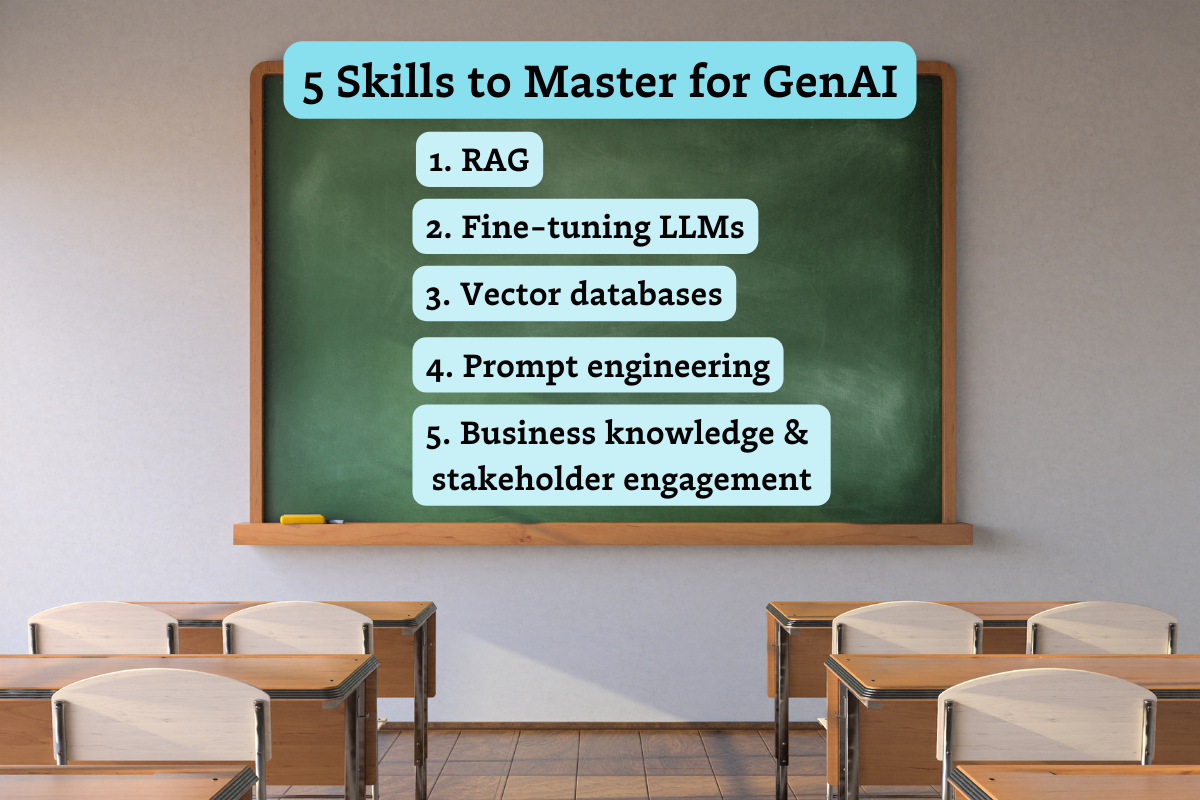

When it comes to making AI enterprise ready, the most critical parts come at the very end of the LLM development process: retrieval augmented generation (RAG) and fine tuning.

RAG integrates real-time databases into the LLM’s response generation process, ensuring up-to-date and factual output. Fine-tuning, on the other hand, trains models on targeted datasets to improve domain-specific responses.

It’s important to note, however, that RAG and fine tuning are not mutually exclusive approaches, and should be leveraged – oftentimes in tandem – based on your specific needs and use case.

When to use RAG

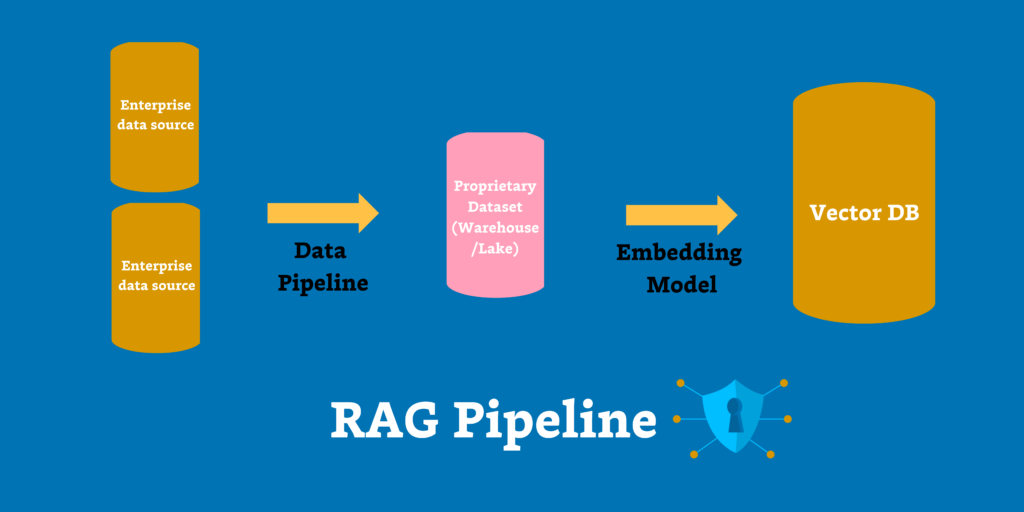

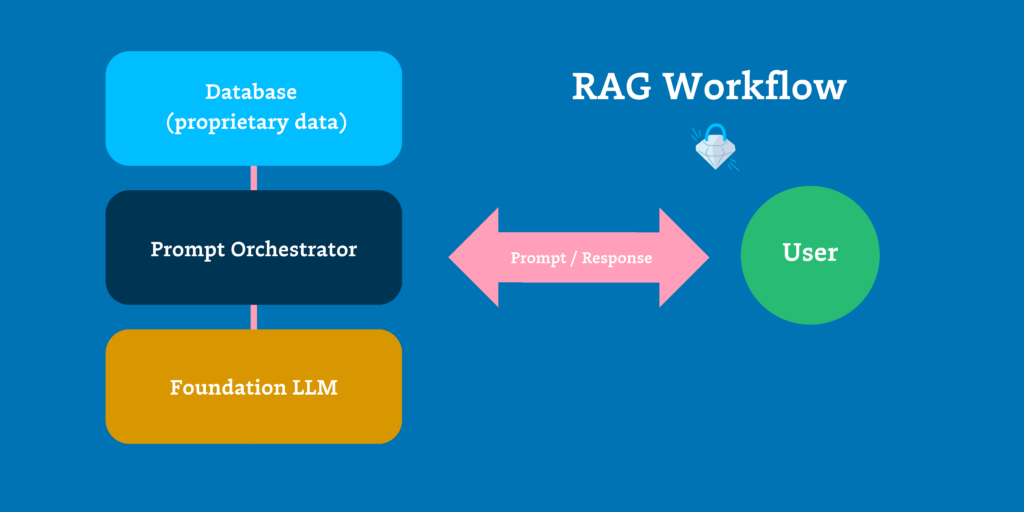

RAG is a framework that improves the quality of LLM outputs by giving the model access to a database while attempting to answer a prompt. The database – being a curated and trusted body of potentially proprietary data – allows the model to incorporate up-to-date and reliable information into its responses and reasoning. This approach is best suited for AI applications that require additional contextual information, such as customer support responses (like our flight cancellations example) or semantic search in your company’s enterprise communication platform.

RAG applications are designed to retrieve relevant information from knowledge sources before generating a response, making them well suited for querying structured and unstructured data sources, such as vector databases and feature stores. By retrieving information to increase the accuracy and reliability of LLMs at output generation, RAG is also highly effective at both reducing hallucinations and keeping training costs down. RAG also affords teams a level of transparency since you know the source of the data that you’re piping into the model to generate new responses.

One thing to note about RAG architectures is that their performance heavily relies on your ability to build effective data pipelines that make enterprise data available to AI models.

When to use fine tuning

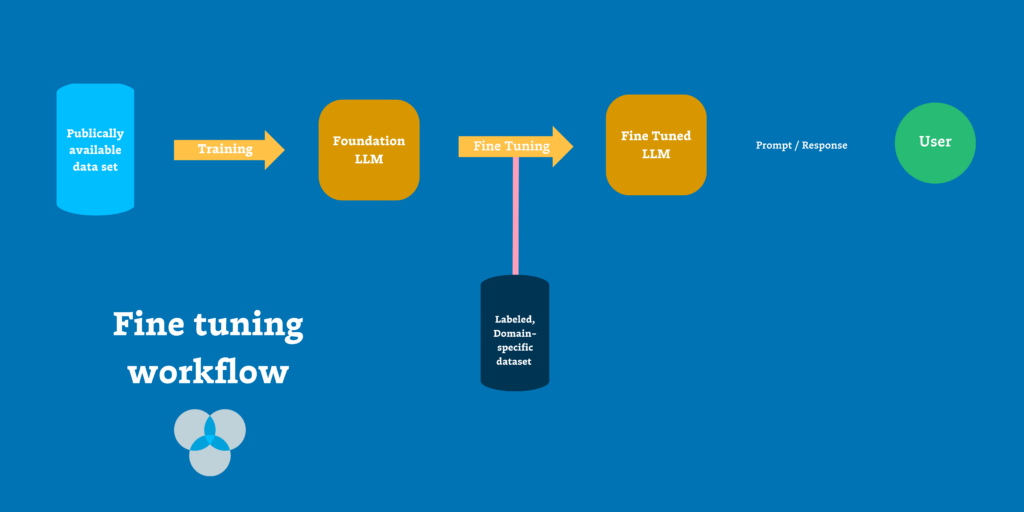

Fine tuning is the process of training an existing LLM on a smaller, task-specific and labeled dataset, adjusting model parameters and embeddings based on this new data. Fine tuning relies on pre-curated datasets that inform not just information retrieval, but the nuance and terminologies of the domain for which you’re looking to generate outputs.

In our experience, fine tuning is best suited for domain-specific situations, like responding to detailed prompts in a niche tone or style, i.e. a legal brief or customer support ticket. It is also a great fit for overcoming information bias and other limitations, such as language repetitions or inconsistencies. Several studies over the past year have shown that fine-tuned models significantly outperform off-the-shelf versions of GPT-3 and other publically available models. It has been established that for many use cases, a fine-tuned small model can outperform a large general purpose model – making fine tuning a plausible path for cost efficiency in certain cases.

Unlike RAG, fine tuning often requires less data but at the expense of more time and compute resources. Additionally, fine tuning operates like a black box; since the model internalizes the new data set, it becomes challenging to pinpoint the reasoning behind new responses and hallucinations remain a meaningful concern.

Fine tuning – like RAG architectures – requires building effective data pipelines that make (labeled!) enterprise data available to the fine tuning process. No easy feat.

Why RAG *probably* makes sense for your team

It’s important to remember that RAG and fine tuning are not mutually exclusive approaches, have varying strengths and weaknesses, and can be used together. However, for the vast majority of use cases, RAG likely makes the most sense when it comes to delivering enterprise Generative AI applications.

Here’s why:

- RAG security and privacy is more manageable: Databases have built-in roles and security unlike AI models, and it’s pretty well-understood who sees what due to standard access controls. Further, you have more control over what data is used by accessing a secure and private corpus of proprietary data. With fine tuning, any data included in the training set is exposed to all users of the application, with no obvious ways to manage who sees what. In many practical scenarios – especially when it comes to customer data – not having that control is a no-go.

- RAG is more scalable: RAG is less expensive than fine tuning because the latter involves updating all of the parameters of a large model, requiring extensive computing power. Further, RAG doesn’t require labeling and crafting training sets, a human-intensive process that can take weeks and months to perfect per model.

- RAG makes for more trusted results. Simply put, RAG works better with dynamic data, generating deterministic results from a curated data set of up-to-date data. Since fine tuning largely acts like a black box, it can be difficult to pinpoint how the model generated specific results, decreasing trust and transparency. With fine tuning, hallucinations and inaccuracies are possible and even likely, since you are relying on the model’s weights to encode business information in a lossy manner.

In my humble opinion, enterprise ready AI will primarily rely on RAG, with fine tuning involved in more nuanced or domain specific use cases. For the vast majority of applications, fine tuning will be a nice-to-have for niche scenarios and come into play much more frequently once the industry can reduce cost and resources necessary to run AI at scale.

Regardless of which one you use, however, your AI application development is going to require pipelines that feed these models with company data through some data store (be it Snowflake, Databricks, a standalone vector database like Pinecone or something else entirely). At the end of the day, if generative AI is used in internal processes to extract analysis and insight from unstructured data – it will be used in… drumroll… a data pipeline.

For RAG to work, you need data observability

In the early 2010s, machine learning was touted as a magic algorithm that performed miracles on command if you gave its features the perfect weights. What typically improved ML performance, however, was investing in high quality features and in particular – data quality.

Likewise, in order for enterprise AI to work, you need to focus on the quality and reliability of the data on which generative models depend – likely through a RAG architecture.

Since it relies on dynamic, sometimes up-to-the-minute data, RAG requires data observability to live up to its enterprise ready expectations. Data can break for any number of reasons, such as misformatted third-party data, faulty transformation code or a failed Airflow job. And it always does.

Data observability gives teams the ability to monitor, alert, triage, and resolve data or pipeline issues at scale across your entire data ecosystem. For years, it’s been a must-have layer of the modern data stack; as RAG grows in importance and AI matures, observability will emerge as a critical partner in LLM development.

The only way RAG – and enterprise AI – work is if you can trust the data. To achieve this, teams need a scalable, automated way to ensure reliability of data, as well as an enterprise-grade way to identify root cause and resolve issues quickly – before they impact the LLMs they service.

So, what is the de facto LLM stack?

The infrastructure and technical roadmap for AI tooling is being developed as we speak, with new startups emerging every day to solve various problems, and industry behemoths claiming that they, too, are tackling these challenges. When it comes to incorporating enterprise data into AI, I see three primary horses in this race.

The first horse: vector databases. Pinecone, Weaviate, and others are making a name for themselves as the must-have database platforms to power RAG architectures. While these technologies show a lot of promise, they do require spinning up a new piece of the stack and creating workflows to support it from a security, scalability and reliability standpoint.

The second horse: hosted versions of models built by third-party LLM developers like OpenAI or Anthropic. Currently, most teams get their generative AI fix via APIs with these up-and-coming AI leaders due to ease of use. Plug into the OpenAI API and leverage a cutting edge model in minutes? Count us in. This approach works great out-of-the-box if you need the model to generate code or solve well-known, non-specific prompts based on public information. If you do want to incorporate proprietary information into these models, you could use the built-in fine tuning or RAG features that these platforms provide.

And finally, the third horse: the modern data stack. Snowflake and Databricks have already announced that they’re embedding vector databases into their platforms as well as other tooling to help incorporate data that is already stored and processed on these platforms into LLMs. This makes a lot of sense for many, and allows data teams charged with AI initiatives to leverage the tools they already use. Why reinvent the wheel when you have the foundations in place? Not to mention the possibility of being able to easily join traditional relational data with vector data… Like the two other horses, there are some downsides to this approach: Snowflake Cortex, Lakehouse AI, and other MDS + AI products are nascent and require some upfront investment to incorporate vector search and model training into your existing workflows. For a more in-depth look at this approach, I encourage you to check out Meltano’s pertinent piece on why the best LLM stack may be the one sitting right in front of you.

Regardless of the horse we choose, valuable business questions cannot be answered by a model trained on the data that is on the Internet. It needs to have context from within the company. And by providing this context in a secure, scalable, and trusted way, we can achieve enterprise ready AI.

The future of enterprise AI is in your pipelines

For AI to live up to this potential, data and AI teams need to treat LLM augmentation with the diligence they deserve and make security, scalability and reliability a first-class consideration. Whether your use case calls for RAG or fine tuning – or both – you’ll need to ensure that your data stack foundations are in place to keep costs low, performance consistent, and reliability high.

Data needs to be secure and private; LLM deployment needs to be scalable; and your results need to be trusted. Keeping a steady pulse on data quality through observability are critical to these demands.

The best part of this evolution from siloed X demos to enterprise ready AI? RAG gives data engineers the best seat at the table when it comes to owning and driving ROI for generative AI investments.

I’m ready for enterprise ready AI. Are you?

Interested in learning how data observability plays a critical role in scaling enterprise ready AI? Reach out to Lior and the rest of the Monte Carlo team!

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage