Databricks Data + AI Summit 2023 Keynote Recap: LakehouseIQ, Delta Lake 3.0, and More!

Like the omnipresent San Francisco drizzle, Data + AI keynote attendees steadily trickled down the escalators and toward the Moscone Center Hall B + C.

We were greeted with conference pop/techno (our generation’s elevator music) and flashing neon lights. The music seems like a missed opportunity to remind us of the 90s theme for the celebration event taking place later tonight with Salt-N-Pepa, but I’m not an event planner for a reason.

Attendance at the conference and at the keynote has been surprisingly strong. My seatmates were turned away from sessions yesterday that were filled past capacity, and it’s standing room only in the keynote hall.

It turns out that media, vendors, and customers all took the same strategy of splitting up their teams to have a presence at both of the major data conferences taking place this week.

Keynote Kick-Off: Welcome To Generation AI

Following a puff of smoke and a red laser light show, Databricks CEO Ali Ghodsi stormed the stage.

The start included a few stumbles and over-promises about how the audience was going to use technology to “make everyone smarter, cure diseases, and raise the standard of living,” before the executive hit his stride. The opening message was that the audience didn’t need to fear the far-reaching and unpredictable impacts of AI because we are part of Generation AI. We are going to lead the revolution.

Ali then paid homage to the open source origins of Databricks and the conference. He thanked the community for their participation in making Spark, Delta, and MLflow a success. That drew applause, a reminder that there is still a real sense of community and a higher level of loyalty than you might find with other data vendors.

Ali then brought Microsoft CEO, Satya Nadella, onto the stage virtually, “via Skype.” The crowd was clearly excited, showing just what a rockstar Satya has become within tech circles.

Satya Nadella: The Importance of Responsible AI

Satya loomed large over the stage and continued the theme of the power of AI for good. You know a technology is powerful when the first two portions of the keynote are dedicated to easing anxieties and concerns before the specific applications and features are unveiled.

Satya discussed how Microsoft has been working on AI for a long time and was quick to jump on the AI revolution in part due to observations about its workload structure. AI training workloads are very data heavy and synchronous, so from an infrastructure perspective, Satya wanted to ensure that Azure was the best place for those profitable workloads.

He also talked about how he thinks about mitigating the possible negative impacts of Gen AI in three separate tracks.

- An immediate track focused on combating misinformation with technologies like watermarking and regulation around distribution.

- A medium-term track focused on how to ground models to prevent issues with cyber security and bio risks.

- A long-term track focused on making sure we don’t lose control of AI.

It was an avuncular discussion that positioned these issues largely as alignment challenges that can be solved in part by technology and the people attending the keynote.

Democratizing AI with the Lakehouse

Our MC, Ali, then returned to the stage to focus on the question of how AI can best be democratized. We heard the traditional Databricks thesis (which doesn’t make it any less revolutionary or insightful), that AI has been held back by two separate parallel worlds.

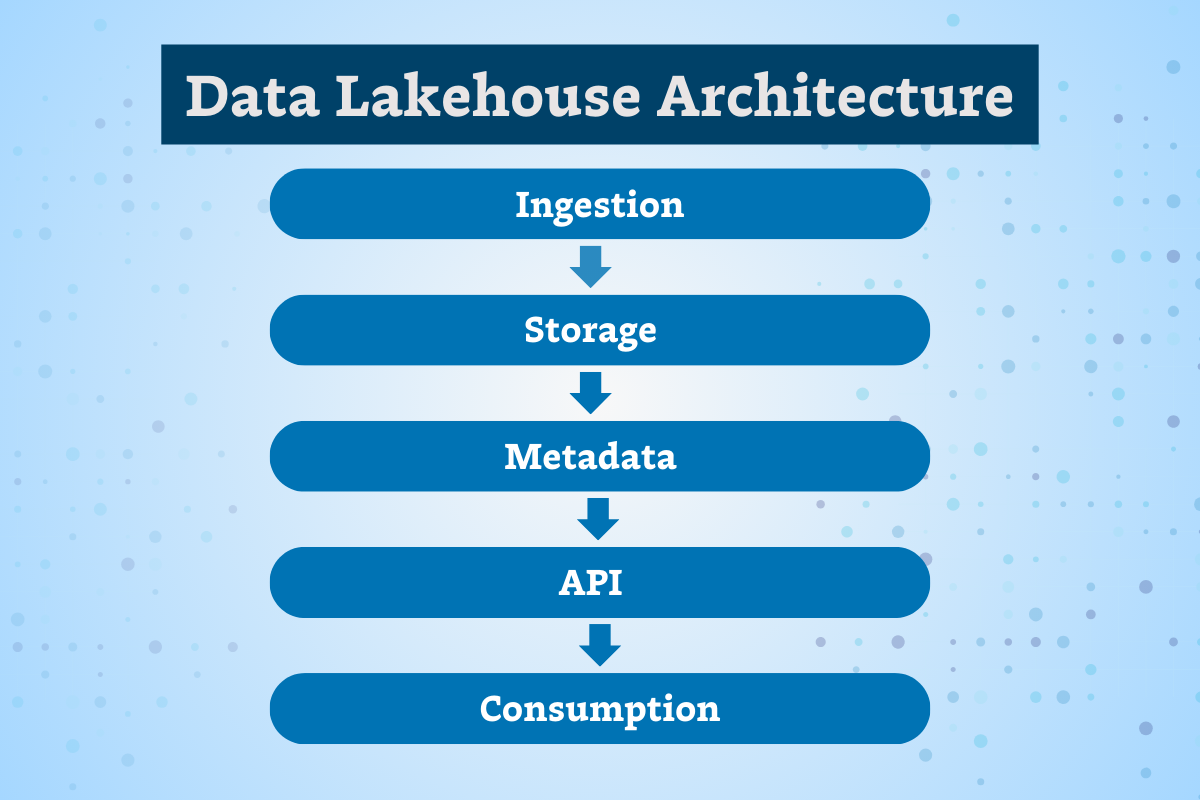

These are the world of data and the data warehouse that is focused on using structured data to answer questions about the past and the world of AI that needs more unstructured data to train models to predict the future. Databricks believes these worlds need to merge (and their strong preference would be that merger takes place in the Databricks Lakehouse).

He then shared the number of Lakehouse customers, 10,000 active users, for the first time in the company’s history. Again, the surprising applause break was a reminder that the audience really feels a bond of community thanks to Databricks’ open source roots.

JP Morgan Chase: Leveraging ML at Scale

Ali then welcomed Larry Feinsmith, head of global tech strategy at JPMC, to the stage for what felt like a relatively spontaneous fireside chat (for a major keynote that is).

Larry focused on a really important point that would be echoed by other Databricks speakers: the competitive advantage of ML and AI will be a company’s proprietary first party data (and intellectual property). Or as he put it, “What’s additive of [AI] is training it on our 500 petabytes, using our Mount Everest [of data] to put it to work for our customers.”

He also made some insightful points about cost efficiencies at scale, specifically a scale of 300 AI production use cases, 65 million digital customers, and 10 trillion transactions. From his perspective, this can only be done efficiently with platforms. At JPMC they leverage an ML platform called Jade for managed data and the InfiniteAI platform for data scientists.

Larry’s point was that the Lakehouse architecture is transformational because it means JPMC does not have to support two stacks and can move data around as little as possible.

Larry’s portion of the keynote also featured the biggest laugh of the day. He created a bit of tension in the room by claiming ChatGPT has made him the equal of all the Python, Scala, and SQL developers present, which Ali expertly defused later with a quick quip after Larry’s pitch for developer talent to join his team at JPMC: “You don’t even have to know programming, you only have to know English.” It was a nice line that reassured folks their programming skills weren’t obsolete just yet.

LakehouseIQ: Querying Data In English Via An LLM

Then came one of Databricks’ most ambitious feature announcements: LakehouseIQ.

Ali showed the evolution of how the Lakehouse started with technical users with Scala, then Python, and then SQL. The next step was all enterprise users in their native language.

He then passed the mic to Databricks CTO, Matei Zaharia, to introduce LakehouseIQ, which likely made the cornucopia of startups working on the same problem immediately start squirming.

Matei was very effective at pointing out how difficult the problem of querying data with natural language is, because each company has its own jargon, data, and domain structures. For a Databricks employee to ask the data “how many DBUs were sold in Europe last quarter,” the LLM needs to understand that a DBU is a unit Databricks uses for pricing, that Europe might be broken up into two sales regions, and when exactly Databricks’ quarters fall.

LakehouseIQ solves this by taking signals from how data is used in an organization, which it can do because of the information Databricks can pull from end users’ dashboards and notebooks. It works through Unity Catalog to understand these signals and build models to return a response. At the moment, LakehouseIQ works best as an interface that goes back and forth between English prompts, refined prompts, and the SQL/Python query that generates the data, but the end goal of only requiring English was clear.
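
To make the jargon problem concrete, here is a minimal Python sketch of the context a system would need before it could turn Matei’s DBU question into SQL. Everything in it is hypothetical: the glossary, the region names, the `sales` table and its columns, and the February fiscal-year start are invented for illustration, and none of it reflects how LakehouseIQ actually works internally.

```python
from datetime import date

# Hypothetical company-specific context; none of these values come from Databricks.
GLOSSARY = {"DBUs": "dbu_quantity"}                  # jargon -> column name
REGIONS = {"Europe": ["EMEA-North", "EMEA-South"]}   # one word -> two sales regions
FISCAL_YEAR_START_MONTH = 2                          # assumed: fiscal year starts in February


def last_fiscal_quarter(today: date) -> tuple[date, date]:
    """Return (start, end_exclusive) of the fiscal quarter before the one containing `today`."""
    # How many months we are into the current fiscal quarter (0, 1, or 2).
    months_into_quarter = ((today.month - FISCAL_YEAR_START_MONTH) % 12) % 3
    # Count months from year 0 so that subtracting across a year boundary is safe.
    current_start = today.year * 12 + (today.month - 1) - months_into_quarter
    previous_start = current_start - 3
    start = date(previous_start // 12, previous_start % 12 + 1, 1)
    end = date(current_start // 12, current_start % 12 + 1, 1)
    return start, end


def build_sql(metric: str, region: str, today: date) -> str:
    """Resolve jargon, regions, and the fiscal calendar into a plain SQL string."""
    column = GLOSSARY[metric]
    regions = ", ".join(f"'{name}'" for name in REGIONS[region])
    start, end = last_fiscal_quarter(today)
    return (
        f"SELECT SUM({column}) FROM sales "
        f"WHERE region IN ({regions}) "
        f"AND sold_on >= '{start}' AND sold_on < '{end}'"
    )


print(build_sql("DBUs", "Europe", date(2023, 6, 28)))
```

The point of the sketch is that all three lookups are organization-specific, which is why a general-purpose LLM cannot answer the question without the kind of signals described above.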

Both Matei and Ali stressed that LakehouseIQ is in its infancy and something Databricks is actively working on to improve, but the future of querying your data similar to how you might Google a question didn’t seem as far away as it did yesterday.

MosaicML: The Machine To Build Your GenAI Models

Ali then introduced MosaicML, the $1.3 billion acquisition Databricks announced earlier in the week, and its CEO Naveen Rao.

Naveen painted an exciting picture of just how accessible and affordable it is for companies to build their own Gen AI models. To Naveen (and Databricks), the future of Gen AI belongs to private models rather than the ChatGPTs and Bards of the world for three reasons: control, privacy, and cost.

To that end, he showed how an effective model of 7 billion parameters can be created in days for around $250,000, or a 30 billion parameter model for around $875,000. He then shared the story of Replit, the shared IDE, and how it produced its own state-of-the-art model in 3 days that acts as a coding co-pilot. As a recent Replit user, I can attest that the auto-complete and error highlights are very helpful.

The bigger picture here is that just like Databricks and Snowflake needed an easy way for users to create data applications in order to use their application store, they need an easy way for their users to create GenAI models to now host and monetize those on their marketplaces (but more on that later).

LakehouseAI: Vector Search, Feature Serving, MLflow Gateway

Databricks introduced cool new functionality as part of their new LakehouseAI feature. The technical labels might be a miss, as Databricks co-founder and speaker for this portion of the event, Patrick Wendell, remarked, “Let me explain what they mean and then the rest of you can clap.”

In a nutshell, LakehouseAI will help users solve three of the most difficult challenges in deploying AI models:

- Preparing the datasets for ML

- Tuning and curating the models

- Deploying and serving those models

The vector search and feature serving components will help unlock the value from unstructured data within an organization by auto-updating search indexes in Unity Catalog and accessing pre-computed features in real time to feed the model.

He then used the example of a customer support bot that would get the request “Please return my last order,” and understand when the order took place and the rules for returns so it could then say, “You made this order in the last 30 minutes, would you like me to cancel it for you?”
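
For readers who have not worked with these pieces, the support bot example can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the Databricks API: the three-dimensional “embeddings” are hand-written, the `FEATURES` table and customer id are hypothetical, and the 30 minute cancellation window is taken from the quote rather than from any documented rule.

```python
import math

# Toy, hand-written "embeddings"; a real system would get these from an embedding model.
DOCS = {
    "return_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "gift_cards": [0.0, 0.2, 0.9],
}

# Hypothetical pre-computed feature table, keyed by customer id (the "feature serving" part).
FEATURES = {"customer_42": {"minutes_since_last_order": 25}}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vec, k=1):
    """Return the names of the k documents most similar to the query (the "vector search" part)."""
    ranked = sorted(DOCS, key=lambda name: cosine(query_vec, DOCS[name]), reverse=True)
    return ranked[:k]


def answer(customer_id, query_vec):
    """Combine the retrieved topic with a fresh feature value to choose a reply."""
    topic = search(query_vec)[0]
    minutes = FEATURES[customer_id]["minutes_since_last_order"]
    if topic == "return_policy" and minutes <= 30:
        return "You made this order in the last 30 minutes, would you like me to cancel it for you?"
    return f"Here is what I found about {topic}."


# Pretend embedding of "Please return my last order".
query = [0.8, 0.2, 0.1]
print(search(query))
print(answer("customer_42", query))
```

A production system would replace the brute-force `sorted` call with an approximate nearest-neighbor index and would keep both the index and the feature values in sync with the source tables, which is the part Databricks says it automates.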

Patrick also talked about Databricks LakehouseAI features that allow users to easily compare and evaluate Gen AI models for specific use cases, pointing out that about 50% of Databricks customers are exploring SaaS models and 65% are exploring open source (meaning a decent percentage are exploring both types).
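
The “both types” inference is just inclusion-exclusion, and a short Python sketch shows the bounds. It assumes both percentages are measured over the same customer base, which the keynote did not spell out.

```python
def overlap_bounds(pct_a: float, pct_b: float) -> tuple[float, float]:
    """Smallest and largest possible share of customers counted in both groups."""
    lower = max(0.0, pct_a + pct_b - 100.0)  # the two groups cannot cover more than 100%
    upper = min(pct_a, pct_b)                # the overlap cannot exceed the smaller group
    return lower, upper


lower, upper = overlap_bounds(50.0, 65.0)
print(f"Between {lower}% and {upper}% of customers are exploring both")
```

So at least 15% of customers must be exploring both SaaS and open source models, and at most 50%.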

He then wrapped up by talking about how Databricks also supports the deployment of these models with GPU support for fine tuning AI models and Lakehouse Monitoring for monitoring drift and model quality. (Of course, it’s important to note that data observability platforms like Monte Carlo will play helpful roles in monitoring the underlying data quality and data pipeline performance.)

Following Patrick was a quick customer story vignette featuring JetBlue, one of the best data teams on the planet. Sai Ravuru, senior manager of data science and analytics, talked through how the company has used governance capabilities to govern how its chatbot will provide different answers based on the person’s role within the company.

He also discussed the critical operational decision support their BlueSky model is able to provide their front-line operational staff by simulating the possible outcomes of a decision over a 24 to 48 hour period in the future. Very cool stuff.

Better Queries With LakehouseAI

Ali returned to the stage in a brief interlude to provide some updates on usage across the Lakehouse:

- Delta Live Tables have grown 177% in the last 12 months. The momentum of streaming applications is lost a bit in the hype of Gen AI.

- Databricks Workflows reached 100 million weekly jobs and are processing 2 exabytes of data per day.

- Databricks SQL, the company’s data warehouse offering, has had 4,600 customers actively using it since General Availability and is reaching about 10 percent of Databricks’ revenue.

This was a nice transition to bringing Reynold Xin, another Databricks cofounder, to the stage to talk about how the lakehouse is also actually an awesome data warehouse thanks to LakehouseAI.

Reynold started with a warning that it was about to get technical, but he did a good job of not getting too deep in the weeds. He framed the problem the LakehouseAI engine can solve very well with a quote from a 1970 paper on database technology (OK maybe it did get a little technical):

“Future users of large data banks must be protected from having to know how the data is organized in the machine.”

He then asked who had to partition or cluster a table and if that work actually led to an increase rather than decrease in cost. Naturally, most of the room raised their hands.

He then discussed the tradeoffs implicit in data warehouse query optimizers, which can deliver only two of three: fast, easy, or cheap. He walked through a quick explainer of how data warehouse queries work, but the punchline was that the process is overly simplistic and problematic…but also a great problem to be solved by AI.

He then pointed out how Databricks has more data on more diverse workloads than any other company. Databricks took this huge amount of query patterns to train what they call PredictiveIO to “reinvent data warehousing.” Essentially, their model predicts where the data might be and speeds up queries without manual tuning. The numbers Reynold cited were Merge 2-6x faster and Delete 2-10x faster than other services.

There is also an automatic data layout optimization component that thinks about how to cluster and partition based on your workloads and picks the right layout for you with automatic file size selection, clustering, run optimization, and more. The numbers here were 2x faster queries and 50% storage savings.

Finally, Reynold talked about smart workload management and how AI can help ensure that when a small job and a large workload are running on the same endpoint, users don’t have to worry about the large workload slowing down the smaller one.

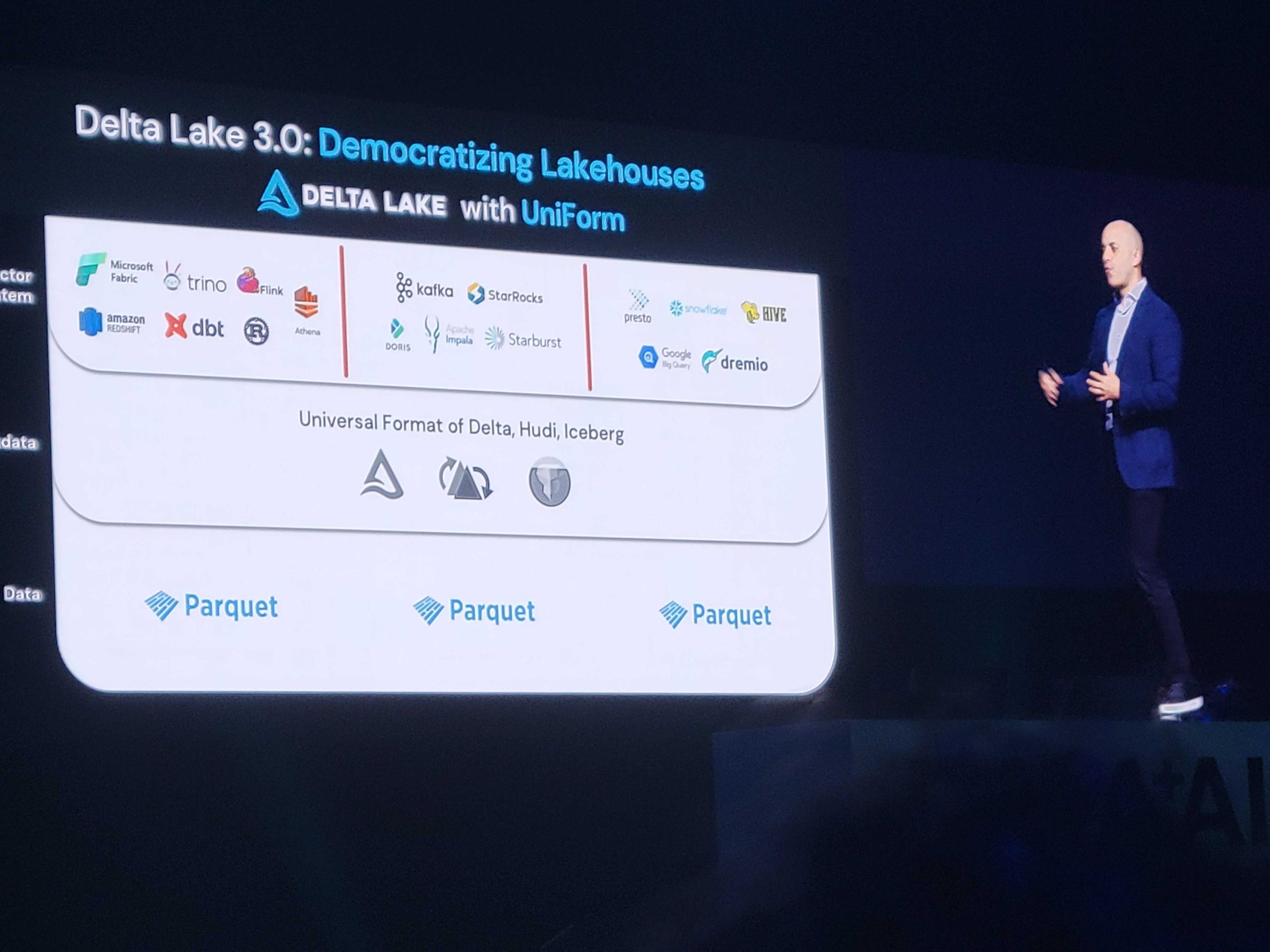

Delta Lake 3.0 and UniForm

Ali came back on stage to share what was one of the most surprising announcements. Yesterday, the data world (outside of data warehouses) essentially had to choose between Delta, Hudi, or Iceberg table formats. Your choice essentially locked you into that table’s ecosystem, despite the fact that under the hood all of those table formats are run on Parquet.

Today, with Delta Lake 3.0, when you write to a Delta table you will now create the underlying metadata in all three formats: Delta, Hudi, and Iceberg. It’s a big step forward that the community will love. Ali hinted at Databricks’ motivation, “Now everyone can just store their data and do AI and all these things with it,” i.e. let’s get rid of the friction and get to the cool stuff!
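
As a rough idea of what turning this on might look like, the sketch below builds a `CREATE TABLE` statement as a plain Python string. The table name and columns are hypothetical. The `delta.universalFormat.enabledFormats` table property is the name as I understand it from the Delta Lake documentation, so treat it as an assumption and verify it, along with the list of accepted formats and any companion properties, against the docs for the release you run.

```python
def uniform_create_table_sql(table: str, formats: list[str]) -> str:
    """Build a CREATE TABLE statement that asks Delta to also write other formats' metadata.

    Assumption: the property name below matches the Delta Lake release in use,
    and that release may require additional table properties not shown here.
    """
    enabled = ",".join(formats)
    return (
        f"CREATE TABLE {table} (id BIGINT, amount DOUBLE) USING DELTA "
        f"TBLPROPERTIES ('delta.universalFormat.enabledFormats' = '{enabled}')"
    )


sql = uniform_create_table_sql("sales_orders", ["iceberg"])
print(sql)
# On a cluster with a compatible Delta Lake version, the string could be run with spark.sql(sql).
```

The data files stay as Parquet either way; only the extra metadata changes, which is why readers for the other formats can pick the table up without a copy.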

The New Public Preview Features For Unity Catalog

This time Matei returned to the stage to talk about the new features of Unity Catalog including Lakehouse Federation, Lakehouse Monitoring, and an MLflow model registry. These were described in the buckets of: enterprise wide reach, governance for AI, and AI for governance.

In summary, Lakehouse Federation will help you discover, query, and govern your data wherever it lives. It will connect external data sources to Unity Catalog and set policies across them. In the near future, it will even push those policies down into warehouses like MySQL.

The MLflow model registry is self-explanatory, and Lakehouse Monitoring will make data governance simpler with AI for quality profiling, drift detection, and data classification.

The Conclusion: Delta Sharing and Lakehouse Apps

Delta Sharing and Lakehouse Apps, the stars of last year’s show, were also quickly highlighted. Creating the “iPhone app store” but for data is a key foundation for Databricks’ go-to-market strategy.

The typical benefits of not having to move data and avoiding security reviews were extolled, but the big news here was the ability to not only offer/monetize datasets and notebooks, but now AI models (thanks to MosaicML).

Rivian then essentially wrapped up the Databricks keynote, which was an interesting choice. They discussed how data and AI revolutionized their business model and will help them create more reliable cars and help their customers predict needed maintenance.

Finally, after more than two hours, Ali returned to the stage a final time for a quick recap along with a plug for attendees to “go out and get our electric adventure vehicle.”

Wondering how data observability can help your AI and ML model development on Databricks? Talk to us!
