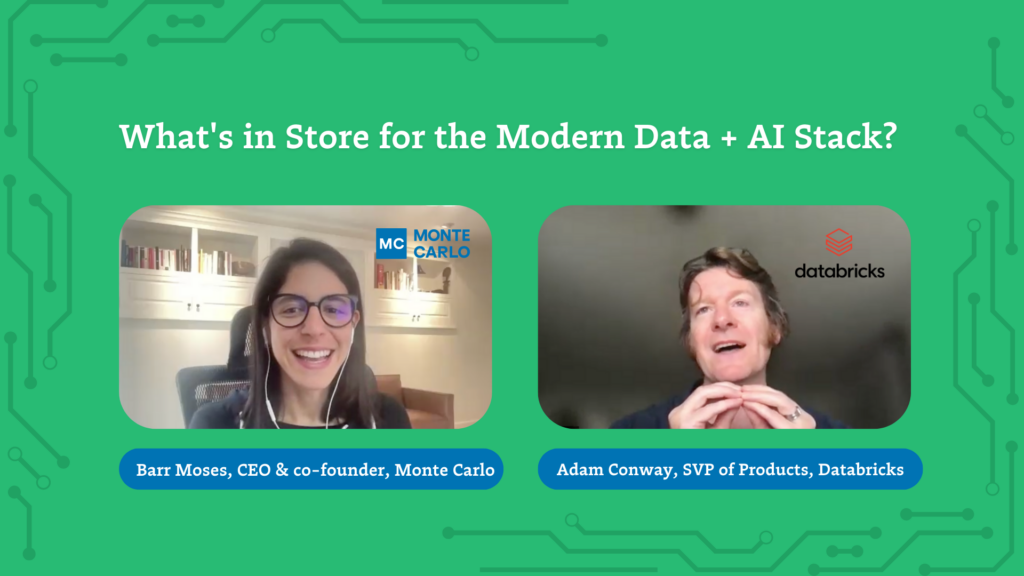

What’s Next for the Modern Data and AI Stack? 5 Predictions from Databricks’ SVP of Products, Adam Conway

When it comes to the intersection of data engineering and AI, longtime data leader Adam Conway has a particularly focused point of view. Adam was a co-founder of Aerohive Networks and the open source AI project Donkey Car and, since 2018, has been a product leader at Databricks.

As Senior Vice-President of Products, Adam has led the development of machine learning, data engineering, and data science products at the company that introduced the data lakehouse architecture to thousands of enterprise organizations. And at the recent Data + AI Summit, Databricks leaders announced their ambitious LakehouseIQ, a new feature that aspires to make it possible to query your data in plain language, and announced the acquisition of Mosaic AI, a leading enterprise AI vendor.

So, much like everyone else in our industry, Adam’s had one particular topic on his mind lately: generative AI. But thanks to his unique position, he’s immersed in thinking specifically about how generative AI can help businesses get more value out of their data. Since this is a topic near and dear to our own mission at Monte Carlo as the leader in data observability and a Databricks partner, we recently sat down with Adam to get his take on how AI will transform the modern data and AI stack as we know it, as well as the factors contributing to the data team’s ability to practically – and meaningfully – harness AI.

One of the biggest drivers of AI success in Adam’s perspective? Data reliability.

Adam views data quality both as an enabler of better AI AND AI as the ticket to higher quality data: “I’m hoping we achieve both sides: one, we get very high-quality data, and we get the ability to ask those questions about our data.”

In fact, at Databricks, Adam suggests that partnering with Monte Carlo has given them the ability to build data observability across enterprises and dramatically improve reliability. “I think data teams should know: if you’re working on Databricks and Monte Carlo, we have a great opportunity to improve data quality together.”

Here are his top five predictions, including where companies will start seeing value from AI first, how open-source models will shape the landscape, and the ever-present need to ensure data quality.

- Generative AI has enormous potential — but we’re just getting started

When we first started to see what generative AI models were capable of in 2022, the conversation centered on one question: Is this superintelligence?

According to Adam, the answer is no.

“These models don’t know everything, and they struggle with reasoning in some places. But they are extremely useful for certain tasks, like coding and summarization. We’re still in the infancy of generative AI, and we have some examples of how to use it, but very few applications are taking advantage of its potential.”

Adam and his team at Databricks see this as an opportunity for innovation, and they’re focused on how they can help enterprises move their applications to be AI-enabled. One clear first step: allowing companies to use AI to perform queries and answer questions about their data.

“I’ve seen examples of industries with huge amounts of documentation that want to enable their customer support or internal teams to retrieve answers out of tens of thousands of pages of records,” said Adam. “That’s the right approach, because the risk is low — it allows you to get your hands dirty, provides a lot of value, and doesn’t create a lot of risk. At Databricks, we have an internal chatbot that helps employees figure things out and look at their data. And we see a lot of value there.”
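To make the "retrieve answers out of tens of thousands of pages" idea concrete, here is a minimal sketch of the retrieval step alone, assuming a plain term-overlap score. The page IDs, sample text, and the function names `tokenize`, `score`, and `retrieve` are hypothetical illustrations, not anything Adam or Databricks described; a production system would more likely use embeddings and pass the retrieved pages to a language model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question: str, page: str) -> int:
    """Count how often the question's distinct terms occur in the page."""
    question_terms = set(tokenize(question))
    page_counts = Counter(tokenize(page))
    return sum(page_counts[term] for term in question_terms)


def retrieve(question: str, pages: dict[str, str], top_k: int = 2) -> list[str]:
    """Return up to top_k page IDs with a non-zero score, best match first."""
    ranked = sorted(pages, key=lambda pid: score(question, pages[pid]), reverse=True)
    return [pid for pid in ranked[:top_k] if score(question, pages[pid]) > 0]


# Hypothetical support documentation, keyed by page ID.
PAGES = {
    "returns-policy": "Customers may return a product within 30 days for a refund.",
    "shipping": "Standard shipping takes five business days.",
    "warranty": "The warranty covers hardware defects for one year.",
}

best_pages = retrieve("How long does shipping take?", PAGES, top_k=1)
```

Pages that share no terms with the question are dropped rather than returned as weak matches, which fits the low-risk framing in the quote: it is better to return nothing than to surface an irrelevant page.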

- Generative AI won’t disrupt the modern data stack – just enhance it

Adam sees generative AI as a natural complement to the data lakehouse — not a disruptor.

“From the beginning, the lakehouse started with the concept of combining the world of data scientists and AI — which is often messy and has poor governance — with data warehousing, which is structured,” said Adam. “The lakehouse provides the best of both worlds. And even before ChatGPT came out, hundreds of our customers were already using transformer models on Databricks.”

Specifically, he believes generative AI will bring a new class of users to the data lakehouse by drastically improving convenience. “Now that you can go into your data and just ask questions to get answers, that becomes a very appealing thing to new business users who are more used to looking at dashboards than interacting with data platforms.”

Databricks’ LakehouseIQ provides exactly this kind of question-and-answer interaction with data through a chat-like interface. “Now, it’s no longer a data scientist or data analyst looking at the data and providing insights. It’s now a model that’s able to take that data and provide it to an end user.”

While that does raise some concerns around data accuracy (more on that in a moment), the potential for enterprise organizations to see more value from their data is enormous. “The ability of AI to answer questions, understand jargon, and understand organizational nuances is working — and we’re very excited.”

- Open-source approaches will expedite LLM development

While ChatGPT may have dominated the headlines for the first half of 2023, Adam predicts that the landscape of large language models will be broad and diverse — not monopolized by a single company or a single model.

“A huge set of innovations over the last five years have made it possible for others besides OpenAI to create high-quality models,” said Adam. “Basically everybody who was somewhat related to AI are now building generative AI models. It’s going to create a ton of innovation, and it’s going to be hard for an individual company with hundreds of engineers to compete with the whole world of researchers who are now creating advanced AI models.”

Adam points to a few examples: “Llama 2 is a phenomenal model. The new Anthropic models are pretty remarkable. [Mosaic] MPT 30B is amazing, at a much smaller size. It’s becoming clear that smaller models are getting much better, and the smaller they get, the easier they are to serve.”

However, Adam warns that the open-source community will face a challenge when it comes to accessing the computing power required for training these models. “There will be tension between how many GPUs can people get to train models versus these large companies. There’s going to be scarcity for a time, until GPUs become more readily available.”

- Data reliability and governance will be critical for LLM success

As we mentioned earlier, Adam recommends that every data team begin implementing AI by using it to let consumers query data in an approachable, scalable way. But when teams provide more data access to people within an organization who don’t understand the underlying data pipelines, it opens the door to potential issues, especially if the data isn’t accurate and reliable.

“If you produce a bunch of bad results, you potentially create a customer issue or an internal perception issue,” said Adam. “You might give people bad data and they might make bad decisions. These are very real problems that are going to happen, because there’s not somebody in between the consumer and the data that’s able to look at that.”

This concern is not new to data teams. And Adam sees it as simply the next step in the evolving challenge of ensuring quality within an organization. “To use another hot topic word, this is a data product problem. We talk about data products like dashboards, a set of tables, or a set of views. Now, when we introduce an interface people talk to, that similarly increases people’s expectations on that data. And people are tired of broken data products. Of course everybody makes sure the CEO’s dashboard works, but if you need to go and find some piece of information and that turns into a several-day ordeal with a set of tickets, it just lowers confidence across the organization.”

However, Adam also predicts that AI has the potential to help improve data quality efforts. “If you produce bad data, or the data’s different, there’s also an opportunity with these AI assistants to say that this data doesn’t seem right. We can ask the model what’s happening. We can learn about a job that failed to run last night, or how a bunch of nulls in our bronze table propagated through and broke the pipeline.”
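The "bunch of nulls in our bronze table" scenario can be illustrated with a minimal sketch of a null-rate check, assuming the bronze table has been loaded as a list of row dictionaries. The column names, sample rows, threshold, and the functions `null_rates` and `columns_over_threshold` are all hypothetical; this is not the Databricks or Monte Carlo API, only the kind of signal an assistant or monitor could surface.

```python
from typing import Any


def null_rates(rows: list[dict[str, Any]]) -> dict[str, float]:
    """Return the fraction of rows in which each column is missing or None."""
    if not rows:
        return {}
    columns = {col for row in rows for col in row}
    return {
        col: sum(1 for row in rows if row.get(col) is None) / len(rows)
        for col in sorted(columns)
    }


def columns_over_threshold(
    rows: list[dict[str, Any]], max_null_rate: float = 0.1
) -> list[str]:
    """List the columns whose null rate exceeds the allowed maximum."""
    return [col for col, rate in null_rates(rows).items() if rate > max_null_rate]


# Hypothetical bronze-layer rows; None stands in for a null value.
bronze_rows = [
    {"order_id": 1, "customer_id": "c-17", "amount": 42.0},
    {"order_id": 2, "customer_id": None, "amount": 13.5},
    {"order_id": 3, "customer_id": None, "amount": None},
    {"order_id": 4, "customer_id": "c-09", "amount": 7.25},
]

suspect_columns = columns_over_threshold(bronze_rows, max_null_rate=0.3)
```

Running a check like this before downstream tables are built means a spike in nulls is flagged at the source rather than discovered after it has broken a pipeline.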

- Data observability will help shape what comes next

Adam sees data quality as a crucial challenge for data-driven companies to solve as we enter this next phase of the modern data + AI stack.

“It’s more and more critical for enterprises to make data decisions, and we’re seeing companies that are investing in data platforms are performing better than those companies that don’t. It really comes down to, Are you building a culture within your organization where everybody can feel confident that they can access that data reliably? If we want to build data-forward companies, we have to do that with reliable data and high-quality data products.”

Among Databricks customers, Adam has seen great success at improving quality and reliability when teams integrate their lakehouse with a data observability solution like Monte Carlo.

Start improving data quality across your lakehouse

Forward-thinking teams at data-driven companies like Comcast, BairesDev, Abacus Medicine, and more are achieving data reliability with Monte Carlo and Databricks. By layering our data observability solution across their stack, including Databricks’ Delta Lake and Unity Catalog, these teams are able to proactively detect and resolve data quality incidents before they impact downstream data products and consumers.

Learn how Databricks and Monte Carlo’s mutual customers are achieving data reliability — and contact us to start preparing your data stack for the arrival of AI.
