7 Data Quality Checks in ETL Every Data Engineer Should Know

Whether by mistake or entropy, anomalies are bound to occur as your data moves through your production pipelines. With the right data quality tests in place, you can identify and fix these issues in real-time and build a strong foundation for data reliability.

In this blog post, we’ll discuss seven common data quality tests that you can perform during the ETL (Extract, Transform, Load) process to validate your data. We’ll also highlight the challenges of performing these tests and explore an alternative approach: data observability.

The seven must-have data quality checks in ETL:

- NULL values test

- Volume tests

- Numeric distribution tests

- Uniqueness tests

- Referential integrity test

- String patterns

- Freshness checks

- Data Observability: The Key to Scaling Data Quality

NULL values test

Checking for NULL values is a basic, but essential data quality check. It ensures no data is missing from any required fields.

Imagine a company that sells online courses. The company collects data on its customers, including their names, email addresses, and course preferences. One day, the company’s data team notices that they are getting fewer course sign-ups than expected. They decide to analyze their customer data to see if they can identify any trends or issues. However, when they start their analysis, they realize that there are many NULL values in the data for the “course preferences” field. This is because the field was not mandatory when customers were signing up for courses, so many people left it blank.

Without checking for NULL values, the company’s analysis is incomplete and they are unable to accurately identify any trends or issues. Moreover, this can lead to flawed decision-making, such as investing in the wrong courses or marketing to the wrong audience.

One excellent out-of-the-box test for uncovering NULL values is dbt’s generic not_null test.

{% test not_null(model, column_name) %}

select *

from {{ model }}

where {{ column_name }} is null

{% endtest %}

This test can become tedious, however, if there are many NULL values in the data, as it then requires manually checking and fixing each one.

Volume tests

Ensuring the data volume is within expected limits is another important data quality check. If the batch update regularly comes in at 200 rows and suddenly jumps to 2,000 or drops to 2 that could be a reflection of real world behavior, or it could be the result of a mistake made during a query change (or thousands of other ways data can break). Either way, you want to know.

Imagine a company that provides customer service through a website and a mobile app. The company collects data on customer interactions, including the date, time, and type of interaction. One day, the company’s data team notices that the website is running slowly. They decide to analyze their customer interaction data to see if they can identify any trends or issues. However, when they start their analysis, they realize that the volume of data is much lower than expected. This is because the data collection system was not properly configured and not every interaction on the website was tracked.

Not checking the volume of the data could lead to flawed decision-making, such as investing in unnecessary infrastructure upgrades, when in fact the existing system just wasn’t configured properly.

Volume tests can be difficult to perform if the data volume is constantly changing or if there are no established baselines for comparison.

Numeric distribution tests

Checking the distribution of numeric data is important for ensuring the data is accurate and representative. If you don’t check the distribution, you may end up with skewed or biased results that don’t accurately reflect the underlying data.

Imagine a company that sells a subscription-based product. The company collects data on its customers, including their ages, genders, and subscription lengths. One day, the company’s data team notices that the average subscription length has been decreasing over time. They decide to analyze their customer data to see if they can identify any trends or issues. However, when they start their analysis, they realize that the distribution of ages is not representative of their customer base. It turns out their data collection system was not properly configured and had been collecting data from both the website and a third-party vendor, resulting in skewed data.

Without checking the distribution of numeric data, they may mistakenly conclude that there is no correlation between age and subscription length, when in reality, the data is simply skewed. This can lead to flawed decision-making, such as targeting the wrong age group in marketing campaigns or developing the wrong product features. By performing a numeric distribution test, the company would have been able to identify the skewed data and come to a more accurate conclusion.

One great example of an out-of-the-box distribution test is dbt’s accepted_values test, which allows the creator to define a range of acceptable distribution values for a given column.

However, this test can be time-consuming if there are a large number of numeric fields to check.

Uniqueness tests

Ensuring that there are no duplicate records in the data is essential for maintaining data integrity. If you don’t check for duplicate records, you may end up with multiple copies of the same data, which can lead to confusion and inaccurate results.

Imagine a company that operates a loyalty program for its customers. The company collects data on its customers, including their names, email addresses, and loyalty points. One day, the company’s data team notices that the loyalty points balance for some customers is much higher than expected. They decide to analyze their customer data to see if they can identify any trends or issues. However, when they start their analysis, they realize that there are many duplicate records in the data.

Without checking for unique records, the company’s analysis could lead to flawed decision-making, such as targeting the wrong customers in marketing campaigns or developing the wrong loyalty program features. By performing a uniqueness test, you’d be able to identify the duplicate data and come to a more accurate conclusion.

However, this test can be difficult to perform if the data is large or if there are many fields to check for uniqueness.

Referential integrity test

Checking that foreign keys in the data match the corresponding primary keys is crucial for maintaining the relationships between different data sets. If you don’t check for referential integrity, you may end up with broken relationships between data sets, which can lead to incorrect conclusions.

Imagine an online store that has a “customers” table and an “orders” table. The “customers” table has a primary key called “customer_id” and the “orders” table has a foreign key called “customer_id” that links each order to a customer.

For whatever reason if the “customer_id” values in the “customers” table don’t match the “customer_id” values in the “orders” table, the company then has incorrect relationships between the data sets. By performing a referential integrity test, the company would have been able to identify the incorrect relationships, fix them, and come to a more accurate conclusion.

Known as the relationships test in dbt, referential integrity tests ensure that any data reflected in a child table has a corresponding parent table.

However, this test can be challenging if the data is coming from multiple sources or if there are many relationships to check.

String patterns

Checking that string data follows the expected pattern is important for ensuring the data is accurate and consistent.

String pattern tests can be used to validate a variety of common patterns like UUIDs, phone numbers, emails, numbers, escape characters, dates, etc.

For example, you can use regular expressions to search for patterns that match the structure of an email address (e.g. [a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}). If any records in the dataset contain a string that matches this pattern, it is likely that the string is an email address. This test can help identify any invalid email addresses in the data set.

However, this test can be difficult to perform if the data is large or if there are many string fields to check.

Freshness checks

If you don’t check the freshness of your data, you may end up using outdated or stale data, which can lead to incorrect conclusions and poor decision-making.

Freshness checks validate the quality of data within a table by monitoring how frequently that data is updated against predefined latency rules, such as when you expect an ingestion job to load on any given day.

Freshness tests can be created manually using SQL rules, or natively within certain ETL tools like the dbt source freshness command.

This test can be challenging if the data is coming from multiple sources or if the data is updated frequently.

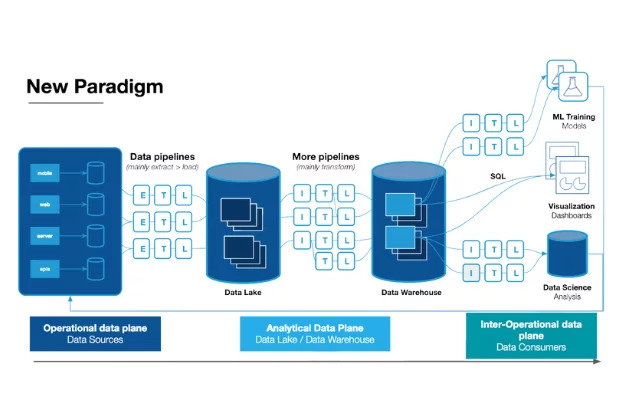

Data Observability: The Key to Scaling Data Quality

As your company’s data needs grow, your manual testing program will struggle to keep up. You’ll move to automate tests, but even then there’s extensive lift required. Your data team needs to continue updating the tests, writing new ones, and deprecating old ones as your data ecosystem grows and data evolves. It won’t be possible to account for every possible issue.

That’s where data observability comes in.

Data observability is more effective than testing because it accounts for the unknown unknowns. As your pipelines become more complex, so will the data quality issues that cannot be predicted. A data observability platform like Monte Carlo deploys ML-powered anomaly detection to automatically detect, resolve, and prevent incidents.

Data observability should be at the heart of your data stack, providing the holistic framework necessary for true end-to-end reliability.

Interested in scaling data quality with data observability? Reach out to Brandon and the rest of the Monte Carlo team to learn more by picking an open time in the form below.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

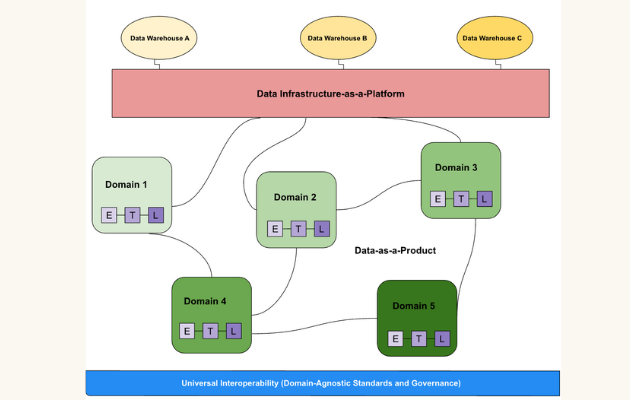

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage