What are data clean rooms? The best place to share without really sharing

Data is pretty useless if you can’t act on it, but how do you share insights without crossing privacy lines or worrying that your counterparty will mishandle the data? The old days of “just send them everything and see what they find” were fun, but we now live in an era where privacy regulations like GDPR and CCPA rule the roost. What’s a company to do?

Enter the world of data clean rooms – the super secure havens where you can mix and mingle data from different sources to get insights without getting your hands dirty with the raw data.

The idea is that by doing all the fun analytical stuff in this squeaky-clean space, you can make sure none of the sensitive bits (think: user preferences and purchase histories) get exposed.

Keep reading to learn how data clean rooms work, which leading providers to consider, and how to avoid spurious insights by embracing data observability.

How data clean rooms work

Data clean rooms let multiple parties combine and analyze their data without any party directly accessing the others’ raw data. Instead, only aggregated insights are extracted from the combined data, so individual data points, which could be sensitive or private, are never exposed.
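To make the aggregation idea concrete, here is a minimal Python sketch of one common safeguard: a minimum aggregation threshold, where results are returned only for groups that contain at least a set number of distinct users. The threshold value, the `aggregate_with_threshold` helper, and the sample rows are all hypothetical; real clean rooms enforce rules like this inside the platform, and the exact mechanics differ by provider.

```python
from collections import defaultdict

# Hypothetical threshold: suppress any group with fewer distinct users than this.
MIN_USERS = 3


def aggregate_with_threshold(rows, group_key, value_key, id_key="user_id", min_users=MIN_USERS):
    """Sum value_key per group, returning only groups with at least min_users distinct users."""
    users = defaultdict(set)
    totals = defaultdict(float)
    for row in rows:
        group = row[group_key]
        users[group].add(row[id_key])
        totals[group] += row[value_key]
    # Small groups are dropped entirely, since their aggregates could identify individuals.
    return {
        group: {"users": len(ids), "total": totals[group]}
        for group, ids in users.items()
        if len(ids) >= min_users
    }


rows = [
    {"user_id": "u1", "region": "north", "spend": 20.0},
    {"user_id": "u2", "region": "north", "spend": 25.0},
    {"user_id": "u3", "region": "north", "spend": 15.0},
    {"user_id": "u4", "region": "south", "spend": 40.0},
]

result = aggregate_with_threshold(rows, "region", "spend")
print(result)  # {'north': {'users': 3, 'total': 60.0}}
```

The south group is suppressed because a single-user "aggregate" would effectively reveal that one user’s spend.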

To achieve this, layers of encryption, access controls, and firewalls are must-haves to ensure that the data remains protected against external breaches and internal misuse.

Once the data clean room is secure, it then needs to be capable of integrating multiple data sources. Whether it’s customer data from CRM systems, web analytics, or sales data, a well-functioning clean room should integrate them all seamlessly with a high degree of interoperability.
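One common way to line up records from different sources without exchanging raw identifiers is to normalize a shared key, such as an email address, and pseudonymize it with a keyed hash before matching. The Python sketch below assumes both parties hold a shared secret (`SHARED_KEY`, hypothetical) and uses HMAC-SHA256 from the standard library; a plain unkeyed hash of an email is easier to reverse by hashing guessed addresses. Field names and data are illustrative only.

```python
import hashlib
import hmac

# Hypothetical secret agreed between the two parties out of band.
SHARED_KEY = b"example-shared-secret"


def pseudonymize(email: str, key: bytes = SHARED_KEY) -> str:
    """Normalize an email and return its HMAC-SHA256 hex digest."""
    normalized = email.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustrative records from two different sources.
crm = [
    {"email": " Alice@Example.com", "lifetime_value": 300},
    {"email": "bob@example.com", "lifetime_value": 120},
]
web = [
    {"email": "alice@example.com", "sessions": 12},
    {"email": "carol@example.com", "sessions": 3},
]

crm_by_key = {pseudonymize(r["email"]): r["lifetime_value"] for r in crm}
web_by_key = {pseudonymize(r["email"]): r["sessions"] for r in web}

# Only pseudonymized keys are compared; raw emails are never matched directly.
matched_keys = crm_by_key.keys() & web_by_key.keys()
joined = [
    {"lifetime_value": crm_by_key[k], "sessions": web_by_key[k]}
    for k in matched_keys
]
print(len(matched_keys), joined)  # 1 [{'lifetime_value': 300, 'sessions': 12}]
```

Normalizing before hashing matters: without the `strip().lower()` step, " Alice@Example.com" and "alice@example.com" would produce different keys and the match would be missed. In a real clean room, the joined rows would stay inside the environment, with only aggregated results leaving it.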

Next, with global data regulations such as GDPR and CCPA in force, data clean rooms must be compliance-ready. This means having clear policies on data retention, access controls, and processing methods. Periodic audits, data lineage tracking, and other governance protocols are crucial to ensure that clean rooms adhere to these regulations.
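As a small illustration of a retention policy paired with an audit trail, the Python sketch below drops records older than a retention window and returns a log entry describing what was purged. The 90-day window, the record fields, and the `apply_retention` helper are hypothetical; actual retention periods come from your legal and contractual obligations, and clean room platforms typically handle this through their own configuration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: contributed records may be kept for at most 90 days.
RETENTION = timedelta(days=90)


def apply_retention(records, now, retention=RETENTION):
    """Drop records older than the retention window and return an audit entry."""
    cutoff = now - retention
    kept = [r for r in records if r["ingested_at"] >= cutoff]
    purged_ids = [r["id"] for r in records if r["ingested_at"] < cutoff]
    # Logging what was purged, and when, gives periodic audits evidence the policy ran.
    audit_entry = {
        "ran_at": now.isoformat(),
        "cutoff": cutoff.isoformat(),
        "purged_ids": purged_ids,
    }
    return kept, audit_entry


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": "r1", "ingested_at": now - timedelta(days=10)},
    {"id": "r2", "ingested_at": now - timedelta(days=120)},
]
kept, audit = apply_retention(records, now)
print([r["id"] for r in kept], audit["purged_ids"])  # ['r1'] ['r2']
```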

Setting up a data clean room is resource-intensive and requires specialized knowledge. Integration with legacy systems can also create hurdles, and ensuring continuous compliance as regulations evolve can be taxing. Several well-known cloud companies have stepped in with solutions.

Data clean room providers

For companies looking for clean rooms, there are many to choose from.

Snowflake Global Clean Rooms

Snowflake has consistently positioned itself at the forefront of clean room offerings. Built from the ground up with a distinctive multi-cluster architecture, Snowflake promotes a paradigm where data sharing doesn’t necessarily mean sharing raw data. This, combined with its Zero-Copy Cloning feature, underpins Snowflake’s commitment to secure data collaboration. For organizations seeking to share insights easily without compromising data sanctity, Snowflake’s offering might be particularly appealing.

Databricks Clean Rooms

Databricks Clean Rooms, on the other hand, integrates big data and AI functionality into a unified platform, making it a strong fit for analytics-intensive use cases. For example, its Delta Lake storage layer brings the reliability of ACID transactions to Apache Spark and other big data workloads. This makes Databricks especially attractive for organizations eyeing an end-to-end analytics solution, from ETL to AI modeling.

Google BigQuery Clean Rooms

Google BigQuery Clean Rooms differentiates itself with its serverless approach to data analysis, emphasizing scalability without the overhead of infrastructure management. Paired with geospatial analytics and in-warehouse machine learning capabilities, it provides a holistic solution for organizations looking for both data querying and machine learning within the same ecosystem.

AWS Clean Rooms

Lastly, AWS Clean Rooms taps into the vast AWS ecosystem, promising granular security and a plethora of storage integrations. Organizations already invested in AWS might find that it aligns seamlessly with their existing workflows while providing both data security and flexibility.

Data clean room examples

Publishers sharing data with advertisers

As third-party cookies are phased out, clean rooms let publishers share insights about their audiences with advertisers to enhance ad targeting and measure outcomes like sales. Rather than transferring raw, potentially identifiable data, these spaces offer a controlled environment where aggregated insights can be shared, balancing the need for privacy with the demands of data-driven marketing.
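Outcome measurement of this kind can be sketched as a set intersection over pseudonymized keys that reports only counts, and only when enough users are involved. In the minimal Python sketch below, the publisher contributes keys for users exposed to a campaign and the advertiser contributes keys for purchasers; the keys, the `MIN_USERS` threshold, and the `campaign_report` helper are hypothetical, and real platforms compute such reports inside the clean room under their own rules.

```python
# Hypothetical minimum: don't report results backed by fewer converted users than this.
MIN_USERS = 2


def campaign_report(exposed_keys, purchaser_keys, min_users=MIN_USERS):
    """Compare publisher exposure with advertiser purchases using pseudonymized keys.

    Returns aggregate counts only, or None if the result is too small to share safely.
    """
    exposed = set(exposed_keys)
    converted = exposed & set(purchaser_keys)
    # Suppress small results; the empty check also avoids dividing by zero.
    if not exposed or len(converted) < min_users:
        return None
    return {
        "exposed": len(exposed),
        "converted": len(converted),
        "conversion_rate": len(converted) / len(exposed),
    }


# Keys stand in for pseudonymized user identifiers contributed by each party.
publisher_exposed = ["k1", "k2", "k3", "k4"]
advertiser_purchasers = ["k2", "k4", "k9"]

print(campaign_report(publisher_exposed, advertiser_purchasers))
# {'exposed': 4, 'converted': 2, 'conversion_rate': 0.5}
print(campaign_report(["k1"], ["k1"]))  # None
```

Neither party learns which specific users overlapped; the advertiser sees a conversion rate, and the publisher never receives the purchase list.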

Healthcare institutions sharing data with medical researchers

Medical researchers can analyze patient data without exposing sensitive personal information, enabling studies on large datasets while preserving patient privacy.

Banks sharing data with third-party vendors

Financial institutions employ data clean rooms to securely share transaction and customer behavior insights with third-party vendors without revealing individual transaction details.

Manufacturers sharing data with suppliers

Manufacturers use data clean rooms to share production insights with suppliers while keeping proprietary manufacturing processes and specific contractual details private.

A data clean room is only as clean as the data that goes in

As more companies embrace them, remember that the real value inside a data clean room stems from the quality of data ushered in. As the saying goes, garbage in, garbage out. Poor quality data can lead to spurious insights, even if the data is processed in a secure and privacy-compliant manner.

To assure counterparties about the quality of your data, you need a constant eye on your data pipelines. That’s where a data observability platform like Monte Carlo comes into play. Observability allows you to:

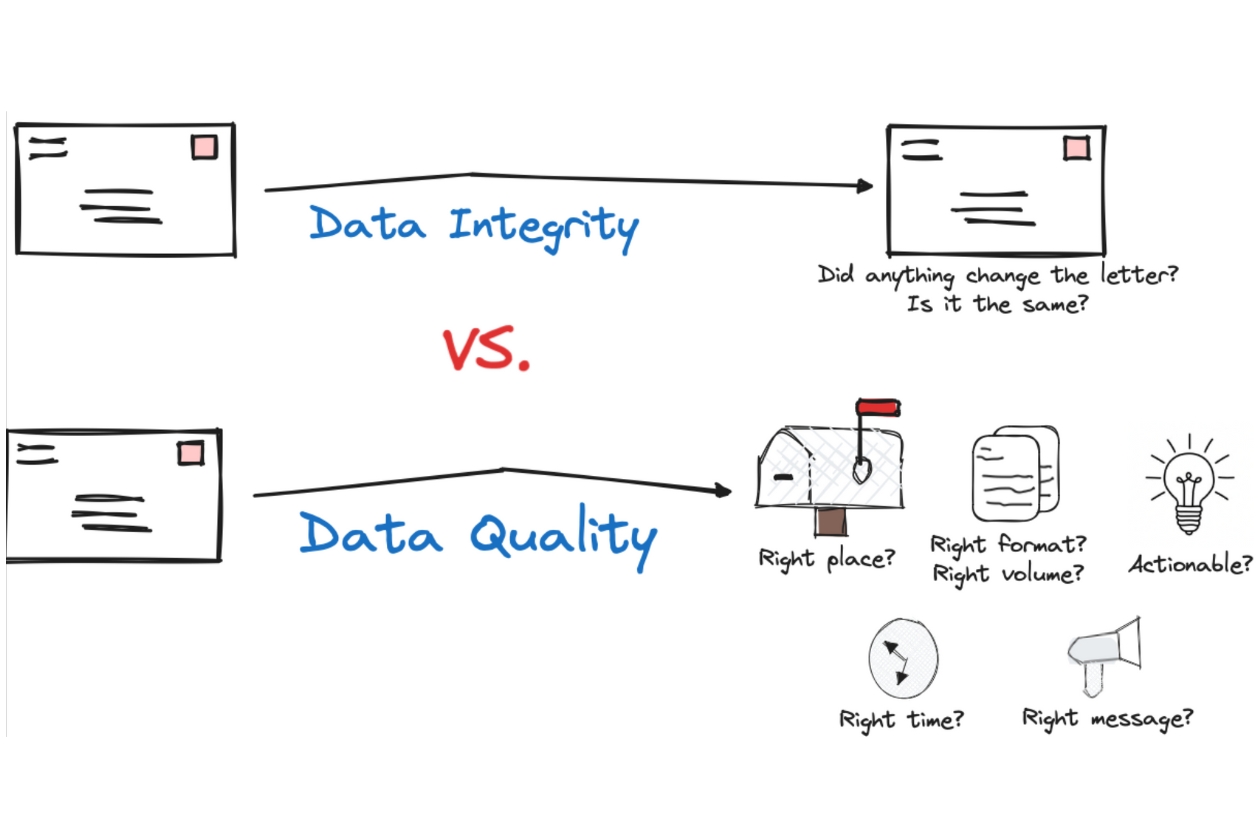

- Monitor Data Quality: Ensure only accurate and reliable data enters the clean room, avoiding spurious insights.

- Track Lineage & Provenance: Understand data sources and transformations, ensuring data integrity when combining multiple sources.

- Ensure Timeliness & Freshness: Confirm that data is current and relevant, with alerts for unexpected delays or gaps.

- Assure Compliance: Verify that data adheres to regulations like GDPR or CCPA before it’s used in the clean room.

- Detect Anomalies: Identify and act upon data inconsistencies or irregularities in real time, safeguarding the quality of insights derived.
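Observability platforms automate checks along these lines. As a rough, hand-rolled illustration (not Monte Carlo’s API or its actual detection method), the Python sketch below shows two of the simplest: a freshness check against a maximum allowed lag, and a z-score test that flags an unusual daily row count. Function names, thresholds, and data are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def is_fresh(last_loaded_at, now, max_lag):
    """True if the table was loaded within max_lag of now."""
    return now - last_loaded_at <= max_lag


def is_volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it is more than z_threshold standard deviations from the historical mean.

    history needs at least two values, because statistics.stdev requires them.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly stable history: any change at all is unusual.
        return today != mu
    return abs(today - mu) / sigma > z_threshold


now = datetime(2024, 6, 1, 12, tzinfo=timezone.utc)
print(is_fresh(now - timedelta(hours=30), now, max_lag=timedelta(hours=24)))  # False

daily_row_counts = [1000, 1020, 980, 1010, 990]
print(is_volume_anomaly(daily_row_counts, 400))   # True
print(is_volume_anomaly(daily_row_counts, 1005))  # False
```

A fixed z-score cutoff is the simplest possible choice; it ignores seasonality such as weekday versus weekend volume, which production monitoring generally needs to account for.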

If you’re keen on optimizing the quality of data within your clean room, it’s time to explore Monte Carlo to ensure your insights are both secure and spot-on.
