5 Proven Best Practices for Measuring Data Team ROI

Data leaders are, by nature, comfortable with pulling metrics and crunching numbers. So why is measuring data team ROI such a universally challenging task?

That was the first question we posed to our recent powerhouse panel of data leaders: Meenal Iyer, VP of Data at Momentive (maker of SurveyMonkey); Shivani Khanna Stumpf, Group VP of Analytics, Data Science, and New Solutions at education tech provider PowerSchool; and Barr Moses, CEO and co-founder of Monte Carlo.

We assembled this trio to break down the do’s and don’ts of measuring data team ROI. With decades of experience building and leading teams, they brought hard-won wisdom and strong opinions to our lively conversation.

Watch the full on-demand video, or read on for five top takeaways from Meenal, Shivani, and Barr.

Accept that calculating data team ROI is hard.

Our panel confirmed what many data leaders know to be true: measuring the ROI of your data team is no small feat.

According to Meenal, the difficulty in measuring ROI arises because data teams sit between technology and business.

“When we measure ROI, we have to merge both of these together. Technology enables us, but it does not drive strategy for us. So we have to ensure that our strategy is driven through business and organizational objectives, but then we use technology to make us more effective.”

While data teams and technical engineering teams can be closely related—and share best practices in areas like observability—how they measure ROI can be quite different.

“The challenge for data teams and data products is that we’re not in control of the data that gets generated,” Shivani said. While application engineers are designing their systems from the ground up, data teams are on the receiving end of whatever inputs are generated by transactional systems within a product. “And if the transactional systems are not structured in a way to think about how they want to collect and analyze the data, it’s going to have massive downstream implications.”

Our panelists agreed that for data leaders, it can be difficult simply to identify what outcomes are important to measure.

“As data people, we can measure everything,” said Barr. “And we know what it’s like to be overloaded with metrics that don’t matter. Measuring ROI is a journey. It’s hard to do it overnight.”

Focus on the business impact of your data team.

While data teams can—and should—look at SLAs around data quality and response times, our panelists all recommend looking at the bigger picture for ROI. How does your data team impact the business?

For Barr, there are four general ways a data team can contribute value to an organization. She used the example of supporting a marketing team to describe each:

- Increase the ROI of a business function’s operations

The data team can provide the marketing team with information on which channels are most effective at driving conversions, which allows the marketing team to improve their own operational ROI.

- Help identify or uncover net-new growth initiatives

The data team can help the marketing team identify a new segment or strategy, and conduct simulation scenarios or experiments to learn about the possibilities before the marketing team invests real dollars in a new initiative.

- Find ways to scale initiatives

The data team could automate insights for the marketing team, helping them scale from Excel spreadsheets to self-serve data products.

- Build new capabilities

The data team could create models or optimizations that enable the marketing team to adopt a sophisticated multi-touch attribution model.

“Once you know the different ways a data team is driving impact across business functions, you can quantify that impact,” said Barr. She advised either quantifying impact on a dollar-by-dollar basis (in the growth initiative example above, by tying the data team’s value to the revenue brought in by the new customer segment) or using an impact scorecard that ranks how directly the data team supports each business unit.
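Both of Barr's approaches can be sketched in a few lines of Python. This is only an illustration, not the panel's method: the `Initiative` class, the 1-to-5 rating scale, and every dollar figure below are assumptions made up for the example. The ROI formula itself is the standard one, net gain divided by cost.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    """One data-team initiative. All figures used here are hypothetical."""
    name: str
    attributed_value: float  # dollars of revenue or savings credited to the data team
    cost: float              # dollars spent on people, tooling, and infrastructure


def roi(initiative: Initiative) -> float:
    """Standard ROI: (value - cost) / cost."""
    if initiative.cost <= 0:
        raise ValueError("cost must be positive")
    return (initiative.attributed_value - initiative.cost) / initiative.cost


def rank_units(scores: dict[str, int]) -> list[tuple[str, int]]:
    """Impact scorecard: order business units by how directly the data team
    supports them (assumed scale: 1 = indirect, 5 = direct)."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Dollar-by-dollar: revenue from a new customer segment vs. what it cost to find it.
segment = Initiative("new customer segment", attributed_value=300_000, cost=120_000)
print(f"{segment.name}: ROI = {roi(segment):.0%}")

# Scorecard: hypothetical ratings per business unit.
for unit, score in rank_units({"finance": 2, "marketing": 4, "sales": 3}):
    print(f"{unit}: {score}/5")
```

The dollar-based figure is easier to defend to finance, while the scorecard is useful where attribution to revenue is too indirect to put a number on.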

For Meenal’s team at Momentive, measuring ROI starts with identifying the underlying problem—and articulating the desired outcome after you solve it. “Look at the project that you’re starting, and focus on the problem you’re trying to solve and what solving it will change for you. Is it removing inefficiency? Is it automating something that was previously done manually? There is always a way to quantify the impact.”

Develop a framework that works for your business—not anyone else’s.

“I’ve been in the business of data analytics for most of my career,” said Shivani. “Every company that I’ve worked for has given me learnings that I can apply, but every situation is going to be a little different.”

Each panelist in our conversation has developed their own framework for measuring the impact of data teams within the organization. Three leaders, three frameworks, all successful:

At Momentive, Meenal measures success by her team’s ability to impact specific metrics that reflect adoption, trust, and value:

- Adoption: Are end users actually coming in and getting access to data through our self-serve platform? How is the data team reducing the total cost of ownership of data at the company, or reducing technical debt?

- Trust: Is data high-quality and well-governed so the organization as a whole is able to trust it? (Meenal points out that especially in decentralized teams, metrics across different data silos are probably not going to be consistent—so this responsibility falls to the data team, and their success can be measured by it.)

- Value: How does this initiative tie back to an organizational objective? What is the final value this work will deliver? How quickly can data platforms deliver actionable insights?

“Beyond the operational metrics that we all have to manage, in terms of our developer productivity or bug quality, or how our operations look on a day-to-day basis,” said Meenal, “I use these three metrics to measure the overall success of our team, across the organization.”

At PowerSchool, Shivani’s team is directly focused on the consumer-facing product (not supporting internal business functions). She uses three different criteria to judge success:

- Are we unleashing data as an asset in order to make it useful?

- Are we building solutions for our customers that add value?

- Are we removing redundancy?

“We really need to make sure that we’re being true to these three aspects of the problems that we’re trying to solve for our customers,” said Shivani. “If [a data initiative] doesn’t pass that litmus test, we need to go back to the drawing board and reassess what we’re trying to do.”

Within that framework, Shivani tracks and reports on specific data quality metrics, such as latency, because her team needs to provide near real-time data. “We have replication running on our transactional data sources that apply changes to our data lakes every five to 15 minutes,” said Shivani. “We closely measure the SLAs on that, and if the data doesn’t land in 15 minutes, we have alerts that go off to alert our operations and support teams in order to make sure we address anything that’s wrong.”
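A freshness check of the kind Shivani describes might look roughly like the sketch below. The 15-minute threshold comes from her quote; everything else (the function names, the table names, and printing instead of paging a support team) is an assumption for illustration and says nothing about how PowerSchool actually implements its alerts.

```python
from datetime import datetime, timedelta, timezone

# From the quote: data should land within 15 minutes.
FRESHNESS_SLA = timedelta(minutes=15)


def is_stale(last_loaded_at: datetime, now: datetime,
             sla: timedelta = FRESHNESS_SLA) -> bool:
    """Return True when the most recent load is older than the SLA allows."""
    return now - last_loaded_at > sla


def stale_tables(last_loads: dict[str, datetime], now: datetime) -> list[str]:
    """Names of tables whose latest load breaches the SLA, sorted for stable output."""
    return sorted(name for name, ts in last_loads.items() if is_stale(ts, now))


# Hypothetical table names and load times, fixed so the example is reproducible.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loads = {
    "enrollments": now - timedelta(minutes=7),
    "attendance": now - timedelta(minutes=22),
}

for table in stale_tables(last_loads, now):
    # A real pipeline would notify operations and support here instead of printing.
    print(f"ALERT: {table} has not refreshed within its freshness SLA")
```

Passing `now` in explicitly, rather than calling the clock inside the function, keeps the check easy to test.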

Finally, Barr describes three core buckets of metrics she uses to measure data team ROI at Monte Carlo:

Leading indicators (performance or activity-based)

- Is data accurate, on-time, fresh, and complete?

- What’s our percentage for adherence to data SLAs?

- What’s our time to resolve data issues? How quickly are we notified about an issue, and how quickly do we triage it?

- How many users are accessing our data products, and what is their engagement?

- How long does it take users to deliver reports or draw insights?

Lagging indicators (direct ROI)

- How is the data team impacting the ROI of different business functions (as described above)?

Team motivation

- How is my team morale?

- How do they perceive their work-life balance?

- What do our employee NPS scores tell us?

“Some of these are operational metrics that help the team, and don’t always tie back to ROI,” said Barr. “But measuring ROI will always be somewhat subjective.”
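Two of the leading indicators in Barr's first bucket, SLA adherence and time to resolve, are simple to compute once the underlying events are logged. The sketch below assumes a hypothetical `Incident` record and made-up counts; it is one possible way to calculate these numbers, not a description of how Monte Carlo does it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    """A data incident. Field names are hypothetical."""
    detected_at: datetime
    resolved_at: datetime


def sla_adherence_pct(checks_passed: int, checks_total: int) -> float:
    """Percentage of SLA checks that passed in the reporting period."""
    if checks_total <= 0:
        raise ValueError("checks_total must be positive")
    return 100.0 * checks_passed / checks_total


def mean_time_to_resolve(incidents: list[Incident]) -> timedelta:
    """Average time between detecting an incident and resolving it."""
    if not incidents:
        raise ValueError("need at least one incident")
    seconds = mean(
        [(i.resolved_at - i.detected_at).total_seconds() for i in incidents]
    )
    return timedelta(seconds=seconds)


# Hypothetical month: 285 of 300 SLA checks passed, and two incidents were resolved.
start = datetime(2024, 1, 1, 9, 0)
incidents = [
    Incident(detected_at=start, resolved_at=start + timedelta(minutes=30)),
    Incident(detected_at=start, resolved_at=start + timedelta(minutes=90)),
]

print(f"SLA adherence: {sla_adherence_pct(285, 300):.1f}%")
print(f"Mean time to resolve: {mean_time_to_resolve(incidents)}")
```

Tracked over time, the trend in these two numbers is usually more informative than any single reading.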

Start small—and specific.

Knowing where to begin is half the battle in measuring data team ROI, so our panelists all recommend starting small.

“Start with a small number you can focus on,” said Barr. “I think the biggest trap that folks fall into is analysis paralysis. You want to get it right and perfect, and then you end up doing nothing. Be really focused on starting with one product, or one team. There are folks who have been trying to do this for a couple of decades, and we’re still not getting it perfectly right. Just getting started is a great place.”

Shivani agrees. When her team first set out to measure ROI, they picked one specific case: an analytics product they had identified as significantly inefficient. They saw an opportunity to operationalize it and cut costs, and the resulting reduction in operating cost gave them a direct, trackable measure of their ROI.

“We were not trying to boil the ocean,” said Shivani. “We were not trying to figure out all these metrics right out of the gate. We looked at tangible, targeted problems that we were trying to solve, and the impact that the data teams were able to make right away.”

Claim your seat at the table

Once you’re calculating your ROI and able to demonstrate the impact your team is making, don’t stay quiet about it. Our panelists recommend making a case for data to have a seat at the leadership table.

“Data teams are unsung heroes and always an afterthought,” said Meenal. “But given that data is a huge focus and makes a huge impact on organizations, all data teams now have more of a say. Use that opportunity to make yourself heard.”

By educating the C-suite about the value that your data team is providing, Meenal said, “You can get a seat at the table to head off problems, be advised of what’s on the horizon, and have the ability to influence change.”

And when it’s clear how much value can be created (or costs saved) by better use of data, it becomes easier to have better conversations and drive adoption with stakeholders throughout the organization.

“Find ways to hold your stakeholders accountable,” said Barr. “Delivering a strong data solution requires your stakeholders to participate. We can’t just throw the data over to marketing and hope it works out.”

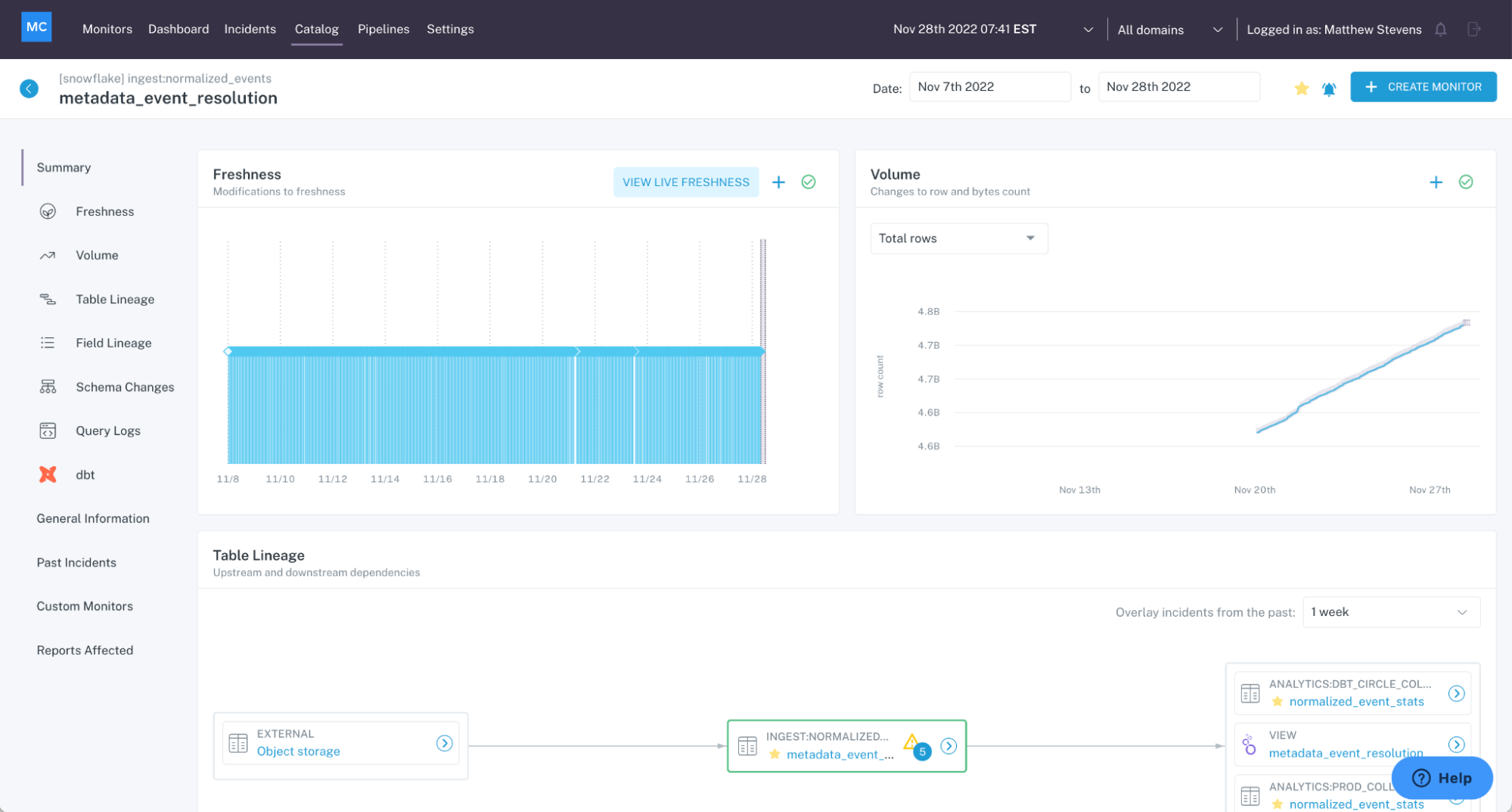

But first: surface the right data metrics

Finally, our panelists agreed that the right tooling makes calculating the ROI of data teams—especially around data accuracy and trust—much easier.

“Especially when you’re dealing with petabyte-scale datasets, it’s not humanly possible to anticipate all the issues that are going to come,” said Shivani. “That’s why anomaly detection, artificial intelligence and machine learning, pattern recognition around our data sets, all of the things that Monte Carlo provides help us understand our data and what we need to do.”

Ultimately, the benefit of calculating your data team ROI is having the knowledge at your fingertips to improve it. As Barr said, “At the end of the day, to be totally honest, the metrics don’t matter if you’re not doing anything with them.”

Curious how data observability can help maximize your data team’s ROI? Contact Monte Carlo to learn more.
