Data Testing vs Data Observability: Everything You Need to Know

Data Observability vs Data Testing — do you really need both?

For the past decade, the first-and-only line of defense against bad data has been data testing.

Similar to how software engineers would use unit tests to identify buggy code before it was pushed to production, data engineers would leverage data testing to detect and prevent potential data quality issues from moving further downstream. This approach was (mostly) fine—until the modern data workflow evolved such that companies began ingesting so much data into their database that a single point of failure just wasn’t feasible.

In the world of software engineering, no programmer would ever deploy code to production without testing it first. Similarly, no engineer would run production code without continuous monitoring and observability.

Given that analytical data powers these software applications—not to mention decision-making and strategy for the broader business—why aren’t we applying this same diligence to our data pipelines?

Data, especially big data, is constantly changing, and the consequences of working with unreliable data are far more significant than just an inaccurate dashboard or report. Even the most trivial-seeming errors in data quality can snowball into a much bigger issue down the line.

Recently, a FinTech customer (we’ll call her Maria) explained how a currency conversion error that her team failed to catch until days after it hit led to a significant loss in revenue and engineering time.

Maria and her team assumed they had all the proper tests in place to prevent data downtime —until they didn’t. What happened?

In any data system, there are two types of data quality issues: those you can predict (known unknowns) and those you can’t (unknown unknowns). While Maria and her team had hundreds of tests in place to catch a discrete set of known unknowns, they didn’t have a clear way to account for unknown unknowns or adding new tests to account for known unknowns as the company’s data ecosystem evolved.

By the time Maria fixed the issue, the damage had been done.

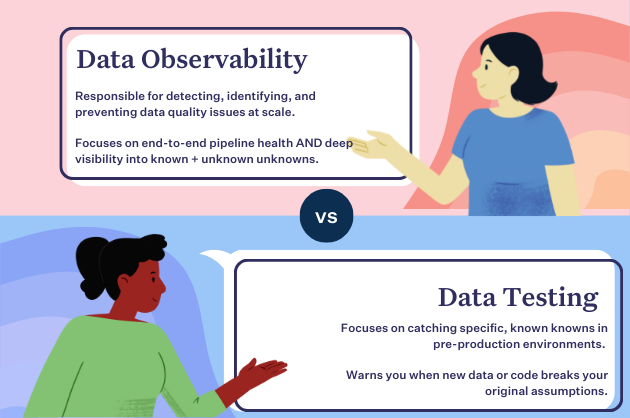

The truth is, known unknowns and unknown unknowns require two distinct approaches: data testing and data observability. In this article, we’ll discuss what each is, how they differ, and what some of the best teams are doing to tackle data quality at scale.

Table of Contents

What is data testing?

Data testing is a form of data quality practice that involves writing manual checks in SQL for known data quality issues to protect ETL pipelines from downtime and confirm that your code is working correctly in a small set of well-known scenarios.

Also known as data quality testing, data testing is a must-have to help catch specific, known problems in your database and data pipelines and will warn you when new data or code breaks your original assumptions.

Some of the most common data quality tests include:

- Null Values – Are any values unknown (NULL) where there shouldn’t be?

- Volume – Did your data arrive? And if so, did you receive too much or too little?

- Distribution – Are numeric values within the expected/ required range?

- Uniqueness – Are any duplicate values in your unique ID fields?

- Known invariants – Is profit always the difference between revenue and cost?

Data testing is very similar to how software engineers use testing to alert them on well-understood issues that they anticipate to happen to their applications.

But is data testing enough to stay on top of broken data pipelines?

When should I test my data?

One of the most common ways to discover data quality issues before they enter your production data pipeline is by testing your data. With data testing, data engineers can validate their organization’s assumptions about the data and write logic to prevent the issue from working its way downstream.

Similar to software, data is fundamental to the success of your business. Therefore, it needs to be reliable and trustworthy at all times. In the same way that Site Reliability Engineers (SREs) manage application downtime, data engineers need to focus on reducing data downtime. And that involves being able to efficiently detect and resolve data quality issues at scale.

Much in the same way that unit and integration testing is insufficient for ensuring software reliability, data testing alone cannot solve for data reliability. While data testing is great for detecting known issues on a small scale, the manual nature of data testing prevents it from being manageable at scale. And while data testing can be helpful for detecting a subset of data quality issues, it’s not very helpful in resolving them.

In order to appropriately manage and maintain data reliability, organizations need a solution that will empower them to both detect and resolve data quality issues — including known unknowns and unknown unknowns — in away that’s efficient, repeatable, and scalable.

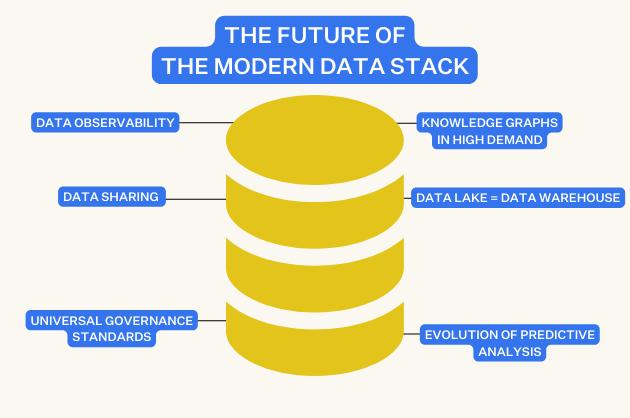

What is data observability?

Unlike data testing, data observability refers to a comprehensive scalable solution that allows teams to detect and resolve data quality issues in a single platform. Put simply, where testing would simply identify problems, data observability identifies those problems and makes them actionable.

When should I use data observability?

Even the most comprehensive data testing suites can’t account for any and every issue that might arise across your entire stack. To account for both unknown unknowns and keep up with the ever growing amount of known unknowns in your data pipeline, you need to invest in data observability, too.

To guarantee high data reliability, your approach to data quality must have end-to-end visibility over your data pipelines. Like DevOps observability solutions (i.e., Datadog and New Relic), data observability uses automated monitoring, alerting, and triaging to identify and evaluate data quality issues.

Some examples of data quality issues covered by observability include:

- A Looker dashboard or report that is not updating, and the stale data goes unnoticed for several months—until a business executive goes to access it for the end of the quarter and notices the data is wrong.

- A small change to your organization’s codebase that causes an API to stop collecting data that powers a critical field in your Tableau dashboard.

- An accidental change to your JSON schema that turns 50,000 rows into 500,000 overnight.

- An unintended change happens to your ETL (or ELT) that causes some tests not to run, leading to data quality issues that go unnoticed for a few days.

- A test that has been a part of your pipelines for years but has not been updated recently to reflect the current business logic.

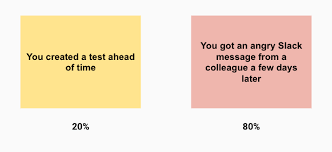

If testing your data catches 20% of data quality issues, the other 80% is accounted for by data observability. Data observability also helps your team identify the “why?” behind broken data pipelines—even if the issue isn’t related to the data itself.

Four ways data observability differs from data testing

Data observability and data testing help you accomplish the same goal—reliable data. Both testing and observability are important, and both approaches go hand-in-hand, but each method goes about ensuring data quality differently.

Here are four critical ways observability differs from data testing:

1) End-to-end coverage

Modern data environments are highly complex, and for most data teams, creating and maintaining a high coverage robust data testing suite is not possible or desirable in many instances.

Due to the sheer complexity of data, it is improbable that data engineers can anticipate all eventualities during development. Where testing falls short, observability fills the gap, providing an additional layer of visibility into your entire data stack.

Data observability tools should use machine learning models to are automatically trained to learn your environment and your data. It uses anomaly detection techniques to let you know when things break, and it minimizes false positives by with training that takes into account not just individual metrics, but a holistic view of your data and the potential impact from any particular issue. You do not need to spend resources configuring and maintaining noisy rules within your data observability platform.

2) Scalability

Writing, maintaining, and deleting tests to adapt to the needs of the business can be challenging, particularly as data teams grow and companies distribute data across multiple domains. A common theme we hear from data engineers is the complexity of scaling data tests across their data ecosystem.

If you are constantly going back to your data pipelines and adding testing coverage for existing pipelines, it’s likely a massive investment for the members of your data team.

And, when crucial knowledge regarding data pipelines lies with a few members of your data team, retroactively addressing testing debt will become a nuisance—and result in diverting resources and energy that could have otherwise been spent on projects that move the needle.

Observability can help mitigate some of the challenges that come with scaling data reliability across your pipelines. By using an ML-based approach to learn from past data and monitor new incoming data, data teams can gain visibility into existing data pipelines with little to no investment. With the ability to set custom monitors, your team can also ensure that unique business cases and crucial assets are covered, too.

3) Root cause & impact analysis

Testing can help catch issues before data enters production. But even the most robust tests can’t catch every possible problem that may arise—data downtime will still occur. And when it does, observability makes it faster and easier for teams to solve problems that happen at every stage of the data lifecycle.

Since data observability includes end-to-end data lineage, when data breaks, data engineers can quickly identify upstream and downstream dependencies. This makes root cause analysis much faster, and helps team members proactively notify impacted business users and keep them in the loop while they work to troubleshoot the problem.

4) Saving time and resources

One of the most prominent challenges data engineers face is speed – and they often sacrifice quality to achieve it. Your data team is already limited in time and resources, and pulling data for that critical report your Chief Marketing Officer has been bugging you about for weeks can’t wait any longer. Sure, there are any number of tests you should set before you transform the data, but there simply isn’t enough time.

Data observability acts as your insurance policy against bad data and accelerates the pace your team can deploy new pipelines. Not only does it cover those unknown unknowns but also helps you catch known issues that scale with your data.

So, do you need both?

No matter how great your testing suite is, unless your data is static, it’s simply not feasible to expect that testing alone will save you from data downtime. The uplift required to write new tests, update existing tests and thresholds, and deprecate old tests as your data stack evolves is tedious even for the most well-staffed teams.

While data testing can work for smaller pipelines, it does not scale well across distributed data systems. To meet the needs of this new normal, data teams must rely on both testing and end-to-end observability.

With this two-pronged approach to data quality, your defense against downtime just got a whole lot stronger.

Interested in learning more about how data observability can supplement your testing? Reach out to Scott O’Leary and book a time to speak with us in the form below.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage