Data Fabric vs. Data Mesh: Everything You Need to Know

Enterprise data is more complex than ever before. More data is coming from disparate sources, and most of that data is likely to be unstructured.

To address these challenges, new frameworks are regularly emerging that promise to simplify and optimize how data is ingested, stored, transformed, and analyzed. One of the latest is the concept of a data fabric.

A data fabric isn’t just a buzzword, but it is a somewhat abstract design concept. So let’s unpack what a data fabric actually is, how it works, what it promises to deliver—and how it differs from the similarly popular data mesh architecture.

What is a data fabric?

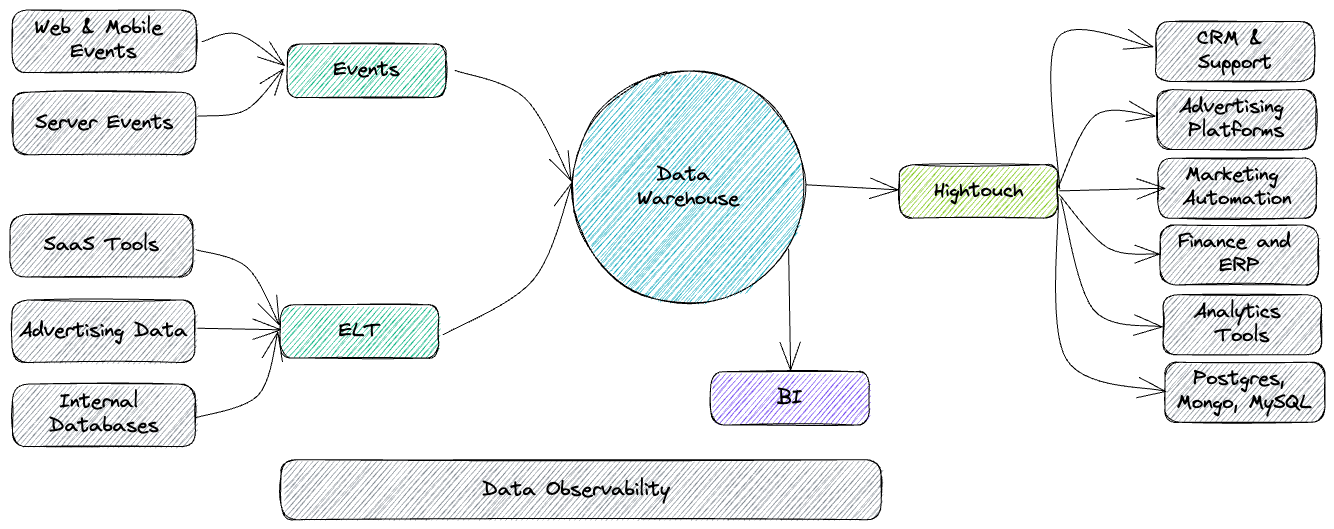

Put as simply as possible, a data fabric is a data platform architecture framework with a layer of technology that separates your data from your applications. This creates a centralized network of all your data, where connections and relationships can be identified freely—without relying on point-to-point integrations between specific applications or datasets.

Benefits of a data fabric

The core promise of the data fabric is making it faster and simpler for companies to extract valuable insights from their data. This happens when your data fabric unifies all your data, provides universal access controls, and improves discoverability for all data consumers.

Instead of relying on time-consuming integrations, complicated pipelines, and hefty relational databases, data consumers can tap into easily accessible and visualized data. Repetitive tasks get automated, and data that would otherwise sit idle is automatically ingested and put to use.

Abstract enough for you? Let’s dive into the nuts and bolts of how a data fabric gets woven together in the first place.

How does a data fabric work?

Data fabric architecture is complex, but there are a few key components that every data fabric should include: automated metadata analysis, semantic knowledge graphs, and universal access controls.

Key components of a data fabric

Metadata analysis

Metadata is data about your data. And simply put, your metadata is the foundation of your data fabric.

A data fabric makes your metadata active by continuously querying and analyzing it. These interactions are how your data fabric identifies the connections and relationships within your datasets.

This also makes it possible to decouple your data from the applications that contain it. That decoupled data can then be accessed through the fabric itself, rather than through point-to-point integrations.
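To make "active" metadata a little more concrete, here is a minimal Python sketch of one way relationships could be inferred from metadata alone. It assumes each dataset's metadata is just a mapping of column names to types, and it links datasets that share a column with the same name and type. The dataset names and this join-key rule are hypothetical simplifications for illustration. A real data fabric would draw on many more signals, such as query logs, lineage, and usage statistics.

```python
from itertools import combinations

# Hypothetical metadata: dataset name -> {column name: column type}.
# In practice this would be harvested continuously from catalogs,
# warehouses, and applications rather than hard-coded.
METADATA = {
    "orders": {"order_id": "int", "customer_id": "int", "amount": "float"},
    "customers": {"customer_id": "int", "email": "str", "region": "str"},
    "web_events": {"session_id": "str", "email": "str", "ts": "timestamp"},
}


def infer_relationships(metadata):
    """Return (dataset_a, dataset_b, column) triples for every pair of datasets
    that share a column with the same name and type. This is a deliberately
    naive join-key heuristic, used only to illustrate the idea."""
    links = []
    for (name_a, cols_a), (name_b, cols_b) in combinations(sorted(metadata.items()), 2):
        for column in sorted(cols_a.keys() & cols_b.keys()):
            if cols_a[column] == cols_b[column]:
                links.append((name_a, name_b, column))
    return links


if __name__ == "__main__":
    for a, b, col in infer_relationships(METADATA):
        print(f"{a} <-> {b} via {col}")
    # customers <-> orders via customer_id
    # customers <-> web_events via email
```

Note that no application-specific integration is involved: the links come entirely from the metadata, which is what lets the fabric connect datasets that were never explicitly wired together.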

This reliance on metadata within the data fabric framework confirms what we’ve discussed before: modern data teams need automated, scalable metadata management.

Knowledge graphs

A data fabric is useful because it makes it easy for consumers to discover insights and access the data they need to do their jobs. According to Gartner, this happens when the data fabric creates knowledge graphs. Knowledge graphs provide a semantic layer that is easy to interpret and lets teams extract meaning from data, and that layer is how the value of the data fabric reaches consumers.
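As a rough illustration of what a semantic layer adds, here is a tiny in-memory knowledge graph in Python. It assumes knowledge is stored as (subject, predicate, object) triples, the general shape used by graph standards such as RDF. The `KnowledgeGraph` class, its methods, and the predicates `describes` and `joins_to` are hypothetical names chosen for this sketch, not the API of any particular product.

```python
from collections import defaultdict

# Hypothetical triples linking physical datasets to business concepts
# and to each other.
TRIPLES = [
    ("customers", "describes", "Customer"),
    ("orders", "describes", "Purchase"),
    ("web_events", "describes", "Website visit"),
    ("orders", "joins_to", "customers"),
    ("web_events", "joins_to", "customers"),
]


class KnowledgeGraph:
    """A minimal triple store that lets consumers ask business-level questions."""

    def __init__(self, triples):
        self.out_edges = defaultdict(list)  # subject -> [(predicate, object)]
        self.in_edges = defaultdict(list)   # object -> [(predicate, subject)]
        for subject, predicate, obj in triples:
            self.out_edges[subject].append((predicate, obj))
            self.in_edges[obj].append((predicate, subject))

    def datasets_about(self, concept):
        """Datasets that directly describe a business concept."""
        return sorted(s for p, s in self.in_edges[concept] if p == "describes")

    def related_datasets(self, dataset):
        """Datasets joinable with the given dataset, in either direction."""
        related = {o for p, o in self.out_edges[dataset] if p == "joins_to"}
        related |= {s for p, s in self.in_edges[dataset] if p == "joins_to"}
        return sorted(related)


kg = KnowledgeGraph(TRIPLES)
print(kg.datasets_about("Customer"))      # ['customers']
print(kg.related_datasets("customers"))   # ['orders', 'web_events']
```

The point of the sketch is the question a consumer gets to ask: "what data do we have about customers, and what can I combine it with?" rather than "which table in which application holds this?"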

Universal access controls

Your data fabric should allow you to control access to data, making it simpler to meet compliance standards and manage permissions across your entire data landscape. Given the comprehensive nature of the data fabric, you should be able to set universal controls—embedding access and permissions at the data level, rather than setting them over and over for every app or source.
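Here is a minimal Python sketch of what "set once, at the data level" could look like. It assumes sensitivity tags (such as `pii` or `finance`) are attached to columns, and a single role-to-tag policy is evaluated for every request, no matter which application makes it. The tags, roles, and the `AccessRequest` and `is_allowed` names are all hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass

# Hypothetical column-level tags, attached to the data itself rather than
# configured separately inside each application.
COLUMN_TAGS = {
    ("customers", "email"): {"pii"},
    ("customers", "region"): set(),
    ("orders", "amount"): {"finance"},
}

# One policy for the whole data landscape: which roles may read which tags.
ROLE_ALLOWED_TAGS = {
    "analyst": {"finance"},
    "support": {"pii"},
    "admin": {"pii", "finance"},
}


@dataclass(frozen=True)
class AccessRequest:
    role: str
    dataset: str
    column: str
    app: str  # the requesting application; deliberately ignored by the policy


def is_allowed(request: AccessRequest) -> bool:
    """Allow access only if the role is cleared for every tag on the column.
    Untagged columns are readable by any known role; unknown columns and
    unknown roles are denied by default."""
    key = (request.dataset, request.column)
    if key not in COLUMN_TAGS or request.role not in ROLE_ALLOWED_TAGS:
        return False
    return COLUMN_TAGS[key] <= ROLE_ALLOWED_TAGS[request.role]


# The same rule applies whether the request comes from a BI tool or a notebook.
print(is_allowed(AccessRequest("analyst", "customers", "email", "bi_tool")))  # False
print(is_allowed(AccessRequest("support", "customers", "email", "notebook")))  # True
```

Because the `app` field never enters the decision, adding a new application does not mean re-creating permissions for it; the policy follows the data.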

Key challenges of building a data fabric

No off-the-shelf solution yet exists

Data fabrics are made possible with technology, but there isn’t yet a single solution on the market that can provide a comprehensive data fabric architecture (again, according to Gartner). So data teams will need to build their own data fabric by combining out-of-the-box and homegrown solutions. As any data engineer knows, that will take considerable time and effort to construct and maintain.

Organizational buy-in

Big architectural shifts always require buy-in from the right leaders and stakeholders. As you begin to explore whether the data fabric is right for your business, include those key leaders in your conversations and begin to build support from the earliest possible days. Your staunchest advocates will likely be those leaders who feel the pain of hard-to-discover data, slow time to insight, or manual data management processes.

Data reliability and trustworthiness

In order for your data fabric to be effective, your data must be trustworthy. After all, making bad data more accessible and discoverable could cost you dearly, doing more harm than good.

As you begin to build out and implement your data fabric, have good manual testing processes in place for your most critical assets. And as you scale, incorporate data observability tooling into your data fabric. Data observability helps ensure that your data reliably meets your expectations across freshness, distribution, volume, and schema, and that you have good data lineage in place.
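To show what checking those four dimensions means in practice, here is a minimal Python sketch of a single-table health check. It assumes you already collect a few statistics per table (last update time, row count, schema, and the null rate of a key column) and compare them against hand-written expectations. The field names and thresholds are hypothetical. Dedicated observability tools often go further, for example by learning thresholds from historical metadata, and lineage is not covered here at all.

```python
from datetime import datetime, timedelta, timezone


def check_table(stats, expectations, now=None):
    """Compare collected table statistics against expectations.
    Returns a list of human-readable issues; an empty list means every check passed."""
    if now is None:
        now = datetime.now(timezone.utc)
    issues = []

    # Freshness: has the table been updated recently enough?
    age = now - stats["last_updated"]
    if age > expectations["max_staleness"]:
        issues.append(f"freshness: last update {age} ago")

    # Volume: is the row count within the expected range?
    low, high = expectations["row_count_range"]
    if not low <= stats["row_count"] <= high:
        issues.append(f"volume: {stats['row_count']} rows outside [{low}, {high}]")

    # Schema: did columns appear, disappear, or change type?
    if stats["schema"] != expectations["schema"]:
        issues.append("schema: columns or types changed")

    # Distribution: is the share of nulls in a key column unusually high?
    if stats["null_rate"] > expectations["max_null_rate"]:
        issues.append(f"distribution: null rate {stats['null_rate']:.0%} too high")

    return issues


# Hypothetical expectations for an "orders" table.
EXPECTATIONS = {
    "max_staleness": timedelta(hours=6),
    "row_count_range": (900, 1100),
    "schema": {"order_id": "int", "customer_id": "int", "amount": "float"},
    "max_null_rate": 0.05,
}
```

A pipeline could run a check like this before a dataset is exposed through the fabric, so that consumers never discover a stale or broken table on their own.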

Data fabric examples

Domino’s

Domino’s now describes itself as an “e-commerce company that happens to sell pizza”. With Domino’s AnyWare, customers can order pizzas via Alexa or Google Home, Slack, text message, smart TVs, or Domino’s own website or apps. That’s just one reason why Domino’s is ingesting a huge amount of customer data across 85,000 structured and unstructured data sources. And the pizza brand is using a data fabric architecture to bring that data together and provide a 360-degree customer view.

Ducati

Italian motorcycle brand Ducati collects data from dozens of physical sensors placed on its MotoGP racing bikes. That performance data helps engineers to analyze and refine the design of their bikes, making improvements to product development based on real-world use. Ducati uses a data fabric architecture to consolidate its data and facilitate more efficient storage and broader discoverability.

The U.S. Army

The U.S. Army—in fact, the entire Department of Defense—is adopting the data fabric framework to deliver the most relevant insights to its personnel across the globe, as fast as possible. Data is quickly becoming one of the military’s most strategic assets, and the defense department wants to ensure its officers have access to the right information at the right time.

Historically, military data has been isolated and restricted. But current leadership is taking a modern approach and has articulated its data fabric as a “Department of Defense federated data environment for sharing information through interfaces and services to discover, understand, and exchange data with partners across all domains, security levels, and echelons.”

Data mesh vs. data fabric

Let’s pause for a moment and clear up a common question—what’s the difference between a data fabric and a data mesh?

Both are popular (and somewhat abstract) concepts in data platform architecture. Both address key challenges of managing data at scale in the modern enterprise. And both are named after textiles.

But they are two entirely different concepts. Here’s what you need to know.

What is a data mesh?

As we’ve described before, a data mesh is an approach to data platform architecture that uses a domain-oriented, self-serve design. A data mesh is an organizational paradigm, not a piece of technology, and it includes four basic pillars:

- Distributing ownership of data from one centralized team to the business domains where the data comes from, who are most knowledgeable and suitable to control it

- Providing self-serve data infrastructure to empower teams to access and leverage data as the business needs

- Treating data as a product

- Federating data governance while providing universal standards of quality, discoverability, and schema
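The last pillar is where the mesh’s decentralization meets shared rules: each domain owns its data products, but every product must meet the same organization-wide standards. Here is a minimal Python sketch of that idea. The `DataProduct` record and the specific rules (an accountable owner, a description for discoverability, a published snake_case schema, and a freshness SLA) are hypothetical examples; each organization would agree on its own standards.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9_]*$")


@dataclass
class DataProduct:
    name: str
    domain: str  # the business domain that owns and is accountable for the product
    owner: str
    description: str
    schema: Dict[str, str] = field(default_factory=dict)
    freshness_sla_hours: Optional[int] = None


def check_global_standards(product: DataProduct) -> List[str]:
    """Apply the same hypothetical standards to every domain's data product.
    Returns a list of violations; an empty list means the product complies."""
    violations = []
    if not product.owner:
        violations.append("ownership: no accountable owner")
    if not product.description.strip():
        violations.append("discoverability: missing description")
    if not product.schema:
        violations.append("schema: no published schema")
    bad_columns = sorted(c for c in product.schema if not SNAKE_CASE.match(c))
    if bad_columns:
        violations.append(f"schema: non-snake_case columns {bad_columns}")
    if product.freshness_sla_hours is None:
        violations.append("quality: no freshness SLA")
    return violations


orders = DataProduct(
    name="checkout.orders",
    domain="checkout",
    owner="checkout-data-team",
    description="One row per completed order.",
    schema={"order_id": "int", "customer_id": "int", "amount": "float"},
    freshness_sla_hours=6,
)
print(check_global_standards(orders))  # []
```

The domain team decides what goes into its product; the central function only defines and checks the rules, which is the federated balance the mesh aims for.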

Similarities between a data mesh and a data fabric

Both a data mesh and a data fabric attempt to solve the same problem: the complexity modern data teams face in attempting to manage ever-increasing amounts of data at scale.

And both should, ideally, provide similar outcomes: enabling businesses to more efficiently extract useful insights from their data.

A data mesh and a data fabric could exist simultaneously in the same organization—it’s not an either/or proposition. But it’s important to understand the differences in how the two approaches go about consolidating and democratizing access to data.

How does a data mesh differ from a data fabric?

At the highest level, a data mesh is an organizational paradigm and a data fabric is a layer of technology.

To build a data mesh, you have to orient your business around domain teams and open up ownership and control over data. To build a data fabric, you have to leverage automation across your applications and datasets.

One key difference is how data ownership is treated by each framework. A data mesh will apply a microservice-inspired approach with domain teams owning and being accountable for their data, while a data fabric relies on a virtual layer to manage all data across a single environment—usually with a single team overseeing that data.

Is a data fabric right for your company?

A data fabric is powerful, but as you can tell by now, quite complex. And since it’s still an emerging technology, building a data fabric requires a significant up-front investment. As with any complex architecture, whether a data fabric makes sense for you depends on:

The volume and growth of your company’s data. Are you dealing with a large amount of unstructured data that’s challenging to manage with traditional data catalogs? Do you expect your data landscape to continue growing over the next several years?

The number of data applications you rely on. Are you ingesting data from dozens of different sources? Are you transforming data in multiple applications? Are you storing data across multiple cloud or in-house environments?

Your data team structure. Do you have in-house expertise ready to take on a data fabric? Do you have a data engineering team qualified to design, implement, test, and refine a complex architecture?

Your data health. Do you have reliable data that can be trusted? Do you have quality metadata available to power your data fabric?

Curious how to build a more reliable and scalable data fabric? Reach out to the Monte Carlo team to learn how to drive adoption and trust of your data fabric with better data quality.
