Building a Data Platform: 7 Signs It’s Time to Invest in Data Quality

One of the most frequent questions I get from customers is: “When does it make sense to invest in data quality and observability?”

My answer is, “it depends.”

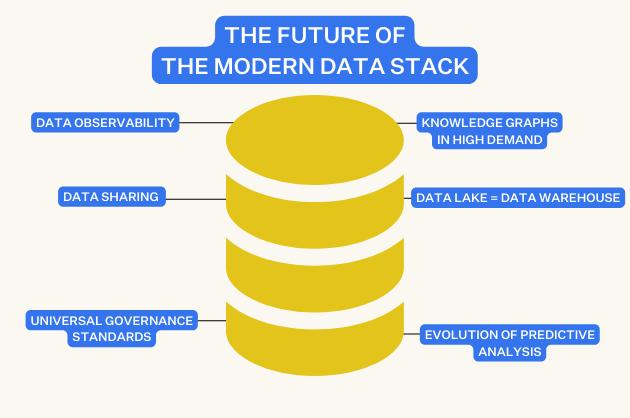

The reality is that building a data platform is a multi-stage journey and data teams have to juggle dozens of competing priorities. Data observability may not make sense for a company with a few dashboards connected to an on-premises database.

On the other hand, many organizations I’ve spoken with have increased their investment in their data platform without seeing a corresponding increase in data adoption and trust. If your company doesn’t use or trust your data, your best-laid plans for data platform domination are a pipe dream.

To answer this question, I’ve outlined seven leading indicators that it’s time to invest in data quality for your data platform.

7 signals you have “good pipelines, but bad data”

From speaking with hundreds of customers over the years, I have identified seven telltale signs that suggest your data team should prioritize data quality.

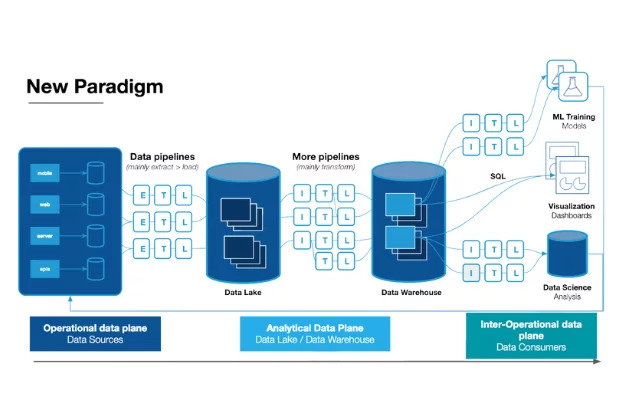

You’ve recently migrated to the cloud

Whether your organization is in the process of migrating to a data lake or between cloud platforms (e.g., Amazon Redshift to Snowflake), maintaining data quality should be high on your data team’s list of things to do.

After all, you are likely migrating for one of three reasons:

- Your current data platform is outdated, and as a result, data reliability is low, and no one trusts the data.

- Your current platform cannot scale alongside your business or support complex data needs without a ton of manual intervention.

- Your current platform is expensive to operate, and the new platform, when maintained properly, is cheaper.

Regardless of why you migrated, it’s essential to instill trust in your data platform while maintaining speed.

You should be spending more time building your data pipelines and less time writing tests to prevent issues from occurring.

For AutoTrader UK, investing in data observability was a critical component of their initial cloud database migration.

“As we’re migrating trusted on-premises systems to the cloud, the users of those older systems need to have trust that the new cloud-based technologies are as reliable as the older systems they’ve used in the past,” said Edward Kent, Principal Developer, AutoTrader UK.

Your data stack is scaling with more data sources, more tables, and more complexity

The scale of your data product is not the only criterion for investing in data quality, but it is an important one. As with any machine, the more moving parts you have, the more likely things are to break unless proper focus is given to reliability engineering.

While there is no hard and fast rule for how many data sources, pipelines, or tables your organization should have before investing in observability, a good rule of thumb is more than 50 tables. That being said, if you have fewer tables, but the severity of data downtime for your organization is great, data observability is still a very sensible investment.

Another important consideration is the velocity of your data stack growth. For example, the advertising platform Choozle knew to invest in data observability because it anticipated table sprawl with its new platform upgrade.

“When our advertisers connect to Google, Bing, Facebook, or another outside platform, Fivetran goes into the data warehouse and drops it into the reporting stack fully automated. I don’t know when an advertiser has created a connector,” said Adam Woods, CTO, Choozle. “This created table sprawl, proliferation, and fragmentation. We needed data monitoring and alerting to make sure all of these tables were synced and up-to-date, otherwise we would start hearing from customers.”
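The monitoring Woods describes can be approximated with a simple freshness check. The snippet below is a minimal sketch, not Choozle's or any vendor's implementation: the table names, the `last_synced` metadata, and the 24-hour threshold are all hypothetical, and in practice the timestamps would come from your warehouse or ingestion tool.

```python
from datetime import datetime, timedelta, timezone


def find_stale_tables(last_synced, now, max_age=timedelta(hours=24)):
    """Return the names of tables whose last sync is older than max_age, sorted."""
    return sorted(
        name
        for name, synced_at in last_synced.items()
        if now - synced_at > max_age
    )


# Hypothetical metadata: table name -> time of last successful sync.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_synced = {
    "google_ads_spend": now - timedelta(hours=2),
    "bing_ads_spend": now - timedelta(hours=30),
    "facebook_ads_spend": now - timedelta(days=3),
}

stale = find_stale_tables(last_synced, now)
print(stale)  # ['bing_ads_spend', 'facebook_ads_spend']
```

A check like this only helps if it runs on a schedule and covers tables that appear without anyone creating them by hand, which is the situation Woods describes.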

Your data team is growing

The good news is that your organization values data, which means you are hiring more data folks and adding modern tooling to your data stack. However, this often leads to changes in data team structure (from centralized to decentralized), the adoption of new processes, and knowledge about data sets living with only a few early members of the data team.

If your data team is experiencing any of these problems, it’s a good time to invest in a proactive approach to maintain data quality. Otherwise, technical debt will slowly pile up over time, and your data team will invest a large amount of their time into cleaning up data issues.

For example, one of our customers was challenged by what we call the “You’re Using That Table?!” problem. As data platforms scale, it becomes harder for data analysts to discern which tables are actively managed and which are obsolete.

Data certification programs and end-to-end data lineage can help solve these issues.
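To make the lineage idea concrete, here is a small sketch of how a lineage graph can answer "who depends on this table?" It assumes lineage is available as a mapping from each asset to the assets built directly from it. The asset names and the `downstream` helper are hypothetical, and real lineage tools derive this graph from query logs or pipeline metadata rather than a hand-written dictionary.

```python
from collections import deque


def downstream(lineage, table):
    """Return every asset reachable from `table` by following lineage edges."""
    seen = set()
    queue = deque([table])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


# Hypothetical lineage: upstream asset -> assets built directly from it.
lineage = {
    "raw_orders": ["orders_clean"],
    "orders_clean": ["revenue_daily", "orders_legacy_v1"],
    "revenue_daily": ["finance_dashboard"],
}

print(sorted(downstream(lineage, "raw_orders")))
print(sorted(downstream(lineage, "orders_legacy_v1")))
```

In this toy graph, `raw_orders` feeds everything up to the finance dashboard, while `orders_legacy_v1` has no downstream dependents. The second result is the sort of signal that might prompt a closer look at whether a table is obsolete, although it is not proof on its own.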

It’s important to note that while a growing data team is a sign to invest in data quality, even a one-person data team can benefit greatly from more automated approaches. For example, Clearcover’s one-man data engineering team was able to remove bottlenecks and add more value to the business by investing in automation.

Your team is spending at least 30% of their time firefighting data quality issues

When we started Monte Carlo, I interviewed more than 50 data leaders to understand their pain points around managing data systems. More than 60% indicated they were in the earlier stages of their data reliability journey and that their teams spent the first half of each day firefighting data quality issues.

For example, Gopi Krishnamurthy, Director of Engineering at Blinkist, estimated that prior to implementing data observability, his team was spending 50 percent of their working hours on data fire drills.

Multiple industry studies have confirmed: data engineers are spending too much of their (valuable!) time fixing rather than innovating. During my research, I also discovered that data engineering teams spend 30-50 percent of their time tackling broken pipelines, errant models, and stale dashboards.

Even organizations further along the curve that have developed their own homegrown data quality platform are finding that their teams spend too much time building and upgrading the platform, and that gaps remain.

Your team has more data consumers than they did 1 year ago

Your organization is growing at a rapid speed, which is awesome. Data is powering your hiring decisions, product features, and predictive analytics.

But, in most cases, rapid growth leads to an increase in business stakeholders that rely on data, more diverse data needs, and ultimately more data. And, with great data comes great responsibility as the likelihood of bad data entering your data ecosystem increases.

That is the irony: the most data-driven organizations will have more data consumers to spot any error when it arises. For example, at AutoTrader UK, more than 50% of all employees are logging in and engaging with data in Looker every month, including complex, higher-profile data products such as financial reporting.

Typically, an increase in data needs from stakeholders is a good indicator that you need to proactively stay ahead of data quality issues to ensure data reliability for end-users.

Your company is moving to a self-service analytics model

You are moving to a self-service analytics model to free up data engineering time and allow every business user to directly access and interact with data.

Your data team is excited since they no longer have to fulfill ad-hoc requests from business users. Likewise, your stakeholders are happy since a bottleneck to accessing data has been removed.

While this is exciting for your data team, your stakeholders need to trust the data. If they don’t trust it, they won’t use it for decision making. And, ultimately if your end-users don’t trust the data, it defeats the purpose of moving to a self-service analytics model.

There are two types of data quality issues: those you can predict (known unknowns) and those you can’t (unknown unknowns). As data becomes more integral to the day-to-day operations of data-driven organizations, the need for reliable data only increases.
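One way to picture the difference: a known unknown can be covered by an explicit test you write in advance, while an unknown unknown has to be caught by watching for behavior that departs from the norm. The sketch below illustrates both under simple assumptions. The `null_rate` and `is_anomalous` helpers, the sample data, and the z-score threshold of 3 are hypothetical choices for illustration, not a description of how any particular observability product works.

```python
from statistics import mean, stdev


def null_rate(values):
    """Known unknown: fraction of missing values in a column you know can break."""
    if not values:
        return 0.0
    return sum(v is None for v in values) / len(values)


def is_anomalous(history, latest, z_threshold=3.0):
    """Unknown unknown: flag `latest` if it sits far outside the historical range.

    Uses a plain z-score against the sample mean and standard deviation.
    """
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical column sample and daily row counts.
emails = ["a@example.com", None, "b@example.com", "c@example.com"]
daily_row_counts = [1000, 1020, 980, 1010, 990]

print(null_rate(emails))                     # 0.25
print(is_anomalous(daily_row_counts, 400))   # True
print(is_anomalous(daily_row_counts, 1005))  # False
```

The explicit test only catches the failure you thought to write down, whereas the statistical check needs no advance knowledge of what will go wrong. That is why the two approaches are usually treated as complementary.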

Data is a key part of the customer value proposition

Every application will soon become a data application. As a data leader, it’s exciting when your company finds a new use case for data, especially if it’s customer-facing. Personally, I couldn’t be more excited for this new norm.

Toast, a leading point of sale provider for restaurants, separates itself from its competitors based on the business insights it provides to its customers. Through Toast, restaurants get access to hundreds of data points, such as how their business has done over time, how their sales compared to yesterday, and who their top customers are.

“We say our customers are all Toast employees,” said Noah Abramson, a data engineering manager at Toast. “Our team services all internal data requests from product to go-to-market to customer support to hardware operations.”

While this is a huge value add, it also makes their data stack customer-facing. That means it has to be treated with the same reliability and uptime standards as the core product. When data quality isn’t prioritized, the data team and your customers get burned.

Data quality starts with trust

Much like gas companies need to trust their oil rigs to provide gas and oil to consumers every day, organizations need to trust their data platforms to deliver clean and reliable data to stakeholders.

By following a proactive approach to data quality, your team can be the first ones to know about data quality issues, well in advance of frantic Slack messages, terse emails, and other trailing indicators of data downtime.

Otherwise, valuable engineering time is wasted firefighting data downtime, your efforts to become a data-driven company will stall over time, and business users will lose trust in data.

While each situation varies based on your business, it’s best to bake data quality into your data platform as early as possible.

After all, not everyone has Spider-Man on speed dial.

Interested in learning more about taking a proactive approach to data quality for your data platform? Reach out to Barr Moses and book a time to speak with us.
