5 Ways To Ensure High Functioning Data Engineering Teams

Data engineering is a relatively young profession, even for the tech space. To put it in perspective, front-end engineering has been maturing as an industry discipline for twice as long.

Not only is the role itself rapidly evolving, but its tooling, processes, and team structures are fragmented and amorphous at best. As a result, the day-to-day responsibilities of a data engineer can look radically different from one company to another, depending on the needs of the business and the data that drives it.

Overcoming these challenges requires a specific mindset that allows a data engineer to continuously assess how their time and skills are adding value to the business.

That’s because not only is data engineering talent in high demand, but the very nature of handling big data necessitates prioritization and focus. In most scenarios, it is human capacity rather than technological capacity that serves as the larger constraint to realizing the full potential of your company’s data.

Simply put, data engineering time is a precious resource that needs to be invested wisely by both practitioners and data team leaders. In this post, I’ll share how I think about creating the right mix of people, processes, and technology to ensure data engineers are spending time on meaningful work that contributes to the overall business.

Cultivate the right mindset

A heightened focus on productivity doesn’t mean being overly prescriptive with people’s time. Like anyone else, data engineers do their best work when they are passionate about the project they’re working on. And frankly, micromanaging doesn’t just waste their time, it wastes the manager’s time as well.

I’ve found it to be more effective to hire for and cultivate two types of mindsets: engineering and analytical.

The analytical mindset helps you ask the right questions to evaluate business impact, whereas the engineering mindset helps you reverse engineer the path from the goal to the steps required to reach it. Combining the “why” and the “how” is what allows you to answer, “Is the juice worth the squeeze?”

Purely analytical thinkers might home in on the most important projects, but without the right engineering understanding they run the risk of over-engineering, under-resourcing, or mismanaging timelines. Conversely, a purely engineering mindset might build a very cool data product, but if the data isn’t applicable or the product wasn’t built with the right methodology, it could end up wasting not just people’s time but also compute resources.

You don’t necessarily need to have both skill sets right away (editor’s note: cue analytics engineering), but you need to be open to these ways of thinking. That openness allows additional responsibility, accountability, and ownership to be delegated and decentralized, which greatly accelerates the speed at which the team can operationalize its data to unlock business value. Speaking of decentralizing…

Leverage decentralized “data meshy” team structures

There are a number of factors that will determine whether a data mesh model is right for your team, including organizational structure, business workflow maturity, and data product use cases.

That being said, when you have an overly centralized data governance process, or a single governing body acting as a gatekeeper, things often move slowly and projects run the risk of being abandoned because of that bottleneck. Each subdivision within a large multinational corporation is going to have its own unique workflows, and the data models needed to support each part of the business will differ.

A data mesh working model, coupled with modern advancements in data lineage, storage, and analytics, enables domain teams to take ownership of their own data without having to look to a central authority for buy-in or validation. In a way, it is the data engineering version of the Netflix cultural value of teams that are “highly aligned, loosely coupled.”

This model also helps ensure a strong understanding and execution of each domain’s specific data governance processes (how the data is collected, where it lives, how it’s maintained), which are regulated differently from region to region.
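As a rough illustration of domain ownership paired with shared governance, the sketch below assumes each domain team publishes a small metadata record for its data product (who owns it, how it is collected, where it lives, how long it is kept), and an automated check applies region-specific rules instead of a central body reviewing every change. The `DataProduct` fields, region keys, and retention limits are hypothetical placeholders, not real regulatory values.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical region-specific rule: maximum retention in days per region.
# These numbers are placeholders for illustration, not real regulatory limits.
REGION_MAX_RETENTION_DAYS = {"eu": 365, "us": 730}


@dataclass(frozen=True)
class DataProduct:
    """Metadata a domain team publishes alongside the data it owns (hypothetical fields)."""
    name: str
    owner_domain: str      # team accountable for the data
    collected_from: str    # how the data is collected
    storage_location: str  # where it lives
    region: str
    retention_days: int    # how long it is maintained


def governance_issues(product: DataProduct) -> list[str]:
    """Shared, automated policy check that stands in for a manual central sign-off."""
    issues = []
    limit = REGION_MAX_RETENTION_DAYS.get(product.region)
    if limit is None:
        issues.append(f"{product.name}: no policy defined for region '{product.region}'")
    elif product.retention_days > limit:
        issues.append(
            f"{product.name}: retention {product.retention_days}d exceeds {limit}d for {product.region}"
        )
    if not product.owner_domain:
        issues.append(f"{product.name}: missing owning domain")
    return issues
```

The domain team owns and publishes the record; the shared check only encodes the minimal global rules, so the domain can ship without waiting on a gatekeeper.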

If you aren’t careful to right-size your level of governance, the team can go from a value center to a cost center. The same can be said for data quality. It’s critical for productive data teams to define the minimum data requirements for each data product (data SLAs can help) and then enforce those standards.
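Here is a minimal sketch of what “define the minimum requirements, then enforce them” can look like in Python. It assumes an SLA made of three illustrative dimensions (freshness, volume, and null rate on required columns); the `DataSLA` class and its thresholds are hypothetical, and a real team would choose dimensions that fit each data product.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataSLA:
    """Hypothetical minimum requirements for one data product."""
    max_staleness: timedelta          # how old the latest load may be
    min_row_count: int                # smallest acceptable batch
    max_null_fraction: float          # tolerated share of nulls per required column
    required_columns: tuple[str, ...]


def check_sla(rows: list[dict], loaded_at: datetime, sla: DataSLA,
              now: datetime | None = None) -> list[str]:
    """Return human-readable SLA violations; an empty list means the data passes."""
    if now is None:
        now = datetime.now(timezone.utc)
    violations = []

    age = now - loaded_at
    if age > sla.max_staleness:
        violations.append(f"stale: last load {age} ago, limit {sla.max_staleness}")

    if len(rows) < sla.min_row_count:
        violations.append(f"volume: {len(rows)} rows, minimum {sla.min_row_count}")

    for col in sla.required_columns:
        nulls = sum(1 for r in rows if r.get(col) is None)
        # An empty batch is treated as fully null for every required column.
        fraction = nulls / len(rows) if rows else 1.0
        if fraction > sla.max_null_fraction:
            violations.append(
                f"nulls: {col} is {fraction:.0%} null, limit {sla.max_null_fraction:.0%}"
            )
    return violations


def enforce_sla(rows: list[dict], loaded_at: datetime, sla: DataSLA,
                now: datetime | None = None) -> None:
    """Stop downstream publishing when the data product misses its SLA."""
    violations = check_sla(rows, loaded_at, sla, now)
    if violations:
        raise ValueError("SLA violated: " + "; ".join(violations))
```

Calling `enforce_sla` as the last step before a table is published turns the agreed minimum into a hard gate rather than a guideline.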

For example, thanks to advancements in natural language processing and computer vision, it is now often more effective to have smaller amounts of high quality, representative data than noisier, larger datasets for specific model training scenarios.

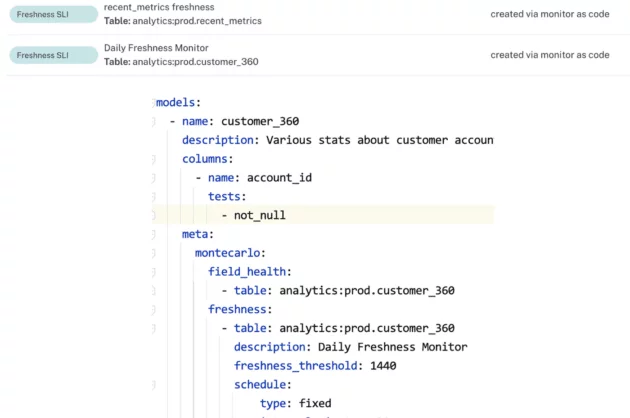

Treat data like code

For data engineering teams to be productive, data needs to be a first-class citizen. For me, data as code means:

- Data engineers work directly with source dataset owners or applications

- Automated data anomaly and business logic monitoring

- End-to-end testing of data pipeline code so that schema regressions and runtime issues don’t become a surprise

- Utilizing modern DevOps practices to reduce pipeline deployment and maintenance overhead
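The testing and monitoring items in this list can be sketched as ordinary functions plus pytest-style tests that run in CI on every pipeline change. This sketch assumes rows arrive as Python dicts and uses a simple z-score on daily row counts as the anomaly check; `EXPECTED_SCHEMA`, the threshold, and the sample numbers are hypothetical, and production monitoring would usually watch many more signals.

```python
from __future__ import annotations

import statistics

# Hypothetical expected schema for an "orders" output table; in practice this
# would be versioned alongside the pipeline code it describes.
EXPECTED_SCHEMA = {"order_id": int, "customer_id": int, "amount": float}


def schema_regressions(rows: list[dict], expected: dict[str, type]) -> list[str]:
    """Report missing columns, unexpected columns, and type drift per row."""
    problems = []
    for i, row in enumerate(rows):
        missing = expected.keys() - row.keys()
        extra = row.keys() - expected.keys()
        if missing:
            problems.append(f"row {i}: missing columns {sorted(missing)}")
        if extra:
            problems.append(f"row {i}: unexpected columns {sorted(extra)}")
        for col, typ in expected.items():
            value = row.get(col)
            # Strict check: nulls are allowed, but an int in a float column is flagged.
            if value is not None and not isinstance(value, typ):
                problems.append(
                    f"row {i}: {col} is {type(value).__name__}, expected {typ.__name__}"
                )
    return problems


def is_volume_anomaly(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it is more than z_threshold std devs from history."""
    if len(history) < 2:
        return False  # not enough history to estimate normal variation
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean
    return abs(today - mean) / stdev > z_threshold


# Pytest-style tests: schema regressions fail the build instead of surprising consumers.
def test_transform_output_matches_schema():
    output = [{"order_id": 1, "customer_id": 7, "amount": 19.99}]
    assert schema_regressions(output, EXPECTED_SCHEMA) == []


def test_volume_is_within_normal_range():
    daily_counts = [1000, 1040, 980, 1010, 995]
    assert not is_volume_anomaly(daily_counts, 1020)
    assert is_volume_anomaly(daily_counts, 120)
```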

Let the data drive the stack

It’s human nature to gravitate toward the known, and data engineers are no different when it comes to the bevy of technology solutions we leverage on the job. The question isn’t data lake vs. data warehouse, ETL vs. ELT, or data observability vs. data testing. The question is which tool is best for the data that’s important to you.

When I worked for Nauto, which develops AI software for driver safety, the main data types we handled were telemetry and video from the dashcams. Our storage and infrastructure paradigm was built around how we could best process this data, which essentially meant a lot of homegrown builds because commercial options didn’t exist.

However, if your organization is mainly concerned with analytics and business intelligence, there are multiple commercial data warehouse solutions (Snowflake, Redshift, BigQuery, among others) that will work, and work really well. Leveraging off-the-shelf commercial solutions, even if they cover only 80% of your requirements, can help lean data engineering teams make an outsized impact and lay the foundation for your business to derive value from its data.

Be the bridge between data and impact

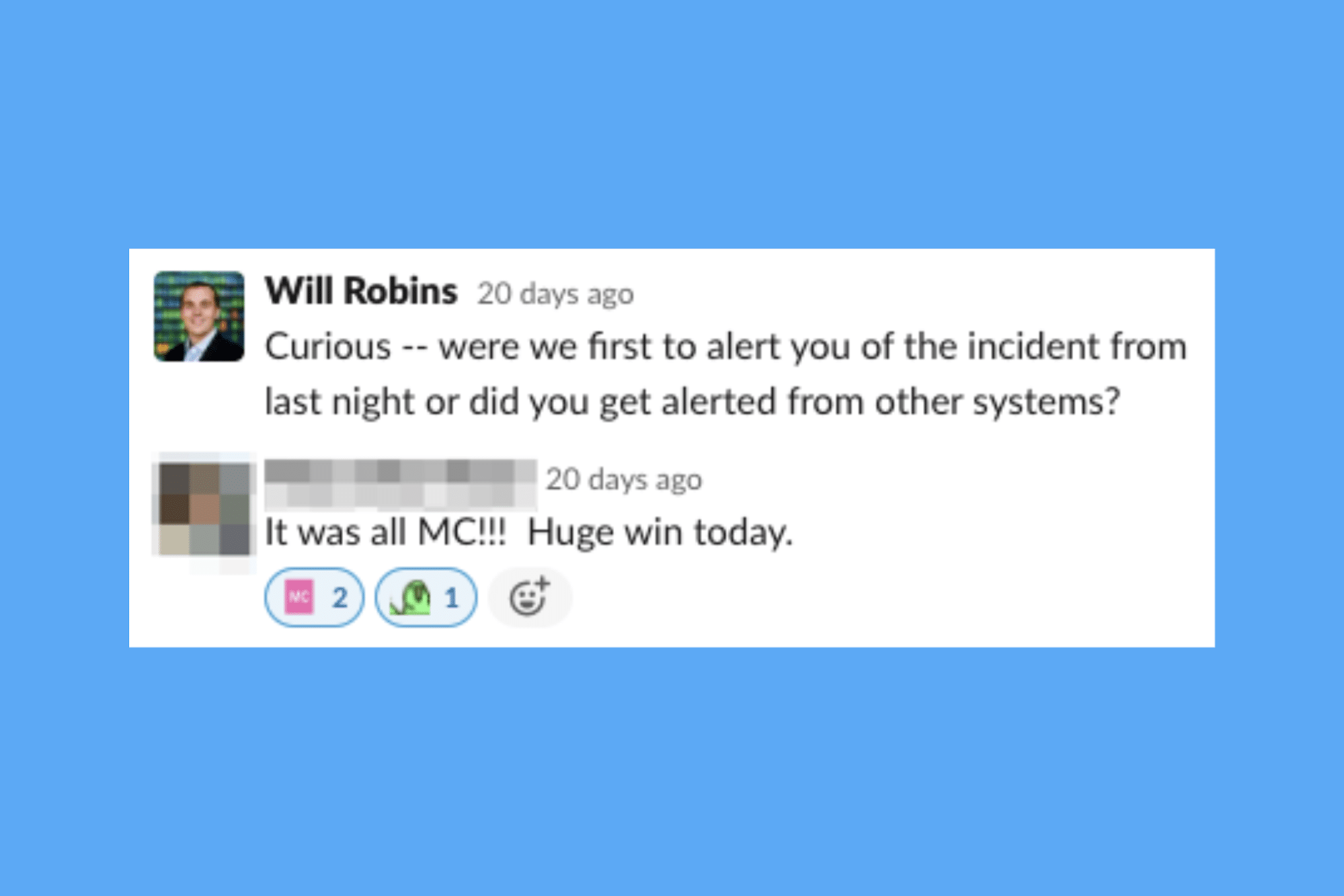

You shouldn’t be spending your time, or your team’s time, building tools that are already available or on tasks that machines can do better. Don’t spend more time building the technical components of a pipeline or firefighting data quality issues than is necessary to build stakeholder trust and a shared understanding of the dataset within a subject area.

The core competency of the team, where you should be spending more than a third of your time, is serving as the bridge, whether through code or collaboration, between the data source of truth and the data consumers on the other end.

This can take many forms, but a common example is serving as the bridge between the data generated by a web application and the product manager who needs those insights to create better user experiences.
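To make the web application example concrete, here is a hedged sketch of the kind of transformation that sits on that bridge: raw events go in, and a funnel metric a product manager can act on comes out. The event names and record shape are assumptions, and the sketch ignores event ordering and timestamps for brevity.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical funnel steps a product manager cares about, in order.
FUNNEL_STEPS = ("view_product", "add_to_cart", "checkout")


def funnel_conversion(events: list[dict], steps: tuple[str, ...] = FUNNEL_STEPS) -> dict[str, float]:
    """Turn raw {"user_id", "event"} records into the share of users reaching each step.

    A user counts toward a step only if they also reached every earlier step,
    so the values never increase further down the funnel.
    """
    seen: dict[str, set[str]] = defaultdict(set)
    for e in events:
        seen[e["user_id"]].add(e["event"])

    # Only users who entered the funnel at the first step form the denominator.
    entered = [u for u, evs in seen.items() if steps[0] in evs]
    if not entered:
        return {step: 0.0 for step in steps}

    result = {}
    for i, step in enumerate(steps):
        reached = sum(1 for u in entered if all(s in seen[u] for s in steps[: i + 1]))
        result[step] = reached / len(entered)
    return result
```

For example, if four users view a product, two add it to their cart, and one checks out, the result is `{"view_product": 1.0, "add_to_cart": 0.5, "checkout": 0.25}`.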

Work should be meaningful and impactful

The art of mastering data engineering is mastering your time. The volume, velocity, and variety of big data can be overwhelming, but not every data model or pipeline is of equal weight.

Prioritize, focus and thrive.

