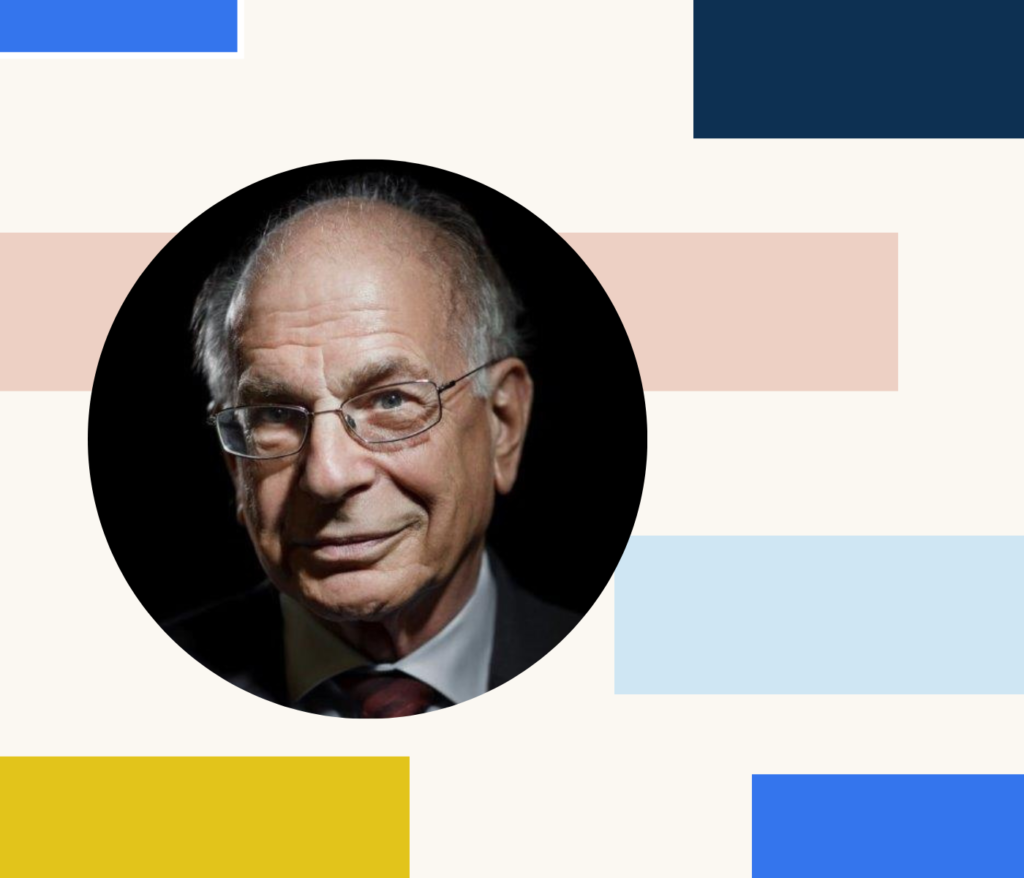

3 Questions with Daniel Kahneman, Author of Thinking, Fast and Slow

Last month at IMPACT 2022: The Data Observability Summit, I had the distinct privilege of chatting with Daniel Kahneman, Nobel Prize-winning psychologist and economist and author of one of my favorite books, Thinking, Fast and Slow.

Most notably, Daniel discussed the difference between two major types of thinking: System 1, decision making that operates automatically (say, doing simple multiplication), and System 2, decision making that requires effort and attention (for instance, solving a complex calculus problem).

Check out the full discussion below:

During our conversation, we discussed everything under the sun when it came to these two types of thinking, broader human behavior, decision science, cognitive bias, and even modern dance (fun fact: Daniel is a fan of the iconic choreographer Twyla Tharp).

Following our fireside chat, I asked Daniel a few final questions sourced from our audience. Enjoy!

Could we differentiate between S1 and S2 with regard to hemispheric specialization?

No connection to brain hemispheres. Altogether, localizing the systems is tricky. System 1 is much of the brain. We have good localization information for attention and executive function, which are essential aspects of what I (and many others) have called System 2 thinking.

Do you see humans and AI cooperating in the future (as in, humans can think broadly across disciplines and define problems while AI can solve specific, well-defined problems)? Or will AI be dominant?

In the short and medium term, people will use AI as a tool, keeping the important functions to themselves. But when AI comes close enough to give useful advice, it will not take long for it to operate better by itself, simply because AI can learn so much faster (for example, all autonomous cars pool all their experiences). Kasparov had the idea that the combination of grandmasters with computers would be superior, but a few years later AlphaZero, with no trace of human chess wisdom in it, could outplay not only grandmasters but also chess programs that drew on human knowledge.

Do you think AI will be able to truly develop empathy?

If what you mean by empathy is feeling what the other feels, the answer is surely no. But if you mean being able to use cues such as facial expressions and voice to predict what an individual will do next, and to do so more accurately than people can, the answer is surely yes.

+++

Enjoyed our session with Daniel Kahneman? Check out all of our on-demand talks from IMPACT!

Product demo.

What is data observability?

What is a data mesh--and how not to mesh it up

The ULTIMATE Guide To Data Lineage