Bringing Reliable Data and AI to the Cloud: A Q&A with Databricks’ Matei Zaharia

It’s one thing to say your company is data driven. It’s another thing to actually derive meaningful insights from your data.

Just ask Matei Zaharia, original creator of Apache Spark. Since its original release in 2010 by Matei and U.C. Berkeley’s AMPLab, Apache Spark has emerged as one of the world’s leading open source cluster-computing frameworks, giving data teams a faster, more efficient way to process and collaborate on large-scale data analytics.

With Databricks, Matei and his team took their vision for scalable, reliable data to the cloud by building a platform that helps data teams more efficiently manage their pipelines and generate ML models. After all, as Matei notes: “your AI is only as good as the data you put into it.”

We sat down with Matei to talk about the inspiration behind Apache Spark, how the data engineering and analytics landscape has evolved over the past decade, and why data reliability is top of mind for the industry.

You released Spark in 2010 as a researcher at U.C. Berkeley, and since then, it’s become one of the modern data stack’s most widely used technologies. What inspired your team to develop the project in the first place?

Matei Zaharia (MZ): Ten years ago, there was a lot of interest in using large data sets for analytics, but you generally had to be a software engineer and have knowledge of Java or other programming languages to write applications for them. Before Apache Spark, there was MapReduce, with Hadoop as an open source implementation, for processing and generating big data sets, but my team at Berkeley’s AMPLab wanted to find a way to make data processing more accessible to users beyond full-time software engineers, such as data analysts and data scientists.

Specifically, for data scientists, one of the first features we built was a SQL engine on top of Spark that allowed users to combine SQL with functions written in another programming language like Python.
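To make the idea of mixing SQL with functions written in Python concrete, here is a minimal sketch. It is not Spark code: it uses Python's built-in `sqlite3` module as a small stand-in so that it runs anywhere, and the `users` table and the `domain_of` function are hypothetical names chosen for illustration.

```python
import sqlite3


def domain_of(email):
    """Plain Python function: return the lowercased part of an address after '@'."""
    if email is None or "@" not in email:
        return None
    return email.split("@", 1)[1].lower()


# In-memory database used purely as a stand-in for a SQL engine.
conn = sqlite3.connect(":memory:")

# Register the Python function so SQL queries can call it by name.
conn.create_function("domain_of", 1, domain_of)

conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [
        ("ana", "ana@Example.com"),
        ("bo", "bo@example.com"),
        ("cy", "cy@test.org"),
    ],
)

# The query is ordinary SQL; the per-row logic lives in Python.
rows = conn.execute(
    "SELECT domain_of(email) AS domain, COUNT(*) AS n "
    "FROM users GROUP BY domain_of(email) ORDER BY 1"
).fetchall()
print(rows)
```

The analyst writes the grouping and counting in SQL, while the string handling that would be awkward in SQL stays in a regular Python function.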

Another early goal for Apache Spark was to make it easy for users to set up big data computations with existing, open source libraries by designing the programming interface for modularity, so that users can easily combine multiple libraries in an application. This led to hundreds of open source packages being built for Spark.

What encouraged you and the rest of your group at U.C. Berkeley to turn your research project into a solution for enterprise data teams?

Pretty early on in the research project, there were some technology companies that were interested in using Apache Spark, for instance, Yahoo!, which employed one of the largest teams using Hadoop at the time, and several startups. So we were excited to see if we could support their needs and, through this collaboration, generate ideas for new research problems since it was still such a new space.

Thus, we spent a lot of time early on making Apache Spark accessible for the enterprise. Then, in 2013, the core research team for this project was finishing up our PhDs and we wanted to continue working on this technology. We decided that the best way to get it to a lot of people in a sustainable and easy-to-use way was through the cloud, and Databricks was born.

Seven years later, your vision was spot on. In 2020, more and more data teams are taking to the cloud to build their data platforms. What are some considerations enterprise data teams should keep in mind when designing their data stacks?

First of all, it’s important to think about who you want to access your data platform. Who will be accessing the things that are produced in it, and what kind of governance tools will you need around that? If you don’t have permission to actually use the data, or if you have to ask another team to write a data engineering job vs. just running a simple SQL command, then you can’t access existing data or share the results with other stakeholders at the company easily, and that becomes a problem.

Another question is: what are your goals for data availability? Whether you’re just building a simple report or a machine learning model or anything in between, you want them to keep updating over time. Ideally, you wouldn’t be spending lots of time firefighting downtime in your applications.

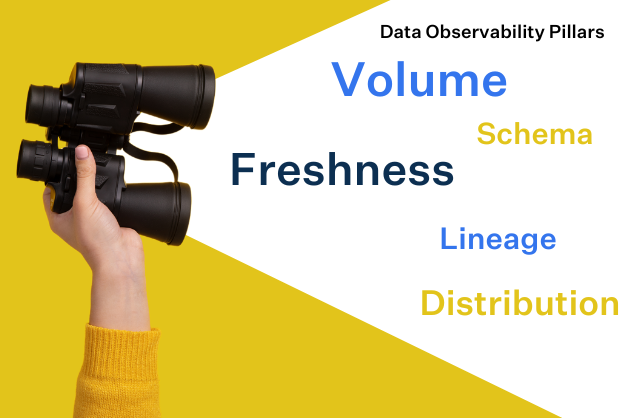

So, assessing the features you need to meet the data availability requirements of data users at your company is super important when designing your platform. This is where data observability comes in.

In the past, you’ve said “AI is only as good as the data you put into it.” I couldn’t agree more. Could you elaborate?

I would even go so far as to say AI or machine learning should really have been called something like “data extrapolation”, because that’s basically what machine learning algorithms do by definition: generalize from known data in some way, very often using some kind of statistical model. So when you put it that way, then I think it becomes very clear that the data you put in is the most important element, right?

Nowadays, more and more AI research is being published highlighting how little code you need to run this or that new model. If everything else you’re doing to train the model is standard, then that means that the data you put in this model is super important. To that end, there are a few important aspects of data accuracy and reliability to consider.

For example, is the data that you’re putting in actually correct? It could be incorrect because of the way you collected it, or it could be because of bugs in the software. And the problem is that when you’re producing a terabyte or a petabyte of data to put into one of these training applications, you often can’t individually check all of it and see whether it’s valid.

Likewise, does the data you put in cover a diverse enough set of conditions or are you missing critical real-world conditions where your model needs to do well?

To that end, what are some steps that data teams can take to achieve highly reliable data?

At a high level, I’ve seen a few different approaches, all of which can be combined. One of them is as simple as just having a schema and expectations about the type of data that will go into a table or into a report. For example, in the Databricks platform, the main storage format that we use is something called Delta Lake, which is basically a more feature-rich version of Apache Parquet with versioning and transactions on your tables. And we can enforce the schema of what kind of data goes into a table.
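As a rough illustration of what enforcing a schema on write means, the sketch below rejects any batch whose rows do not match the declared columns and types. It is a toy in plain Python, not Delta Lake's API; the `Table` class, the `SchemaMismatch` error, and the column names are all hypothetical.

```python
from dataclasses import dataclass, field


class SchemaMismatch(ValueError):
    """Raised when a row does not match the table's declared schema."""


@dataclass
class Table:
    schema: dict  # column name -> expected Python type
    rows: list = field(default_factory=list)

    def append(self, batch):
        # Validate the whole batch before writing anything, so a bad
        # batch leaves the table unchanged (all-or-nothing write).
        for i, row in enumerate(batch):
            if set(row) != set(self.schema):
                raise SchemaMismatch(
                    f"row {i}: columns {sorted(row)} != {sorted(self.schema)}"
                )
            for col, expected in self.schema.items():
                if not isinstance(row[col], expected):
                    raise SchemaMismatch(
                        f"row {i}: column '{col}' expected {expected.__name__}, "
                        f"got {type(row[col]).__name__}"
                    )
        self.rows.extend(batch)


orders = Table(schema={"order_id": int, "amount": float})
orders.append([{"order_id": 1, "amount": 9.5}])

try:
    # The second row carries a string where a float is expected.
    orders.append([
        {"order_id": 2, "amount": 3.0},
        {"order_id": 3, "amount": "12.00"},
    ])
except SchemaMismatch as err:
    print("rejected:", err)

print(len(orders.rows))
```

Because validation happens before any row is stored, the rejected batch leaves no partial data behind, which is the property that makes downstream reports easier to trust.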

Another data quality approach I’ve seen is running jobs that inspect the data once it’s produced and raise alerts. You ideally want an interface where it’s very easy to generate custom checks and where you can centrally see what’s happening, like a single pane of glass view of your data health.
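A job of this kind can be sketched in a few lines. The example below is an assumption-laden toy rather than any vendor's interface: the check names, the 10% null-rate threshold, and the `amount` column are hypothetical, and "raising an alert" is reduced to collecting messages in a list.

```python
def null_rate(rows, column):
    """Fraction of rows where `column` is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) is None) / len(rows)


def run_checks(rows, checks):
    """Run every named check and return alert messages for the ones that fail."""
    alerts = []
    for name, check in checks.items():
        if not check(rows):
            alerts.append(f"ALERT: check '{name}' failed")
    return alerts


# Custom checks are plain functions from a batch of rows to True/False,
# which keeps adding a new one cheap.
checks = {
    "non_empty": lambda rows: len(rows) > 0,
    "amount_null_rate_below_10pct": lambda rows: null_rate(rows, "amount") < 0.10,
    "amount_non_negative": lambda rows: all(
        r["amount"] >= 0 for r in rows if r.get("amount") is not None
    ),
}

batch = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": -5.0},
]

alerts = run_checks(batch, checks)
for message in alerts:
    print(message)
```

Returning all failures from one place, instead of stopping at the first, is what makes a central view of data health possible.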

The final thing I’d note relates to how you design your data pipelines. Basically, the fewer data copy, ETL, and transport steps you have, the more likely your system is to be reliable because there are just fewer things that can go wrong. For example, you can take a Delta Lake table and treat it as a stream and have jobs that listen to the changes so you don’t need to replicate the changes into a message bus. You can also query these tables directly with a business intelligence tool like Tableau so you don’t have to copy the data into some other system for visualization and reporting.

Data reliability is a very fast-evolving space, and I know Monte Carlo is doing a lot of interesting things here.

When you co-founded Databricks, the cloud was fairly nascent. Now, many of the best data companies are building for the cloud. What’s led to this rise of the modern cloud-based data stack?

I think it’s a matter of both timing and ease of adoption. For most enterprises, the cloud makes it much easier to adopt technologies at scale. With the cloud, you can buy, install, and run a highly reliable data stack yourself without expensive setup and management costs.

Management is one of the hardest parts of maintaining a data pipeline, but with cloud data warehouses and data lakes, it comes built in. So with a cloud service, you’re buying more value than just a bunch of bits on a CD-ROM that you have to install on your servers. The quality of management you have directly impacts how likely you are to build critical applications on it. If you’ve got something that’s going down for maintenance every weekend and it has to be down for a week to upgrade, you’re less likely to want to use it.

On the other hand, if you’ve got something that a cloud vendor is managing, something that has super high availability, then you can actually build these more critical applications. Finally, on the cloud, it is also much faster for vendors to release updates to customers and get immediate feedback on them.

It means the cloud vendor has to be very good at updating something live without breaking workloads, but for the user, it basically means you’re getting better software faster: imagine that you could access software today that you would only have gotten one or two years into the future from an on-premise vendor.

These are the big factors that have made cloud products succeed in the marketplace.

Learn more about Matei’s research, Apache Spark, or Databricks.

Interested in learning more about data reliability? Reach out to Barr Moses and the Monte Carlo team.
